AI Adoption: Not a Zero-Sum Game

Really, the prophets need to chill. This is a long game. And neither side will win.

It turns out the sky is not falling after all. Yes, a recent MIT report found that a staggering 95% of generative AI pilot projects in companies fail to deliver any bottom-line ROI (hbr.org).

Cue the breathless headlines and the stock market doing its best Chicken Little impression. But I’m here to tell you: take a deep breath. A 95% failure rate in the first inning of a technological revolution is about as surprising as rain in Seattle. In other words, it’s early. We’ve seen this movie before, and spoiler alert: it didn’t end with technology slinking away in defeat. It ended with technology quietly conquering the world while everyone who proclaimed it “overhyped” was busy eating crow.

Lessons from the Dot-Com Bubble (or, This Isn’t My First Rodeo)

I lived through the dot-com era, when the Internet was hyped as the end of everything. Remember the late 90s? Pundits declared that the web would make physical retail obsolete, render real-world intimacy extinct, and convert all goods into digital form.

Meanwhile, skeptics swore they’d never trust this “cyberspace” thing for something as mundane as paying a bill. (Fun fact: my own father confidently told me I’d never be able to pay bills online. He was only off by, oh, everyone.) Both sides were spectacularly wrong. The truth was boring and profound: the Internet didn’t replace the physical world – it augmented it.

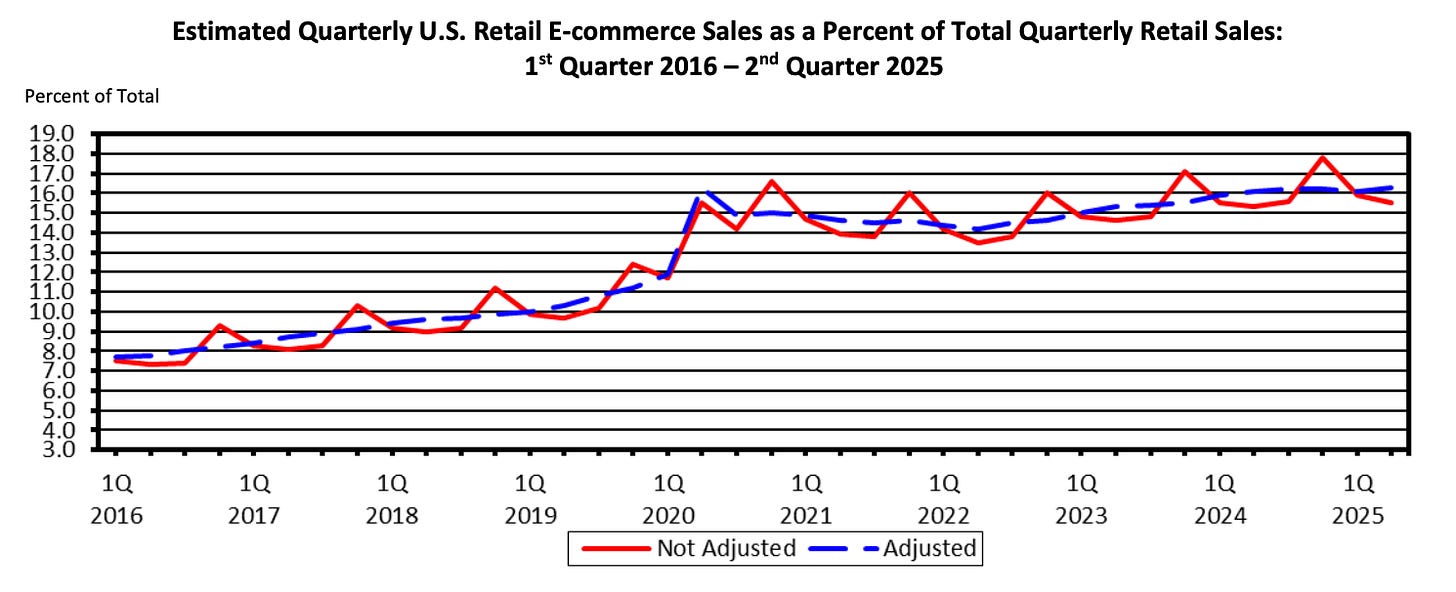

Consider retail: the Internet was supposed to kill brick-and-mortar stores dead. Fast forward to 2025, and over 80% of U.S. retail sales still happen in physical stores (livain.com). E-commerce accounts for only about 16% of retail – growing, yes, but not exactly an extinction-level event for malls. Instead, we got integration: buy online, pick up in-store; shop in-store, order online. The significant shift wasn’t replacement, it was partnership (livain.com).

So much for the prophecy that “remote shopping will flop” because “women like to get out of the house and handle merchandise.”

That 1966 TIME magazine prediction aged like milk. In reality, both women and men are plenty happy to buy things online when it’s convenient (content.time.com).

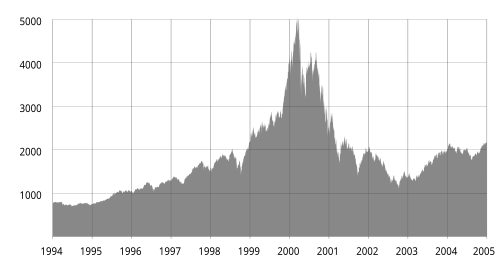

The dot-com boom itself had a notorious bust. The NASDAQ Composite fell roughly 78% from its March 2000 peak to its October 2002 low, erasing approximately $5 trillion in market value. Pets.com became a punchline. Yet Amazon, eBay, and other survivors emerged from that rubble and went on to dominate the global economy.

Bubbles burst – but they do not tell the whole story of an era. The dot-com bubble was a bubble, to be sure, but it also heralded the internet age, which fundamentally changed how we shop, work, and socialize. Physical stores didn’t disappear; they evolved. Human connection didn’t die; it found new channels (ever heard of social media?).

And I eventually did get to pay all my bills online – sorry, Dad. In fairness, my dad was an early, if infrequent, user of Intuit’s TurboTax. He even used his bank to pay vendors online, though the bank still sent each payment out as a paper check.

The lesson: early failure rates and hype cycles are not reliable predictors of long-term value. They’re more like the overly dramatic opening act that makes the eventual success story that much sweeter.

A 95% project failure rate for AI today tells me we’re in the fumbling, experimental phase.

It’s not evidence that AI is a worthless fad – any more than Pets.com’s collapse meant “the Internet is over.” In fact, it’s expected. We saw the same pattern in earlier tech revolutions, from the Industrial Revolution’s railway mania (where even Charles Darwin got swept up in the speculation) to the smartphone era.

There were bubbles, panics, manias – and then there was enduring change. Every tech revolution has a bubble; historically, that’s practically a law of nature. The presence of hype (and the inevitable pop) doesn’t negate the technology’s eventual impact. Bubbles are a feature of progress, not a bug.

Humans + AI: Augmentation, Not Obliteration

Now, about those doomsayers who growl that “AI will replace all the humans.” To put it in terms my Grammy would appreciate: hogwash. AI is a tool – a powerful one, but still a tool – and tools need people. We’ve been through automation scares before.

Remember when elevators were automated and we thought elevator operators would become obsolete? Okay, they did disappear… but somehow the economy survived and new jobs emerged.

Every wave of technology automates some tasks but also creates new ones, elevating the human role to a higher level of value (or at least a different one).

The smart money (and smart people) have realized that AI is best used to augment human capabilities, not replace them outright. Sundar Pichai calls AI an enabler of human potential, not a substitute for human teachers. And former IBM CEO Ginni Rometty put it perfectly:

AI will not replace humans, but those who use AI will replace those who don’t.

In other words, the future belongs to humans who partner with AI, not those who compete with it or hide from it.

Let’s check the reality on the ground. Are we seeing mass layoffs and a total takeover by robots? Not really. According to MIT’s data, AI hasn’t (yet) led to widespread job replacement – layoffs due to AI have been limited and industry-specific. Frankly, one could argue that AI has been more of an excuse for layoffs than a traceable cause of them.

Executives are divided on whether AI will reduce headcount in the future, which is a fancy way of saying “nobody really knows yet.” Meanwhile, surveys show workers are embracing AI as a helpful colleague. One study found that 70% of people prefer AI for quick, mundane tasks, but 90% still prefer humans for complex projects that require judgment and nuance.

In other words, we’ll let the chatbot draft an email or summarize a document, but when it comes to high-stakes decisions or creative strategy, most of us still holler for a human.

Think about your own work or life. Are you using AI daily? If so, you’re not alone. The true measure of AI’s impact isn’t how many human jobs it replaces; it’s how many humans find it useful.

Right now, that number is skyrocketing. ChatGPT, for instance, hit 100 million users faster than any consumer app before it and is reportedly used by around 190 million people every day.

And those are voluntary users – nobody’s forcing anyone to use a free AI assistant; people choose it because it adds value to their day. In many companies, employees aren’t waiting for permission either.

An MIT survey found that workers at 90% of large firms are using personal AI tools (such as a free ChatGPT account) on the job – often under the radar – while only 40% of those companies have officially purchased enterprise AI solutions.

Translation: humans will find a way to use any tool that enhances their productivity, whether or not the IT department has approved it. Trust me, I saw this firsthand at a Fortune 5 company.

That grassroots adoption is a leading indicator that AI is here to stay. When something becomes genuinely helpful, people vote with their feet (or clicks). By the time your workforce is secretly using AI to get work done faster, the genie is well out of the bottle.

The “AI will replace us all” trope is just the latest episode of Tech Panic Theater. Relax. AI will replace some tasks (probably the dull, repetitive ones first), but it won’t replicate the full spectrum of human skills anytime soon.

We’re not obsolete; we’re evolving.

In the near future, if you’re great at leveraging AI, you’ll be more valuable, not less. If you stubbornly refuse to use these tools, well, don’t be surprised when you’re outpaced by colleagues who do. This isn’t a zero-sum game of human vs. machine; it’s about humans who harness machines vs. humans who don’t. As a character on a TV show once said (handsome guy, presidential vibe): “What’s next?” The ones embracing AI are already figuring that out.

The New Breed of Coder (No More Code Monkeys)

Let’s get specific: jobs and professions will change, 100%. In fact, they already are. Take software development, a field near and dear to my heart. We’re moving from the era of developers hand-coding every line (like monks illuminating manuscripts) to an era of “vibe coding.” And no, it won’t be limited to just non-technical product managers.

For those who’ve been living under a rock: what on earth is vibe coding? It’s when developers focus more on what to build than on how to type it out – essentially leveraging AI to generate code while they guide the high-level design. Think of the developer as an architect or editor, and the AI as an eager junior programmer who never sleeps.

Some traditionalists harrumph at this: “But do these kids even understand the code?”

Listen, code is becoming increasingly affordable; understanding is becoming increasingly expensive.

We now have AI that can crank out functioning code faster than a roomful of interns fueled by Red Bull. That doesn’t make human developers obsolete; it makes their architecture skills more critical.

As one young engineer wrote:

“The best programmers won’t just write clean code. They’ll understand architecture, trade-offs, and system behavior. They’ll use AI like a junior dev – fast, tireless, and error-prone – but they’ll review, refactor, and own the result.”

In other words, knowing what to build and why becomes the real superpower, even if the AI handles much of the “how.”

I am an active vibe coder. I use AI tools to automate mundane tasks – such as boilerplate code, simple functions, and unit tests – so I can focus on the big picture and the more complex logic. Need to pull an insight out of a large open-source codebase like Spark? Sorry, but Claude beats most humans at that any day.

Do I understand the code that gets produced? You bet I do – experience and solid fundamentals matter more than ever when you’re supervising an AI sidekick. In fact, I can often build bigger, more complex systems because I’m not bogged down writing every semicolon myself.

Meanwhile, a less experienced coder trying the same tools might get lost in a sea of AI-generated spaghetti. The curmudgeons asking “But do you really understand it?” are asking the wrong question.

The right question is: does it work, and does the human overseeing it understand the system?

If yes, who cares if an AI wrote 90% of the trivial code? We’re solving bigger problems with less tedium. (And if no, well, that project was doomed whether a human or AI wrote the code.)

Mark my words: in a few years, manually writing every line of code will be about as common as manually doing long division. Sure, you can do it, and sometimes you should for learning or precision. But most of the time, you’ll let a calculator – or in this case an AI – handle the rote stuff while you provide the guidance and creativity. This doesn’t spell the end of software developers; it spells the end of software developers acting like machines. We get to be more human – more design-oriented, more creative – and let the machines do the machine work. That’s progress. Just don’t confuse using power tools with not knowing how to build; the master carpenter still measures twice, even if a nail gun does the hammering.

Bubbles, Bubbles, Toil and Trouble

Let’s tackle another buzzkill: “AI is just a bubble! It’s all hype and froth!” Well, is AI in a bubble phase? Quite possibly yes. But here’s the thing:

Has any major tech revolution not had a bubble?

Bubbles are practically a rite of passage for transformative technologies. They’re the economic equivalent of teenage acne – an annoying and ugly phase, but a sign of growth nonetheless.

History lesson: the Industrial Revolution was punctuated by wild speculative bubbles. For example, Britain’s Railway Mania in the 1840s had investors literally tripping over each other to buy railway stock, driven by hype about a world-changing technology (railroads) and dreams of untold profits.

Share prices doubled, countless new railway companies were floated on nothing but optimism and maybe a nifty route map. And yep, it crashed spectacularly. Fortunes were lost, and panic ensued. Does that mean railroads were a dud? Hardly. After the smoke cleared, the tracks still got laid, trains still transformed commerce and society, and the world moved forward (quite literally, on rails). The bubble was a footnote; the railways themselves were the story.

Fast forward to the dot-com bubble of 1999–2000, which we touched on earlier and which was frothy beyond belief. Companies with no profits (and sometimes no products) were going public for billions. When reality set in, the crash wiped out trillions of dollars in paper wealth (internationalbanker.com).

It was carnage.

But was that the “end” of the Internet? Tell that to Amazon and Google, or to Facebook, which was built on the infrastructure the bubble left behind. Today, the survivors of dot-com mania and their successors are so successful that we’re busy worrying they have too much power. The bubble was painful for investors, sure, but it wasn’t a verdict on the technology.

It was a necessary shakeout that separated flimsy ideas from solid ones.

The Internet boom left behind real infrastructure, new business models, and a generation of people with digital skills. Similarly, the current AI gold rush may well end in a pop – with many VC darlings flopping and some cool demo products never finding a market. We might indeed see an “AI winter” of sorts if expectations race too far ahead of what’s possible in the short term. But that wouldn’t be a death – it would be a comma in the story of AI, not a period.

If you want proof that bubbles don’t equal doom, follow the money after the bubble. After the railway bubble, railroads continued to be built. Following the dot-com crash, Internet usage continued to grow exponentially.

Right now, we’re already seeing AI woven into everyday life, bubble or not. Investors may chase fads (oh boy, do they ever – nothing like FOMO to inflate a valuation), but real adoption is driven by real utility, not stock prices. So yes, by all means be wary of irrational exuberance (and keep a pin handy for when valuations get overinflated).

But don’t conflate the froth with the ocean.

When this AI bubble, if it exists, eventually pops, we’ll lose some pet startups and paper billionaires – but we’ll be left with the tangible advancements AI brought in the meantime. The leading indicator of AI’s value isn’t how giddy VCs are feeling this quarter; it’s whether people and businesses keep using these tools when no one’s looking. And all signs on that front point to “yes.” Utility has a long half-life.

Watch What People Do (Not What They Say)

Let’s drill down on that point: the leading indicator of AI’s significance is everyday human usage. Not venture funding, not media hype, not doomsday op-eds – just plain old daily active users. If people integrate a technology into their daily routines en masse, then take it to the bank: that tech is making a dent. By that measure, AI is already performing exceptionally well.

We’ve mentioned ChatGPT’s massive user base. It’s not alone. Enterprise AI adoption, even if clumsy, is widespread. Over 80% of organizations have experimented with tools like ChatGPT or Microsoft’s Copilot, and nearly 40% have deployed them in some form (aimagazine.com). All of that happened within two years of these tools bursting onto the scene.

When something is genuinely useful, you don’t have to force it down people’s throats. Remember the jump from BlackBerry to iPhone? Nobody needed to mandate “phone usage”; people lined up around the block. We’re seeing a similar organic uptake with AI assistants and automation tools. Employees are using AI to draft emails, brainstorm ideas, generate reports, and more.

Students are (sometimes sneakily) using it for homework help. Doctors are starting to use it to summarize patient notes. I even know a guy who used an AI to argue with his cable company’s customer support (the AI was very politely ruthless, I’m told). This is the stuff that doesn’t always make flashy headlines, but it’s the ground truth of adoption.

So if you’re trying to gauge where AI is going, look at the demand-side indicators:

How many people find it valuable enough that they’d miss it if it were gone?

We’ve crossed that threshold already. Entire communities of “AI power users” have emerged, sharing tips on how to craft the most effective prompts to achieve the best results. Heck, we’ve coined the term “prompt engineer,” and there are job postings for it. (Yes, we live in a world where talking to a computer really well can be a six-figure job. Take that, high school guidance counselor who told me chatting wasn’t a skill.)

AI’s daily integration into workflows is accelerating. That is why I don’t lose sleep over whether some CFO thinks the ROI of generative AI is lacking after six months (marketingaiinstitute.com).

Of course, most pilots haven’t hit ROI yet – they’re pilots! If anything, I worry more about companies moving too slowly on adoption, not too fast. The real risk isn’t that AI never delivers value; it’s that you fail to figure out how it can provide value for you while your competitors do.

Because once someone cracks that code and starts using AI effectively every day, it’s hard to catch up. (Think of how some companies adapted to the Internet and some didn’t – those that got it, like Amazon, thrived; those that scoffed, like Borders, died.) In short: watch usage trends like a hawk. That’s where the signal is, buried under all the noise.

Betting on the Right Use Cases

If you’re an entrepreneur, executive, or just a curious bystander wondering where to place your bets in the AI arena, here’s my advice:

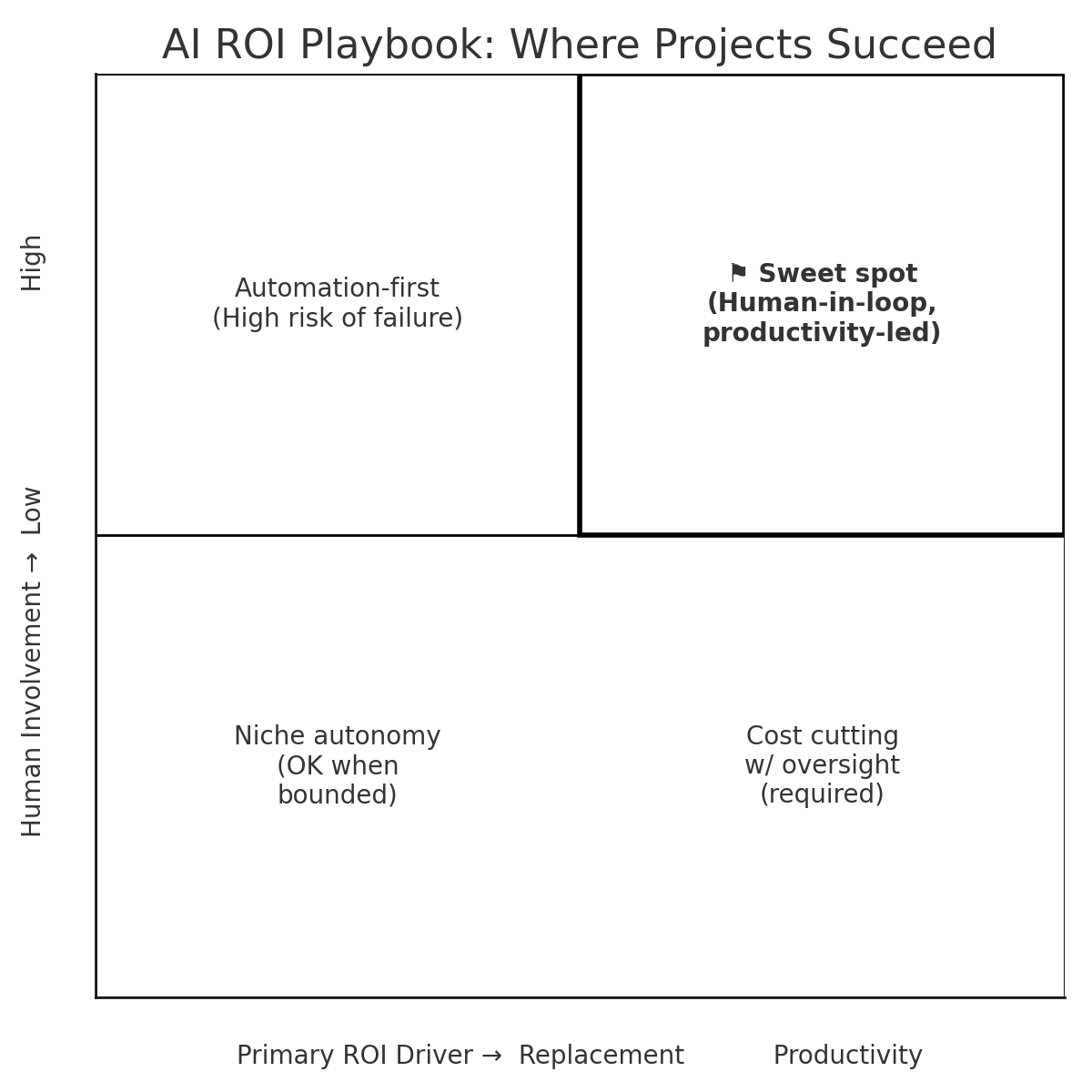

Focus on augmentation, not pure automation.

Look for areas where AI can empower humans to be more productive, rather than simply replacing humans in a zero-sum swap. In practical terms, the sweet spot for AI projects is where:

A human is still in the loop. Aim to pair AI with human judgment rather than removing humans entirely. Humans plus machines > machines alone, in many complex domains. (Think AI diagnostic tool + doctor, not AI doctor with no human oversight).

Success isn’t defined as “firing an entire department.” If your AI project’s ROI relies solely on eliminating jobs wholesale, you’re likely doing it wrong. Projects framed around massive headcount reduction tend to breed resistance and ethical issues, and often fail to capture the nuanced work that humans actually do. Instead, define ROI in terms of enhanced productivity, quality, or new capabilities, rather than just cost-cutting through layoffs.

Dear CEOs: announcing layoffs and pinning them on AI will only breed resistance to AI.

Productivity and value-add are the goals. The best AI use cases actually unlock new value or save significant time, rather than just doing the same work slightly cheaper. If an AI can do a task in 5 minutes that took a person 5 hours – that’s a win. If it can enable a new service or insight that wasn’t feasible before, even better. The ROI will follow from the new value created, not just from shaving a line item in the budget.

You’ve tried it manually (with AI) first. Before you invest $10 million in building a custom AI solution, test the concept with existing AI tools. Can a bright intern accomplish a similar outcome using off-the-shelf AI like ChatGPT or Claude? If yes, you have validation. If no, rethink. Far too many AI projects fail because nobody bothered to see if the idea actually works in practice at a small scale. Treat AI projects like science experiments: form a hypothesis, run a quick test with minimal investment (even if it’s a hacky solution), and observe results. Only then double down. Given the plethora of AI services available, there’s almost always a way to simulate the workflow manually to gauge feasibility. Use that to your advantage.

In short, bet on use cases where AI is a force multiplier for humans – where it’s “Humans with AI” rather than “Humans versus AI.” The companies that navigate this well will reap the benefits. Those that swing for fully autonomous solutions that magically replace dozens of employees in one go… well, they often end up as that statistic we opened with (the 95% that never deliver ROI). And here’s a pro tip: if you can’t achieve a meaningful result with a baseline AI tool (like using ChatGPT manually), throwing dozens of engineers and millions of dollars at a custom AI probably won’t fix that. Use the readily available tools as your canary in the coal mine. ChatGPT and Claude are, in this sense, leading indicators for your AI project – if they help you get a job done in prototype form, that’s a green light to invest further. If they don’t, maybe the idea isn’t ripe or realistic yet.

The One Superpower AI Has (and Humans Don’t)

Finally, let’s address a fascinating new capability that AI brings to the table – one that truly differs from what groups of humans can easily accomplish.

Namely: coordinated, tireless, multi-agent workflows all aligned to a single goal. (Try saying that at a family dinner.)

What do I mean? I mean, you can deploy an army of intelligent agents that specialize in different tasks, and have them work together towards one objective, without office politics, without coffee breaks, and without each agent asking “but what’s in it for me?” Other than perhaps pleasing their human user, these AI agents have no agenda or ego. They are, in a sense, the ultimate team players – because they’re all on the same team. Your team.

This is something new in the world. When you put a group of humans on a project, each person brings their own motivations – career aspirations, pride, competition, and sometimes genuine team spirit, sometimes not. Humans are fabulous, messy creatures.

A group of AIs, however, can be spun up to instantly collaborate with a singular focus on the task. We’re already seeing the early versions of this: one AI agent can now delegate subtasks to another, then to another, forming a daisy chain of digital workers all coordinating to solve a complex problem (medium.com). It’s like having a personal swarm of experts who never sleep and never argue. (Unless you prompt them to role-play an argument, I suppose, but that’s on you.)

Imagine a future scenario: You, the human, say, “Hey AI, plan me a marketing campaign for our new product launch.” That request might spawn a cadre of AI agents – one generates market research, one drafts social media copy, one designs graphics, one crunches budget numbers – and they all feed results to a coordinator agent that compiles the final plan for you.

This isn’t sci-fi; prototypes of such multi-agent systems already exist, and they’re improving rapidly. I’m building one right now.
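To make that coordinator pattern a little more concrete, here is a minimal Python sketch. It assumes each specialist agent is just an async function; the names (`make_stub_agent`, `coordinator`, `compile_plan`) and the role labels are hypothetical, and the stubs return placeholder text instead of calling a real model. What it shows is the shape of the idea: one brief fans out to several specialists concurrently, and a coordinator collects their results for a human to review.

```python
import asyncio
from typing import Awaitable, Callable

# A hypothetical specialist "agent": takes a brief, returns its piece of the work.
AgentFn = Callable[[str], Awaitable[str]]


def make_stub_agent(role: str) -> AgentFn:
    """Build a placeholder agent; a real one would wrap a model or API call."""
    async def agent(brief: str) -> str:
        await asyncio.sleep(0)  # stand-in for a slow model/API call
        return f"[{role}] draft for: {brief}"
    return agent


async def coordinator(brief: str, specialists: dict[str, AgentFn]) -> dict[str, str]:
    """Send the same brief to every specialist concurrently and collect results by role."""
    roles = list(specialists)
    # asyncio.gather runs the agents concurrently and returns results in input order.
    results = await asyncio.gather(*(specialists[role](brief) for role in roles))
    return dict(zip(roles, results))


def compile_plan(sections: dict[str, str]) -> str:
    """Assemble the pieces into one plan; a human still reviews the result."""
    return "\n".join(f"{role}: {text}" for role, text in sections.items())


if __name__ == "__main__":
    team = {
        role: make_stub_agent(role)
        for role in ("market_research", "social_copy", "graphics", "budget")
    }
    plan = asyncio.run(coordinator("new product launch", team))
    print(compile_plan(plan))
```

Swapping a stub for a real model call would not change the coordinator at all, which is the point of the design: adding a fifth (or fiftieth) specialist is one more dictionary entry, not a rewrite of the orchestration.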

The real value here is speed and scalability. Need ten times the output? Spin up ten times the agents. Humans don’t scale that way. You can’t hire and train 100 new employees for a two-week project, but you can certainly instantiate 100 AI agents for a task and then shut them down when done.

Now, this isn’t to say AI agents can do everything perfectly (they absolutely can’t, at least not today). They will make mistakes, and they still need a human at the helm to guide and QA the overall process. But this capability – let’s call it massively parallel cognitive labor – is genuinely novel.

It means that workflows previously bottlenecked by human coordination can run far more efficiently.

It’s like having a hundred copies of Jarvis from Iron Man, each handling a piece of the work and all unwaveringly obedient.

Humans, bless us, are not built to be 100% obedient or focused on someone else’s goal 24/7. We think about lunch, or our paycheck, or how Karen in accounting never says thank you. AI agents don’t grumble. They grind away at the problem set before them.

This is one area I advise keeping a sharp eye on. We’ve only scratched the surface of multi-agent AI workflows. Early experiments (AutoGPT et al.) were clunky, but they hint at what’s coming. The main complaint was latency. But if the agents are augmenting a human, turning an hour of that person’s time into days’ worth of accomplishment, latency hardly matters.

When this matures, it could supercharge productivity in ways we haven’t yet fully grasped. It’s not about replacing people; it’s about giving each person a battalion of tireless assistants. If I can delegate ten tasks to AI agents and have them coordinate among themselves to deliver a result by morning, that’s a game-changer.

For businesses, that scales faster than hiring and onboarding an equivalent human team (and, again, no hurt feelings when you turn the agents off afterward).

In sum, AI isn’t a zero-sum game between humans and machines. It’s a plus-sum game: humans with machines versus humans without machines.

The former will always outcompete the latter. We’ve seen this pattern from the steam engine to the spreadsheet. AI is just the latest, albeit most brain-like, tool we’ve created. Yes, most early projects will fail – as experiments must. Yes, there will be hype, bubbles, and disillusionment. And yes, jobs will change – some will go away, and new ones (we can’t even imagine yet) will emerge. But through all that sound and fury, the fundamental story is one of enablement.

AI enables us to do more, faster – sometimes astonishingly more – but it still relies on us to point it in the right direction.

So the next time someone declares, “AI is overhyped” because a pilot project failed, or proclaims, “AI will replace everyone” because a pilot succeeded, just gently remind them: “Post hoc ergo propter hoc” – kidding, kidding.

Remind them that we’ve been here before. The early failure of 95% of projects is not a death knell; it’s a familiar stepping stone. The wild predictions of total automation are not prophecies; they’re the echoes of past fears. The truth is, as it often is, more nuanced and a lot more interesting:

AI and humans will dance, awkwardly at first, then in sync. And those who learn the steps will thrive.