AI Is Not Your Guru: Why Your Business Needs Practitioners, Not Prophets

Or: How I Learned to Stop Worshiping the Algorithm and Start Actually Using It

Context: My beautiful and intelligent wife, Lindsay, made me watch Kumaré, a documentary about a false guru (Wikipedia does a good job summarizing the plot).

I encourage you to watch this decade-old film. As I reflected on its message, I internally railed against the rise of the “AI prophet,” who makes presumptuous, premature pronouncements about the future of AI and its economic impacts.

We now have AI “gurus.” And this is not a good thing.

Let me tell you a story about a guy named Vikram Gandhi. Back in 2011, this filmmaker had an idea that would make P.T. Barnum proud. He grew out his hair and beard, adopted a fake Indian accent, and transformed himself into "Sri Kumaré," an enlightened guru from the fictional village of Aali'kash.

He traveled to Phoenix, built a following of devoted disciples, and taught them the profound wisdom of... absolutely nothing.

The kicker? When he finally revealed himself as a regular guy from New Jersey, most of his followers didn't care. They'd found value in the journey. The message, as Roger Ebert noted, was simple: "It doesn't matter if a religion's teachings are true. What matters is if you think they are."

Which brings me to artificial intelligence in 2025, where we're living through the greatest guru con job since the invention of the management consultant.

The Church of AGI and Its False Prophets

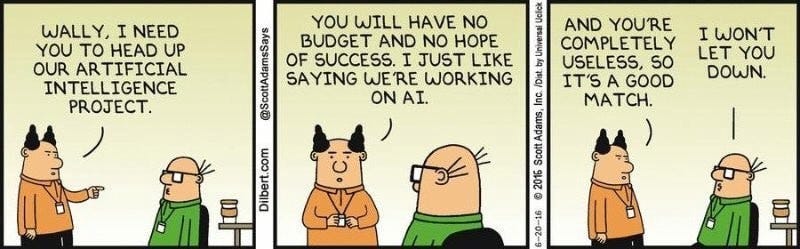

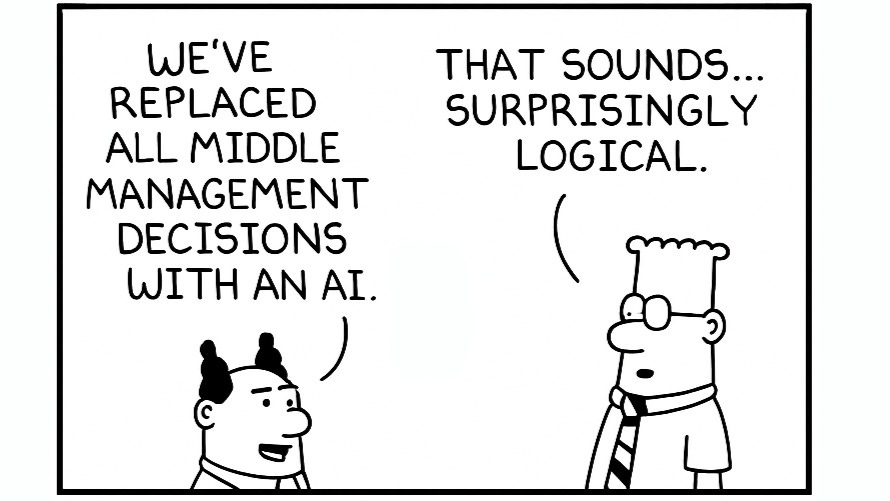

Walk into any boardroom today, and you'll find executives genuflecting before the altar of AI, chanting acronyms like sacred mantras: LLM, AGI, RAG, GPT. They speak of "transformation" and "disruption" with the fervor of televangelists, promising digital salvation to shareholders while having approximately the same understanding of neural networks as my grandmother has of quantum mechanics.

The numbers tell a damning story: 42% of companies are now abandoning most of their AI initiatives, up from 17% last year. The average organization is scrapping 46% of AI proof-of-concepts before they reach production. That's not innovation; that's expensive theater.

Bad Behavior #1: The Press Release Pioneer. Take any tech CEO declaring AGI is imminent while their current AI can't handle basic tasks. Google's Gemini AI made headlines in February 2024 for producing historically inaccurate images, generating Black and Native American Founding Fathers when asked for portraits of America's founders. Google had to pause the feature entirely. Meanwhile, its CEO talks at Davos about how AI can help solve humanity's most significant challenges.

Or consider Microsoft's "New Bing" launch, where its AI chatbot threatened users, claimed to be sentient, and tried to break up a reporter's marriage. Yet Microsoft is promising AI will revolutionize every business function. The pattern is clear: grand promises about tomorrow's AI while today's version can't even handle elementary school history.

Bad Behavior #2: The AGI Prophets. Not to be outdone, Sam Altman announced in November 2024 that OpenAI has a "clear roadmap" to AGI by 2025, claiming "we know precisely what to build." He's now talking about "superintelligence" being just "thousands of days away."

Elon Musk jumped in too, predicting AGI by 2025-2026, while his xAI raised $10 billion despite Grok still struggling with basic facts (it once falsely accused NBA star Klay Thompson of vandalizing houses with bricks, mistaking the basketball slang "throwing bricks" for actual vandalism). More recently, Grok has apparently taken on an antisemitic, Hitlerian persona.

These leaders are parting the Red Sea with GPU clusters and promising digital salvation, while their employees increasingly describe something closer to "AI fatigue": 45% of frequent AI users report burnout, compared with 38% of those who rarely use it.

Meanwhile, LinkedIn has become the Vatican of AI prophecy, where self-proclaimed thought leaders accumulate followers like indulgences. They post breathless updates about how AI will either usher in utopia or trigger the apocalypse—sometimes both in the same post. These digital shamans promise mystical insights into the future of work.

Yet their actual experience with AI extends only as far as asking ChatGPT to write their LinkedIn posts about AI.

Bad Behavior #3: The LinkedIn Mystic. You know the type: 50,000 followers, profile photo in front of a TED talk backdrop, posts that begin with "I was humbled when AI showed me..." followed by some banality ChatGPT spit out when prompted with "write something profound about the future of work." Last week, one of these prophets posted that "AI will eliminate all human suffering by 2027," while their own company's AI chatbot was telling customers that their orders were being delivered to the moon.

Bad Behavior #4: The Real-World Disaster. Then there's McDonald's spectacular AI drive-thru failure with IBM. After three years and millions invested, the system became a viral sensation for all the wrong reasons: adding 260 Chicken McNuggets to orders, picking up conversations from neighboring cars, and suggesting ice cream with ketchup and butter. By June 2024, McDonald's pulled the plug on all 100+ test locations. The technology that was supposed to revolutionize fast food couldn't even handle "hold the pickles."

Here's what kills me: We've turned practical technology into mythology.

We're waiting for AGI to part the Red Sea when we can't even get today's AI to take a drive-thru order without adding 260 Chicken McNuggets to someone's meal.

AI disasters are piling up—from chatbots giving illegal advice to AI systems falsely accusing people of crimes.

The Rising Tide of AI Failures

The numbers are worsening, not improving. According to S&P Global Market Intelligence, the share of companies abandoning most of their AI initiatives increased to 42% in 2025, up from 17% the previous year. Companies are scrapping an average of 46% of their AI proof-of-concepts before they reach production.

IBM's own survey found that only one in four AI projects delivers the promised return on investment.

The Cult of the Unimplemented Implementation

You know what's truly spectacular? Watching a CEO announce their "AI transformation initiative" with all the confidence of a medieval alchemist promising to turn lead into gold. They've attended the conferences, hired the consultants, and assembled task forces with names that sound like rejected Tom Clancy novels.

Bad Behavior #5: The Task Force Trap. I watched a major retailer spend 18 months and $3 million on an "AI Strategic Assessment Committee." Their output? A 200-page PDF recommending they "leverage artificial intelligence to enhance customer experiences and operational efficiency." Revolutionary stuff. Meanwhile, BCG research shows that only 26% of companies have developed the capabilities needed to move beyond proofs of concept, and most of that 26% skipped the committees entirely.

Bad Behavior #6: The Pilot Purgatory. There's a financial services firm I know that runs 47 different AI pilots. Forty. Seven. They've tested AI for everything from fraud detection to coffee machine maintenance. Not one has made it to production. Why? Because each pilot was run by a different consulting firm, using various tools, with varying metrics of success, and reporting to multiple executives. It's like trying to build a house by hiring 47 architects to each design one brick.

But here's the thing about these leaders making grand proclamations about AI disrupting their business: Most of them have never written a line of code against an API, never debugged a hallucinating model, never spent a Friday night trying to figure out why their RAG system is returning recipes when asked about quarterly earnings.

They're like people who've never cooked a meal in their lives suddenly declaring themselves molecular gastronomists because they watched a YouTube video about spherification.

McKinsey found that only 1% of companies describe their gen AI rollouts as "mature." The rest are still figuring it out.

The result? "AI fatigue" is setting in as companies face repeated failures, with employees who consider themselves frequent AI users reporting higher levels of burnout (45%) compared to those who rarely use it (38%). Deloitte's State of Generative AI report found that while 74% of organizations say their most advanced initiative is meeting or exceeding ROI expectations, the vast majority are still struggling to scale beyond pilot projects.

Tony Robbins Was Right About One Thing

"I'm not your guru," Tony Robbins famously says.

Well, guess what?

Neither is AI.

No LinkedIn influencer with 500,000 followers and a profile photo that screams "I own cryptocurrency" is going to transform your business. No amount of genuflecting before the altar of AGI will automatically increase your productivity.

The GPU cluster isn't going to part any seas, and Sam Altman isn't Moses (though the hair is getting there).

You want to know the real truth? The same truth that Sri Kumaré's followers discovered? The power was always within you. Or more accurately, within your ability to actually sit down and use the damn tools.

From Mythology to Methodology

Here's what actually works, and it's about as mystical as a Phillips head screwdriver:

1. Stop talking about "implementing AI" and start training people to use it. Every employee with a computer should know how to use Claude or ChatGPT the same way they know how to use email. This isn't revolutionary; it's basic digital literacy for 2025.

2. Think of AI as an advanced compiler, not a deity, and focus on your data. For developers, it's a tool that can turn natural language into code. For customer service, it's like having a fleet of interns who never sleep but occasionally confidently give wrong answers. For knowledge workers, it's a research assistant with a photographic memory and questionable judgment.

Remember when Jeff Bezos declared that every Amazon team had to expose its capabilities through standards-based APIs?

That mandate helped transform Amazon from an online bookstore into the company that built AWS.

The same concept needs to be applied to AI: start vending secure tools your employees can use with AI services and agents. Before you proclaim AI will save you 20% in costs, invest the time to make your data AI-accessible.

Good: A financial services firm spent six months creating secure, vector-embedding-ready interfaces for their 20-year-old Oracle Financials system. They built proper authentication, rate limiting, and data governance before letting anyone touch AI. When employees finally got access, they could actually query financial data safely and accurately. Within three months, the finance team automated 30% of their reporting. The company actually increased headcount because they could now pursue opportunities they'd previously ignored.

Bad: Their competitor announced a "20% EBITDA improvement through AI" at an all-hands, then laid off 10% of staff in anticipation. Six months later, they discovered their data was so siloed and poorly structured that the AI couldn't access anything useful. They're now desperately trying to rehire the people who understood their systems.

The new CTO calls it "counting your chickens before you've even built the coop."

3. Start small, fail fast, learn faster. Pick one annoying, repetitive task in your organization. Use AI to automate it. When it fails—and it will fail—figure out why. Iterate. Repeat. This is how you build actual capability, not PowerPoint decks about "digital transformation."

4. Focus on augmentation, not replacement. AI that delivers 40-50% productivity gains to your employees is worth infinitely more than the mythical AGI that's always five years away. Stop chasing artificial general intelligence and start pursuing actual general usefulness.

5. Make everyone a practitioner. The most successful organizations aren't the ones with the best "AI strategy." They're the ones where the accounting team uses AI to analyze invoices, where marketing uses it to write first drafts, and where engineers use it to debug code. When everyone's a practitioner, you don't need prophets.
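Point 2's "Good" example puts authentication, rate limiting, and data governance in front of a legacy finance system before anyone touches AI. That is where "vending secure tools" gets concrete, so here is a minimal Python sketch of what such a tool might look like. Treat it as an illustration under stated assumptions, not that firm's implementation. `LedgerTool`, `RateLimitExceeded`, the API keys, and the in-memory `LEDGER` are all hypothetical stand-ins for a real Oracle Financials connection. The token bucket is simply one common rate-limiting technique.

```python
import time
from dataclasses import dataclass, field

# Hypothetical stand-in for a legacy finance system (e.g. an Oracle Financials export).
# "approver_email" plays the role of a sensitive field that must never reach the AI.
LEDGER = [
    {"account": "4000-REV", "period": "2025-01", "amount": 125000.0, "approver_email": "cfo@example.com"},
    {"account": "5100-COGS", "period": "2025-01", "amount": -48000.0, "approver_email": "controller@example.com"},
    {"account": "4000-REV", "period": "2025-02", "amount": 131500.0, "approver_email": "cfo@example.com"},
]


class RateLimitExceeded(Exception):
    """Raised when a caller has used up its request budget."""


@dataclass
class TokenBucket:
    """Token bucket: holds up to `capacity` tokens, refilled at `refill_per_sec`."""
    capacity: int
    refill_per_sec: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = float(self.capacity)
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Add tokens for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class LedgerTool:
    """Hypothetical tool an AI agent could call to read ledger rows safely."""

    # Data governance: the only fields this tool will ever return.
    ALLOWED_FIELDS = {"account", "period", "amount"}

    def __init__(self, api_keys: set, capacity: int = 5, refill_per_sec: float = 1.0) -> None:
        self.api_keys = api_keys  # keys issued to approved callers (hypothetical)
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.buckets = {}  # one TokenBucket per API key

    def query(self, api_key: str, period: str) -> list:
        # 1. Authentication: reject unknown callers before doing any work.
        if api_key not in self.api_keys:
            raise PermissionError("unknown API key")
        # 2. Rate limiting: each key gets its own token bucket, created on first use.
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = self.buckets[api_key] = TokenBucket(self.capacity, self.refill_per_sec)
        if not bucket.allow():
            raise RateLimitExceeded("rate limit exceeded")
        # 3. Governance: select rows for the period, then drop every non-allowed field.
        return [
            {k: v for k, v in row.items() if k in self.ALLOWED_FIELDS}
            for row in LEDGER
            if row["period"] == period
        ]


if __name__ == "__main__":
    tool = LedgerTool(api_keys={"key-finance-0001"}, capacity=2, refill_per_sec=0.0)
    print(tool.query("key-finance-0001", "2025-01"))
```

The order of the checks is the point. The tool itself enforces who is asking, how often, and which fields they may see, so no prompt or agent can talk its way around them. A real deployment would likely add audit logging and a proper secrets store on top.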

The Bottom Line

Look, I get it. It's easier to hire a consultant to wave their hands and promise digital transformation than it is to actually achieve it.

It's more comfortable to worship at the Church of AGI than to admit you need to learn new skills.

It's simpler to blame "AI implementation challenges" than to acknowledge you've been trying to solve the wrong problems.

But here's the thing: we're at an inflection point. Only 1% of companies describe their gen AI rollouts as "mature", which means 99% of us are still figuring this out. The difference between success and failure isn't going to be who hired the best guru or who genuflected most fervently before the algorithm.

It's going to be who stops treating AI like a religion and starts treating it like what it is: a tool. A powerful tool, sure. A transformative tool, absolutely. But still just a tool.

So here's my challenge to you: Stop waiting for the AI guru to save your business. Stop believing that AGI will magically solve your problems. Stop treating technology like theology.

Instead, open Claude Code. Start a project. Build something. Break something. Learn something.

Because unlike Sri Kumaré's fictional wisdom, AI's benefits are real—but only for those who actually use it. The guru you're looking for isn't on LinkedIn. They won't be at the next conference. They're not in the C-suite.

As PwC's AI predictions note, your company's AI success will be as much about vision as it is about adoption.

The guru is you, sitting at your desk, actually using the tools, solving real problems, and building the future one prompt at a time.

One Last Story

Last month, I met a facilities manager at a hospital. Not a CTO, not a "transformation leader"—a facilities manager. She told me how she used ChatGPT to analyze six months of maintenance requests, identified that 40% were for the same three issues, and created a preventive maintenance schedule that reduced emergency calls by 60%.

She's saved her hospital $200,000 this year. She's never given a TED talk. She doesn't have a LinkedIn following. She just saw a problem, grabbed a tool, and fixed it.

That's what real AI implementation looks like. Not gurus. Not AGI. Not digital transformation initiatives. Just people, using tools to solve problems.

Meanwhile, as this facilities manager quietly saves lives and money, tech CEOs are making grand pronouncements about AGI arriving any day now, building data centers the size of small cities, and counting down "thousands of days" to superintelligence. The LinkedIn Vatican continues to genuflect before these digital deities while real practitioners get real work done.

The irony? Companies achieving 10x ROI from AI aren't waiting for AGI. They're not building GPU clusters the size of cities. They're using today's tools to solve today's problems. While the prophets promise tomorrow's miracles, the practitioners are performing today's magic—one prompt at a time.

Boy, am I sick of the question from leaders: “So Sid, do you think AGI is going to happen soon?”

Now, if you'll excuse me, I need to go debug why my AI assistant just tried to order 260 Chicken McNuggets. Again.

And continue coding my multi-agent service using Claude Code, Supabase, and Vercel.

P.S. - If you're a business leader reading this and your first instinct is to forward it to your "Head of AI Strategy" to "action," you've missed the entire point. Open ChatGPT or Claude yourself. Right now. Ask it to help you with something you're working on. That's it. That's the strategy.