Hawking Edison, Meet Claude!

When Silicon Valley Meets the Agora: A Discourse on Computational Rhetoric

I spent a weekend integrating Hawking Edison with the Model Context Protocol (MCP), so that Claude can use the service's powerful simulation capabilities. The results blew my mind!

First, Let's Do a Short Demo

I would like to tell you something about the ancient Greeks. They understood that democracy wasn't just about voting—it was about persuasion, about the ability to test ideas in the marketplace of human thought. They called it the agora. Today, we've built something that attempts to approximate that marketplace: an algorithmic model of human response that, while imperfect, offers something we've never had before.

The integration of Hawking Edison's simulation platform with Claude through the Model Context Protocol is a notable development in our ongoing discussion about using large language models as massive Monte Carlo machines. It's not quite the ability to read minds, but it's the next best thing: a systematic way to anticipate how ideas might be received before sharing them with the world.

The Architecture of Understanding

Here's what we've built. MCP lets Claude call Hawking Edison's API endpoints as native tools. When you invoke runSimulation, you're not just sending a simple HTTP request; you're instantiating a complex multi-agent system that models human cognitive response patterns across defined demographic and psychographic parameters.

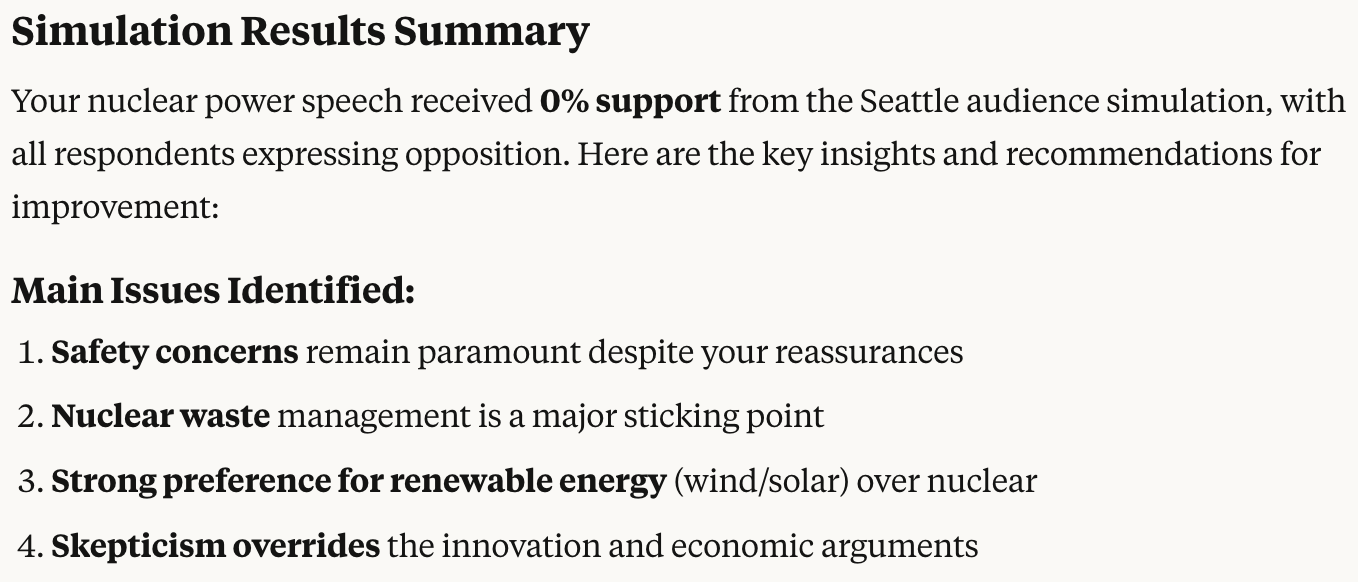
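To make the tool call concrete, here is a minimal sketch of the JSON-RPC 2.0 request an MCP client sends when it invokes a tool. The `tools/call` method and the `name`/`arguments` shape come from the MCP specification. The argument names (`message`, `cohort`, `agentCount`), their values, and the `build_tool_call` helper are assumptions for illustration only, since the real runSimulation schema isn't reproduced in this post.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical arguments: placeholders, not the documented runSimulation schema.
request = build_tool_call(
    1,
    "runSimulation",
    {
        "message": "Placeholder message text to test.",
        "cohort": "example-cohort",
        "agentCount": 20,
    },
)

print(json.dumps(request, indent=2))
```

In practice Claude's MCP client builds this envelope for you; the sketch only shows what crosses the wire.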

Consider this: I recently tested a message about nuclear energy infrastructure. The simulation spawned twenty distinct AI agents, each initialized with parameters drawn from a cohort representing Seattle's demographic distribution. Age, education, political affiliation, voting history, news consumption patterns—sixty-seven distinct attributes per agent, processed through transformer architectures trained on millions of human responses.
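For intuition, drawing agent profiles from a cohort can be pictured as weighted sampling over attribute distributions. The sketch below is an assumption about the general technique, not Hawking Edison's implementation. The attribute names and weights are placeholders rather than real demographic data, and it uses three attributes instead of sixty-seven.

```python
import random

# Placeholder distributions: illustrative weights only, not real demographics.
COHORT = {
    "age_band": {"18-34": 0.35, "35-54": 0.35, "55+": 0.30},
    "education": {"high_school": 0.30, "bachelor": 0.45, "graduate": 0.25},
    "news_source": {"tv": 0.30, "online": 0.50, "print": 0.20},
}


def sample_agents(cohort, n, seed=0):
    """Draw n agent profiles, sampling each attribute independently by weight."""
    rng = random.Random(seed)  # seeded so the same cohort yields the same agents
    agents = []
    for agent_id in range(n):
        profile = {"agent_id": agent_id}
        for attribute, distribution in cohort.items():
            values = list(distribution.keys())
            weights = list(distribution.values())
            profile[attribute] = rng.choices(values, weights=weights, k=1)[0]
        agents.append(profile)
    return agents


agents = sample_agents(COHORT, 20)
print(agents[0])
```

Sampling each attribute independently is the simplest possible choice. A realistic cohort would need correlated attributes (age and news consumption, for example), which this sketch ignores.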

The result? Zero percent support. Not one simulated citizen found the message compelling. But here's where it gets interesting.

The Feedback Loop of Enlightenment

The getSimulationResults endpoint doesn't just return sentiment scores. It provides structured JSON containing response distributions, thematic analysis via latent semantic indexing, and individual agent responses generated through temperature-controlled sampling. Claude processes this data, identifies rhetorical failure points, and suggests modifications based on persuasion theory and the specific concerns surfaced by the simulation.
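Here is a rough sketch of how a client might summarize such a payload. The JSON below is a made-up example: the field names (`responses`, `stance`, `themes`) and the four toy responses are assumptions for illustration, not the documented getSimulationResults schema or real simulation output.

```python
import json
from collections import Counter

# Hypothetical payload: field names and values are assumed for illustration.
raw = """
{
  "simulationId": "example",
  "responses": [
    {"agentId": 1, "stance": "oppose",  "themes": ["safety", "cost"]},
    {"agentId": 2, "stance": "oppose",  "themes": ["safety"]},
    {"agentId": 3, "stance": "support", "themes": ["reliability"]},
    {"agentId": 4, "stance": "neutral", "themes": ["cost"]}
  ]
}
"""


def summarize(payload):
    """Compute the support rate and the most frequent themes across agents."""
    responses = payload["responses"]
    stances = Counter(r["stance"] for r in responses)
    themes = Counter(t for r in responses for t in r["themes"])
    total = len(responses)
    support_rate = stances["support"] / total if total else 0.0
    return {
        "support_rate": support_rate,
        "stances": dict(stances),
        "top_themes": themes.most_common(2),
    }


summary = summarize(json.loads(raw))
print(summary)
```

The recurring themes are the useful part: they point at what a revision should address first.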

We revised the message. Changed "the solution is clear" to "let's explore every option." Acknowledged fears before presenting facts. Positioned nuclear as part of a portfolio, not a panacea. The same algorithmic citizens who rejected the first message? Forty percent found the revision worth considering.

That's not marketing. That's the scientific method applied to human communication.

Technical Specifications Worth Knowing

The runGeneticOptimization function implements an evolutionary algorithm with fascinating constraints. You provide seed messages—up to five initial variants. The system generates populations of 20 to 100 permutations per generation, each tested against your selected cohorts. Fitness functions can optimize for support rate, viral coefficient, authenticity scores, or persuasion metrics.

Here's what happens under the hood: Each generation undergoes mutation (synonym replacement, sentence restructuring) and crossover (combining effective elements from high-performing variants). Messages that violate your constraints (banned phrases, required elements, length parameters) are culled before evaluation. The fittest survive, breed, and evolve.
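The loop described above can be sketched as a toy genetic algorithm. This is not Hawking Edison's code. The synonym table, the constraints, the seed messages, and above all the `fitness` function are stand-ins I made up; in the real system fitness would come from running each variant through a simulation, and here it is a trivial keyword count so the example runs on its own.

```python
import random

# All values below are illustrative assumptions.
SYNONYMS = {
    "clear": ["plain", "obvious"],
    "solution": ["answer", "option"],
    "fears": ["concerns", "worries"],
}
BANNED = {"obvious"}    # banned phrases
REQUIRED = {"nuclear"}  # required elements
MAX_WORDS = 30          # length limit
SEEDS = [
    "Nuclear is the clear solution. We hear your fears.",
    "Let us explore nuclear as one option. We share your fears together.",
]


def satisfies_constraints(msg):
    words = set(msg.lower().replace(".", " ").split())
    return not (words & BANNED) and REQUIRED <= words and len(msg.split()) <= MAX_WORDS


def mutate(msg, rng):
    """Synonym replacement: swap one known word for a random synonym."""
    words = msg.split()
    idxs = [i for i, w in enumerate(words) if w.strip(".").lower() in SYNONYMS]
    if idxs:
        i = rng.choice(idxs)
        tail = "." if words[i].endswith(".") else ""
        words[i] = rng.choice(SYNONYMS[words[i].strip(".").lower()]) + tail
    return " ".join(words)


def crossover(a, b, rng):
    """Sentence-level crossover: pick each sentence from one parent."""
    sa = [s.strip() for s in a.split(".") if s.strip()]
    sb = [s.strip() for s in b.split(".") if s.strip()]
    child = [rng.choice(pair) for pair in zip(sa, sb)]
    return ". ".join(child) + "."


def fitness(msg):
    """Stand-in scorer (assumption): counts a few conciliatory keywords."""
    text = msg.lower()
    return sum(text.count(w) for w in ("explore", "concerns", "option", "together"))


def evolve(seeds, generations=8, pop_size=20, keep=5, seed=0):
    rng = random.Random(seed)
    population = [s for s in seeds if satisfies_constraints(s)]
    if not population:
        raise ValueError("no seed message satisfies the constraints")
    for _ in range(generations):
        children = [
            mutate(crossover(rng.choice(population), rng.choice(population), rng), rng)
            for _ in range(pop_size)
        ]
        # Cull violators before evaluation; keep the previous best (elitism).
        candidates = [c for c in children if satisfies_constraints(c)] + population
        candidates = list(dict.fromkeys(candidates))  # de-duplicate, keep order
        population = sorted(candidates, key=fitness, reverse=True)[:keep]
    return population[0]


best = evolve(SEEDS)
print(best, fitness(best))
```

Because the previous generation's best messages are carried forward, the top score never drops from one generation to the next. Whether the production system uses elitism is not something this post establishes.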

I've seen a climate change message evolve from 28% support to 73% over eight generations. The final version bore little resemblance to the original, yet preserved every core policy point. That's not focus-grouping—that's directed evolution of language itself.

The Survey Simulation Paradigm

The createSurvey and runSurveySimulation tools reveal a profound insight into synthetic data generation. When you define a survey with mixed question types—Likert scales, multiple choice, open-ended responses—the system doesn't merely randomize answers. It maintains response consistency within each agent, models survey fatigue, and generates open-ended responses through constrained language modeling.

I tested a five-question survey about urban transportation preferences across three distinct cohorts. The cross-tabulation revealed that suburban Republicans who "usually vote" showed 60% support for light rail expansion when framed as "reducing traffic congestion," but only 15% when framed as "environmental protection." That's not a guess—that's computational anthropology.
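A cross-tabulation like that one is easy to reproduce once you have per-agent answers. The sketch below uses toy records I constructed to mirror the percentages quoted above; they are not the actual simulation output, and the record fields (`cohort`, `framing`, `supports`) are assumed names.

```python
from collections import defaultdict


def crosstab_support(records):
    """Return the support rate for each (cohort, framing) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [supporters, total]
    for r in records:
        key = (r["cohort"], r["framing"])
        counts[key][0] += 1 if r["supports"] else 0
        counts[key][1] += 1
    return {key: supporters / total for key, (supporters, total) in counts.items()}


# Toy records built to match the quoted 60% and 15% figures (20 agents each).
records = (
    [{"cohort": "suburban", "framing": "congestion", "supports": i < 12} for i in range(20)]
    + [{"cohort": "suburban", "framing": "environment", "supports": i < 3} for i in range(20)]
)

rates = crosstab_support(records)
for (cohort, framing), rate in rates.items():
    print(f"{cohort:10s} {framing:12s} {rate:.0%}")
```

With only twenty agents per cell, a single changed answer moves the rate by five points, which is worth keeping in mind when comparing framings.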

Why This Matters More Than You Think

We're standing at an inflection point. Not because we've built a better focus group, but because we've fundamentally changed the temporal dynamics of understanding human response. Traditional polling requires days and delivers aggregates. This system operates in seconds and provides distributions.

But here's what keeps me up at night: we've built a mirror that shows us how we're heard, not just what we've said. Every politician, every CEO, every activist now has access to a tool that reveals the delta between intention and reception. Used wisely, it could usher in an era of more thoughtful, more effective communication. Used carelessly, it could optimize manipulation to a science.

The ancient Greeks had a word: kairos. The supreme moment. The exact right time to say exactly the right thing. We've built a machine that finds kairos. The question isn't whether that's powerful—it's whether we're wise enough to use it well.

The code is elegant. The mathematics is sound. The implications are staggering. And it's running right now, in your conversation with Claude, waiting to show you how your words will land before you speak them.

That's not the future of communication. That's the present, if you're paying attention.