The Great AI Token Heist

Why Developers Are Burning Money on the Wrong Models

Most developers are hemorrhaging thousands on premium AI tokens for tasks a local model could handle better

The data is damning. A 100-developer organization burns through $518,400 annually on AI tokens when it could achieve the same results for $25,920. That's $432 per developer per month, roughly 95% of it pure waste: an inefficiency rate that would make any CFO question their life choices. Yet here we are, watching developers feed Claude Opus and GPT-4 tokens into the furnace for tasks that require all the sophistication of a pocket calculator.

This isn't about being cheap. It's about being smart. The research reveals a fundamental misallocation of resources that's costing the industry billions while developers complain about AI limitations. They're using Formula 1 engines to drive to the corner store, then wondering why fuel costs are astronomical.

The conversation that started a revolution (or: How to tell your AI it's getting a junior developer)

Let me share a Sunday morning conversation that perfectly illustrates this madness. A developer—let's call him a "visionary with 96GB of RAM"—had an interesting chat with Claude Code. It went something like this:

Developer: "Would you benefit from having another LLM locally helping you generate code? Should you download and install a local LLM, wrap it with a utility, and have it generate code for this project?"

Claude Code (after some thoughtful analysis): "It could be beneficial for specific, well-defined tasks... The local LLM would be most valuable as a 'code generation assistant' for routine tasks rather than a decision-making partner."

Developer: "It would enable you to preserve token usage for complex, architectural tasks, and not having to downgrade to Sonnet. Or forcing me to re-login with an API key so you can keep using the Opus model."

Claude Code: "Ah, that's an excellent point! You're right - I currently spend tokens on writing boilerplate code, generating repetitive patterns, creating similar variations... A local LLM could handle these mechanical tasks, letting me focus tokens on architecture decisions, complex problem solving, security considerations..."

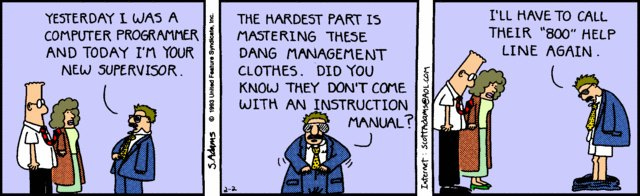

It's like watching a seasoned architect realize they don't need to personally measure every board. Claude Code essentially said, "You know what? I'd rather think about system design than write getter methods." The local LLM becomes the junior developer who handles the grunt work while the senior focuses on the problems that actually matter.

This conversation reveals something profound: even AI models understand the inefficiency of using premium intelligence for mundane tasks.

If Claude Code can grasp the economics of hierarchical AI workflows, why can't the humans paying the bills?

The $432 monthly waste that's bleeding companies dry

Let's start with the evidence. A typical CRUD operation requires about 800 output tokens. Using Claude Opus 4 at $75 per million output tokens, that's $0.060 per operation. The same task with GPT-4o mini costs $0.0006. That's a 100x price difference for output that, on a task this routine, is effectively identical.
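The per-operation arithmetic can be checked with a minimal sketch. The function name `cost_per_operation` is hypothetical, and the 800-token size, the $75 per million rate, and the $0.0006 comparison cost are the figures quoted in this article rather than values pulled from a live price list.

```python
def cost_per_operation(output_tokens: int, usd_per_million_tokens: float) -> float:
    """Return the dollar cost of generating `output_tokens` at a per-million rate."""
    return output_tokens * usd_per_million_tokens / 1_000_000


# Figures quoted in the article (assumed, not fetched from a pricing API).
CRUD_TOKENS = 800
OPUS_USD_PER_MILLION = 75.0
MINI_COST_QUOTED = 0.0006  # per-operation cost the article cites for GPT-4o mini

opus_cost = cost_per_operation(CRUD_TOKENS, OPUS_USD_PER_MILLION)
price_ratio = opus_cost / MINI_COST_QUOTED

print(f"Premium cost per CRUD operation: ${opus_cost:.3f}")
print(f"Price ratio vs. quoted budget cost: {price_ratio:.0f}x")
```

Swapping in current list prices for either model only changes the two constants; the calculation itself stays the same.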

Here's the math that should terrify every technical leader:

Typical 100-Developer Organization:

Average AI usage: 50,000 tokens per developer per month

Premium model cost (Claude Opus): $432.50 per developer monthly

Efficient model cost (local + selective cloud): $21.60 per developer monthly

Annual waste per developer: $4,932

Total organizational waste: $493,200 annually
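These totals follow from simple multiplication. The sketch below reproduces them under one assumption: that the monthly gap is rounded to the nearest dollar before being annualized, which is what the $4,932 figure implies. The function name `annual_waste` is hypothetical.

```python
def annual_waste(premium_monthly: float, efficient_monthly: float,
                 developers: int) -> tuple[int, int]:
    """Return (annual waste per developer, annual waste for the organization) in dollars."""
    # Assumption: round the monthly gap to whole dollars first, as the quoted totals imply.
    monthly_gap = round(premium_monthly - efficient_monthly)
    per_developer = monthly_gap * 12
    return per_developer, per_developer * developers


# Per-developer monthly costs quoted in the list above.
per_dev, org_total = annual_waste(432.50, 21.60, developers=100)

print(f"Annual waste per developer: ${per_dev:,}")
print(f"Total organizational waste: ${org_total:,}")
```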

A medium-sized development team running 50 CRUD operations daily through premium models spends $528.65 monthly, 98.5% of which is pure inefficiency. Scale that to enterprise levels, and we're talking about half-million-dollar mistakes that could fund entire additional development teams.

The tokenization inefficiency compounds the problem. Claude's tokenizer uses 30% more tokens than GPT's for Python code, creating a hidden tax on every operation.

Developers are paying a premium surcharge on top of already inflated prices.

Enter the local revolution: CodeLlama 70B changes everything

While developers burn money in the cloud, CodeLlama 70B sits ready to handle 85-90% of their daily coding tasks at zero marginal cost. The benchmarks are compelling:

HumanEval Score: 65.2% (competitive with GPT-3.5)

Boilerplate Generation: 35-50 tokens/second locally vs 25-35 for cloud GPT-4

Context Window: 100,000 tokens (far exceeding most cloud offerings)

Cost After Setup: $0 per token forever

The hardware investment—$3,000-8,000 for a capable setup—pays for itself in 3-6 months for active development teams.
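Whether the payback lands in that 3-6 month window depends on how much cloud spend the machine actually displaces. Here is a rough sketch, assuming the hardware is shared by a small team and using this article's per-developer savings figure. The function `payback_months` and the team size of three are illustrative assumptions, not numbers from the research.

```python
def payback_months(hardware_cost: float, monthly_savings: float) -> float:
    """Months until cumulative savings cover the upfront hardware cost."""
    if monthly_savings <= 0:
        raise ValueError("monthly_savings must be positive")
    return hardware_cost / monthly_savings


# Per-developer monthly savings from the article: $432.50 - $21.60.
SAVINGS_PER_DEV = 432.50 - 21.60
TEAM_SIZE = 3  # hypothetical: developers sharing one local machine

for hardware_cost in (3_000, 8_000):  # the article's quoted hardware range
    months = payback_months(hardware_cost, SAVINGS_PER_DEV * TEAM_SIZE)
    print(f"${hardware_cost:,} setup pays back in about {months:.1f} months")
```

Payback time scales inversely with the savings figure, so halving the team size doubles the wait. The sketch also ignores electricity and maintenance, which would lengthen it further.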

Yet developers cling to expensive cloud models like security blankets, convinced local models can't handle "real" work.

The hierarchical workflow that changes everything

The solution isn't abandoning cloud AI—it's using it intelligently. Hierarchical AI workflows leverage the right model for the right task:

Local Models (CodeLlama via Ollama) Handle:

CRUD operations

Boilerplate generation