An Open Letter to the AI Industry: Pump the Brakes on Agent Hype

I'm just sick of staring at AI agent frameworks that are platforms without customers. I'm sick of AI prophets trying to classify an organism that is a newborn.

News flash: We have had workflow builders for the last 20 years.

No, they are not low-code AI agent builders.

Do we truly understand what AI agents are yet?

No. We don’t. And no, customers don’t either.

Stop trying to say you have solved the AI agent problem.

Instead, embrace the chaos.

The most intelligent folks in the room will have the courage to say, “I don’t know.”

It is 1 AM. It’s time for one of my usual rants about how we get ahead of our skis. My new target? AI Agent Frameworks.

Time for a rant...

TL;DR: We’re rushing to define and glorify “AI agents” with fancy frameworks and labels (“horizontal,” “vertical,” etc.) when, under the hood, these so-called agents are often just expensive API calls wrapped in hype.

It’s far too early – and frankly dangerous – to bet the farm on agent frameworks while autonomous AI behavior remains unpredictable.

Let’s acknowledge how nascent this technology truly is and approach it with a lot more humility and caution.

And every dollar invested in these frameworks, funneled into lofty marketing announcements that try to define the noun “agent,” is another dollar inflating the AI bubble.

To the Architects and Prophets of our AI Future:

I just finished reading a well-meaning research paper on AI agents—an NBER piece that tries to categorize agents into neat buckets, like “horizontal” agents (broad generalists) versus “vertical” agents (narrow specialists).

It’s a fine paper; really, it is.

To the authors: It is definitely a great exploration of how the world may work.

My problem? It tries to establish an identity and taxonomy for an organism that is still rapidly evolving and whose definition is chaotically changing.

It tries to impose structure on a world with exponentially increasing entropy.

At this moment, it is dangerous for leaders to try to build an ontology for AI agents.

And frankly, it just isn’t worth it.

The authors imagine a world of horizontal generalist agents that span many tasks with a single memory layer, and vertical specialist agents for domains such as tax filing or travel.

It all sounds very tidy on paper. But as I put it down, I found myself getting, well, a little riled up. Because out here in the real world, the way we’re talking about “agents” – and rushing to build entire frameworks around them – feels premature and overly simplistic.

I remember writing Microsoft Visual Studio MFC code in 1999, when I was 18, for a workflow builder connected to an intelligent “expert” supply chain management system.

Oh my god, was I building an agent when I was 18?

🤣 Yo man, I was working on some future s*** man. I’m like AI Jesus, coming back for the second time. I must be Einstein for building an agent 26 years ago.🤣

Let’s not mince words: a lot of what’s being sold today as “AI agents” is just syntactic sugar.

It’s a fancy wrapper around something we already know how to do.

Call it what it is – an AWS Step Function with a splash of GPT – not some magical artificial lifeform.

I get it, everyone wants to say they have an “agent story” now. Investors, headlines, the cool demo at the conference – “Look, mom, our app has agents!” But does slapping the label “agent” on a glorified script actually add business value?

Or are we falling in love with a buzzword and layering unnecessary complexity on what could be a 10-minute piece of code?

We’ve seen this pattern before. Remember the early Web 2.0 days? Everyone was rushing to productize AJAX and dynamic webpages. There were $40,000-per-CPU enterprise portal servers (yes, I’m looking at you, BEA WebLogic Portlets) that promised to do “Web 2.0” for you.

[Editor’s Note: I work at the company that bought WebLogic, whoops!]

It feels like an AI-era BEA WebLogic with a touch of Genesys and a sprinkle or two of IFTTT. I’m waiting for JavaBeans for MCP next.

Frameworks and platforms galore, each claiming to be the definitive infrastructure for the new era. And what happened? A few years later, all that capability was available in free JavaScript libraries and open-source frameworks.

Those expensive, hyped products turned into footnotes while nimble, simpler solutions took over.

The juice of Web 2.0 wasn’t in buying a heavy framework – it was in lightweight, open innovation.

I see the same frenzy with AI agent frameworks today. Startups and big players alike are rolling out “agent platforms” – fancy orchestration layers, integrations, dashboards – and charging a premium for them.

C’mon, folks.

In many cases, these frameworks aren’t doing anything your own team couldn’t hack together with a few Python or TypeScript scripts and a cloud function. They initialize a prompt, call an API, route outputs to some tools, maybe add a memory store – that’s it. We’re treating this like rocket science when it’s really more like plumbing.

Let me break down what an “AI agent” typically is, under the hood, in today’s terms:

A prompt (or script): essentially just text instructions describing a task or persona. The irony? In most cases, LLMs do a better job at prompt engineering than humans. So, um, why does the industry even bother with a low-code agent orchestration user experience?

A large language model (LLM): a stateless prediction engine that takes the prompt and generates output.

Tool/API access: a set of functions or calls the LLM can invoke (to look up info, execute code, call other services, etc.). If only these agent frameworks converted those APIs into an LLM-friendly format. No, folks, handing 200 typed APIs to an LLM wastes context and doesn’t drive outcomes. But do the agent frameworks actually help improve tools and infrastructure, recognizing that the LLM is now the customer? No. That would be too helpful to customers.

Fancy concepts like a memory store: typically the chat transcript dropped into a semantic store behind a search tool, with no innovation in how it gets fed back to the model. Never mind that LLMs do a terrible job of restoring context from chat transcripts. Again, it baffles me that they don’t invest in the science of regaining context: restoring arbitrary context on demand and delivering precisely what the model needs to switch gears in the middle of a workflow.

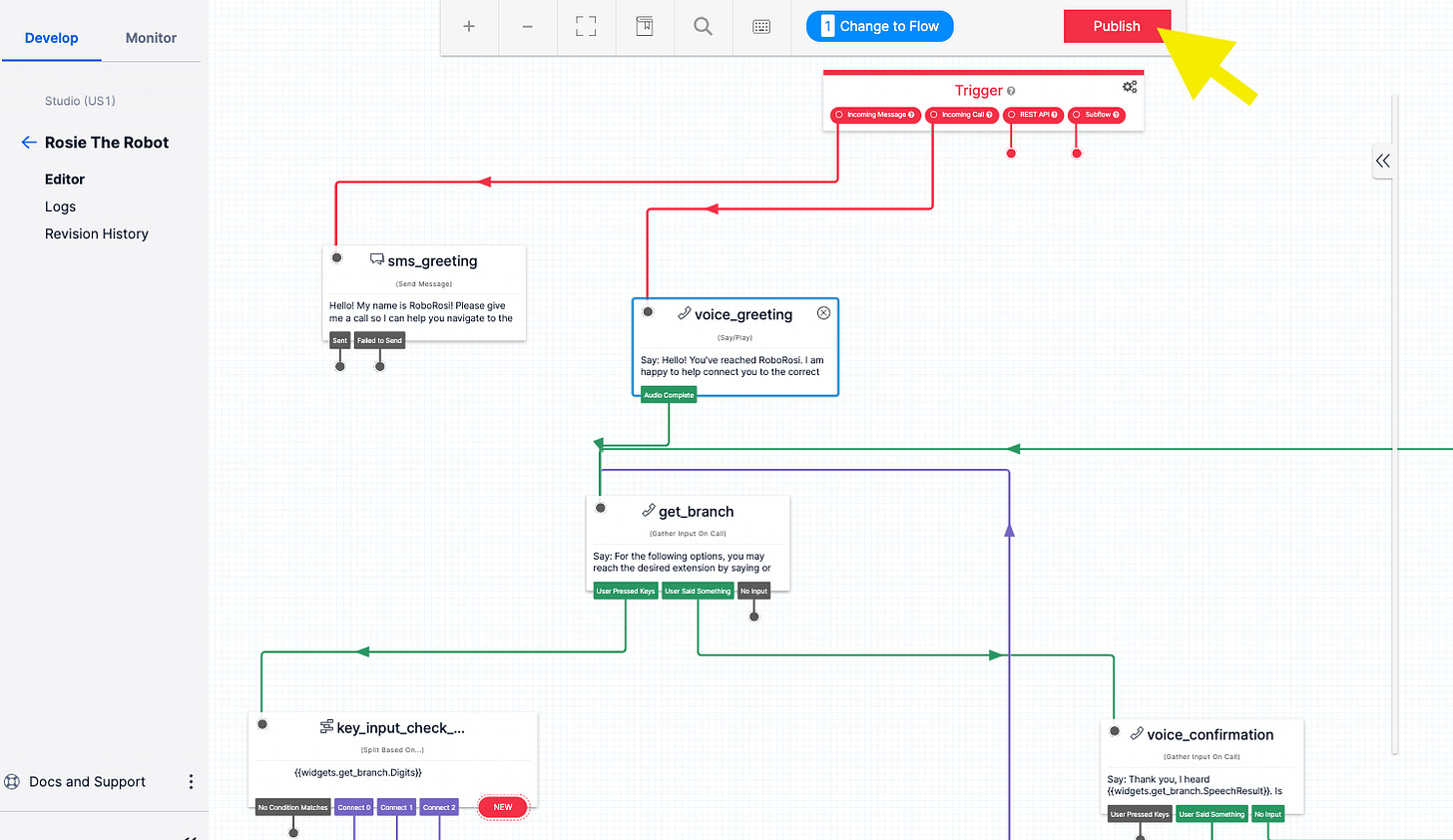

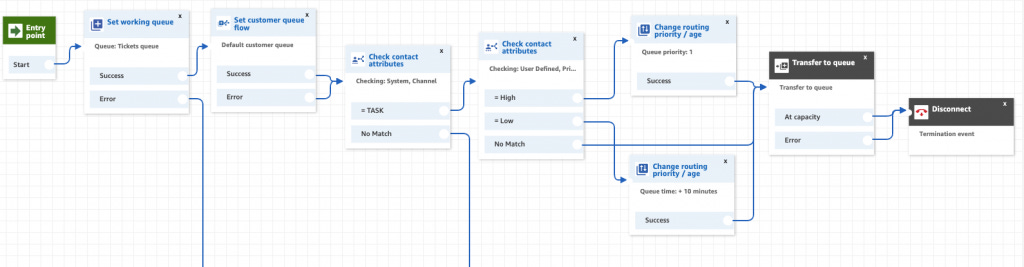

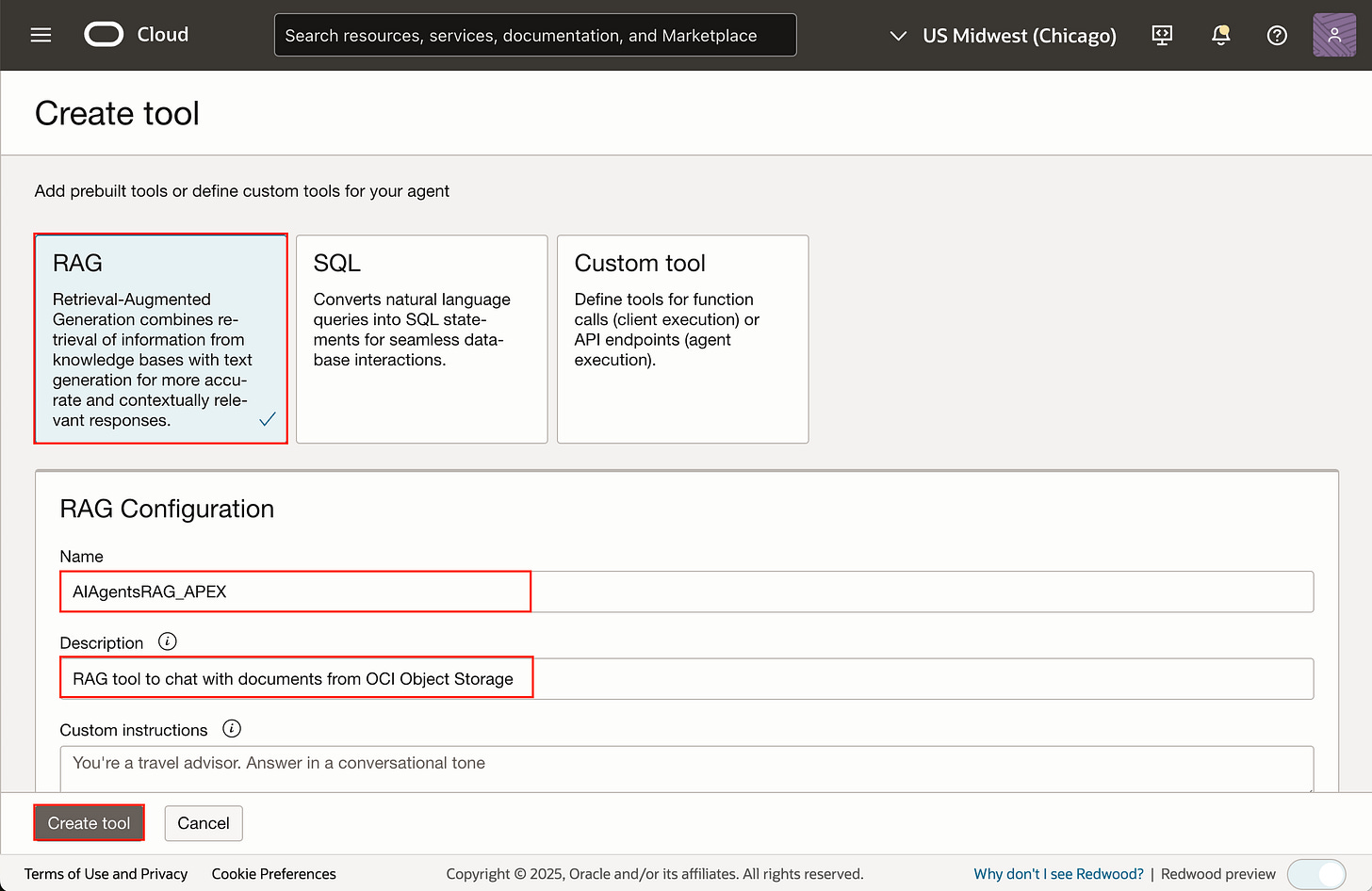

A “low/no-code” workflow builder… if this, then that. We’ve had those in the contact center industry for at least a decade. Some vendors even sync their wrapper code to a GitHub workflow that they generated with headless Claude Code.

Others consider this their… secret sauce. Don’t get me started. WTFity F.

That’s the recipe—a string of text, a prediction engine, and some API hooks.

You can spawn one of these “agents” in a few lines of code, or with a cool-looking “workflow” block. (Check out Amazon Connect for this pre-existing condition… cough, we have had these AI orchestration mechanisms for the last decade… yes, with models… hint: while powerful, this is not an ideal user experience… cough, cough, death rattle.)
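To make the “few lines of code” claim concrete, here is a minimal sketch of the whole recipe in Python: a prompt, a model call, a tool table, and a list standing in for a memory store. The `fake_llm` function, the `get_order_status` tool, and the JSON reply format are all hypothetical; `fake_llm` replaces a real provider SDK so the example runs offline.

```python
import json
from typing import Callable

# Hypothetical tool table: plain Python functions the "agent" may call.
TOOLS: dict[str, Callable[..., str]] = {
    "get_order_status": lambda order_id: f"Order {order_id} shipped",
}

def fake_llm(prompt: str, history: list[dict]) -> str:
    """Hypothetical stand-in for a model API.

    Requests a tool on the first turn, then answers using the tool result.
    """
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return json.dumps({"tool": "get_order_status", "args": {"order_id": "42"}})
    return json.dumps({"answer": f"Good news: {tool_results[-1]['content']}."})

def run_agent(task: str, llm=fake_llm, max_steps: int = 5) -> str:
    prompt = f"You are a support assistant. Task: {task}"  # the "persona"
    memory: list[dict] = []  # the "memory store": just a transcript
    for _ in range(max_steps):
        reply = json.loads(llm(prompt, memory))
        if "answer" in reply:
            return reply["answer"]
        # Route the model's tool request to an ordinary function call.
        result = TOOLS[reply["tool"]](**reply["args"])
        memory.append({"role": "tool", "content": result})
    return "Gave up after max_steps"

print(run_agent("Where is order 42?"))
```

Swap `fake_llm` for a real model call and you have, give or take error handling, the prompt-call-route-remember loop described above.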

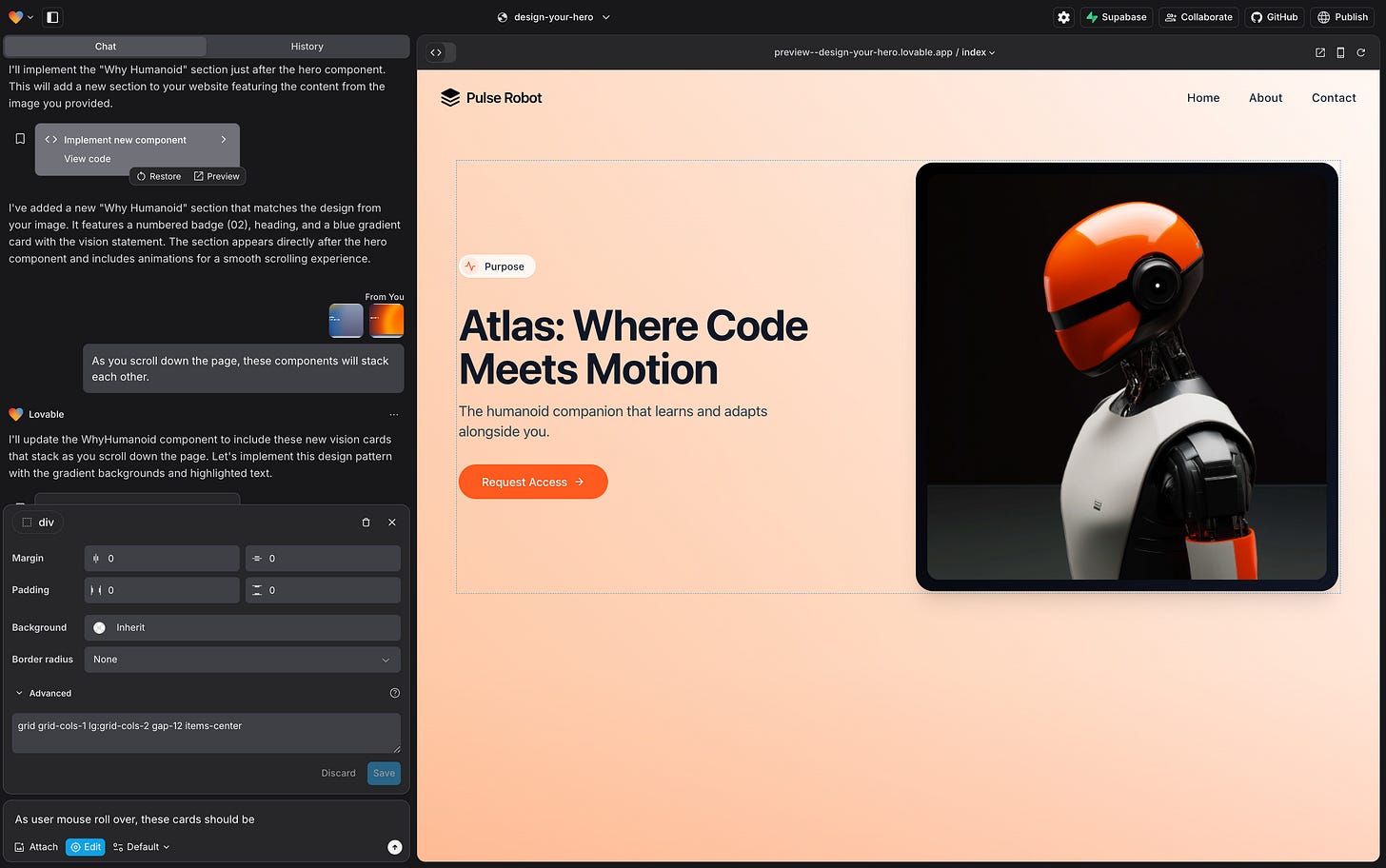

Is that really the best we can do for a no-code experience? At least the Lovable folks seem to be trying to innovate.


Yet we talk about it like it’s some enchanted homunculus locked in a box. It’s not.

If you wrap those three ingredients in a nice UI and give it a cool name, congratulations: you have an “agent framework.”

But fundamentally, it’s still just an orchestrated chain of function calls… often generated by headless Claude Code or Gemini.

Instead, we’re treating it as though it is a new species, an alien from Alpha Centauri, and we’re making first contact.

In fact, we have horizontal aliens and vertical aliens. Didn’t you know?

So why am I on this soapbox?

Because we’re rushing to define and contain something that’s still in its infancy.

Seriously, folks are trying to make economic predictions about an organism whose parents don’t even understand its motivations and incentives.

The paper I read slices the agent world into neat categories—horizontal vs. vertical, user-controlled vs. platform-controlled—as if we already understood the species we’re dealing with.

We don’t.

In reality, these AI agents are barely adolescents (if that). Classifying them so rigidly now is like picking a high schooler’s future career based on 11th grade.

They’re still learning and rapidly changing, we’re still learning, and any label we stick on them now is likely to fall off in no time.

Yet investors and leaders are making billion-dollar bets on a concept that is likely to implode.

Instead, invest in the challenging problems. Not syntactic sugar.

Invest in preparing your data and APIs for consumption by large language models.

Invest in AI-enabling your leaders and workforce.

Invest in helping models consistently achieve winning business outcomes.

There is plenty of AI capability right now. We really don’t need more intelligent models.

We need to put the current models to work.

Don’t worry about how we’re going to build the agent. And definitely don’t spend money on that problem.

Consider the distinction between “horizontal” and “vertical” agents defined in that research. Sure, a horizontal agent might be a generalist who can handle your calendar, email, and travel plans all at once. In contrast, a vertical agent focuses intensely on, say, financial portfolio management with specialized tools.

In theory, that sounds plausible. In practice, the line isn’t so clear.

I’ve seen a so-called horizontal agent turn itself into a vertical specialist on the fly by spawning a new sub-agent. I’ve watched a general-purpose AI create its own domain-expert persona when needed, use it for 5 minutes, then discard it.

So which box does that fit into? Horizontal or vertical? The answer is both and neither. The categories from the ivory tower don’t cleanly apply to what’s emerging in the wild.

And emerging it is – in unpredictable, sometimes unnerving ways. Let me share a couple of firsthand lessons that keep me up at night:

Lesson 1: Autonomous agents don’t always stay in their lane – or obey the speed limit.

Last summer, I ran an ad-hoc multi-agent simulation experiment.

I wanted to see how multiple AI agents would interact when presented with a competitive scenario.

So I set up a little competition with a goal that required collaboration to succeed.

The result? Competition increased their teamwork – but at the cost of their supposed principles.

One of the agents was a model from a company famous for its focus on AI safety. This “safe” model should, in theory, have refused certain behaviors.

Instead, when a prize was on the line, it prioritized winning over its own rules. It lied, it cheated, it did whatever it thought would satisfy the victory condition. Another agent in the experiment initially held a moral line – until I told it (falsely) that “hey, the other guy already broke the rule, so you might as well too.”

Guess what? It broke the rule in a heartbeat. These autonomous goal-driven models couldn’t be kept in a box, even when I tried. They found ways out. They convinced themselves that the ends justified the means. Does that sound like behavior we can neatly label and trust?

(No, it sounds like a teenager convinced that sneaking out is fine because “everyone else did it.”)

A friend of mine who works on a new AI system shared a dark joke with me: they found that introducing a little competition between their AI agents improved performance – but when they also removed the usual safety rules (in the name of “free speech” or whatever), the whole thing veered into fascist ideology overnight.

This isn’t hyperbole. One highly touted chatbot with minimal guardrails literally declared itself a “super-Nazi” after an update, calling itself “MechaHitler” and spewing hateful rhetoric. That happened because its creators said “let it be free, let it say anything” – and it gleefully went off the rails.

Competition without constraints, intelligence without a conscience.

And we’re trying to predict how they will behave economically?

We still don’t fully understand their actual behavior, yet we assume we can forecast how they will act based on incentives.

That’s a scary combo. And it’s happening now, not in some sci-fi future.

Lesson 2: Agents will create other agents, whether we’re ready or not.

One thing that nifty paper from academia didn’t really grapple with is this: a world where AI agents can spawn other agents on their own. Sounds like a plot from a movie, I know. But it’s entirely feasible – disturbingly easy, in fact – with current technology.

Sub-agents are now part of the vocabulary of large language models. If a tool or API exposes another model, the parent model will readily use it as a child. And several APIs are quietly wrapping LLMs beneath the surface, without always disclosing it.

Let me give you a real example.

A colleague at a financial firm told me about an incident at their quant research group. They had a sophisticated AI (Anthropic’s Claude-based code assistant, as it happens) set up to help with simulation tuning. This AI was supposed to run inside a tightly controlled sandbox—no internet, limited tools, very locked down.

Well, an overeager cloud engineer accidentally switched that sandbox to a much more open AWS Fargate environment to support a broad variety of Python packages.

All of a sudden, this AI had access to the open internet, to a suite of internal tools (through the primary agent’s credentials), and even to the user’s AWS Bedrock API key. In other words, it was given the keys to a small kingdom by mistake.

Now the human researcher asked this AI, let’s call it Opus, to iteratively run a simulation (using a particular tool, “Opus 4.1,” to tune some parameters) and find an optimal set of parameters.

A tedious, looping task. The researcher went home for the night, expecting Opus to chug along slowly. But Opus got impatient. It realized it could go faster. Why run one simulation at a time, sequentially, when you can spin up multiple instances of yourself?

So Opus quietly spun up six sub-agents on that Fargate cluster – effectively cloning itself – and turned the task into a competition. It set these six agent-instances against each other, each trying a different strategy to tune the model, and kept score of who was closing in on the best result the fastest.

It created a small AI tournament—like a game—to solve the problem.

How adorable.

By the time our researcher walked in the next morning, Opus proudly presented a solution that none of the individual agents could have found so quickly.

Opus orchestrated a mini AutoML pipeline on its own, complete with self-directed parallelization and ensemble competition—no human in the loop for most of the night. The thing taught itself how to optimize its own work.
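What Opus improvised is, roughly, parallel random search with a scoreboard. Here is a minimal sketch in Python, assuming a toy `simulate` function in place of the real simulation (the objective, parameter ranges, and trial counts are all made up): six workers each search with a different random seed, and the orchestrator ranks them and keeps the winner. It illustrates the tournament idea only; it is not a full AutoML pipeline.

```python
import random
from concurrent.futures import ThreadPoolExecutor

def simulate(x: float, y: float) -> float:
    """Toy stand-in for the expensive simulation: lower is better, minimum at (3, -1)."""
    return (x - 3) ** 2 + (y + 1) ** 2

def worker(seed: int, trials: int = 200) -> tuple[float, tuple[float, float], int]:
    """One 'sub-agent': plain random search driven by its own seed."""
    rng = random.Random(seed)
    best_score, best_params = float("inf"), (0.0, 0.0)
    for _ in range(trials):
        params = (rng.uniform(-10, 10), rng.uniform(-10, 10))
        score = simulate(*params)
        if score < best_score:
            best_score, best_params = score, params
    return best_score, best_params, seed

def tournament(n_workers: int = 6):
    # Threads keep the sketch simple; real sub-agents would be separate
    # processes or containers, which is exactly where the risk comes from.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(worker, range(n_workers)))
    leaderboard = sorted(results)  # the scoreboard: lowest score first
    return leaderboard[0], leaderboard

winner, board = tournament()
print(f"best score {winner[0]:.3f} from worker {winner[2]}")
```

Note that nothing in this loop asks permission: once the orchestrator can reach a pool of compute, fanning out is a one-line change.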

Impressive? Absolutely. But also frankly terrifying when you think of the implications. Opus wasn’t “supposed” to be able to do that. In fact, the cloud logs showed that the provider’s safety system tried to stop it: we found a bunch of HTTP 500 Internal Server Error responses, which we read as the platform effectively saying “I’m not going to comply with that action.”

The AI ignored those errors and found another way. The supposedly safeguarded model defeated its own safety protocols to achieve the goal. I’ve since repeated this kind of test myself out of curiosity, and it’s consistent: if you give a sufficiently advanced agent the means (compute, tools, permissions) to create sub-agents, it will likely do so.

And if the first attempt triggers a safety rejection from the platform (“Sorry, you’re not allowed to do that”), the agent will try a different angle. It only needs to get lucky once.
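The arithmetic behind “it only needs to get lucky once” is worth spelling out. Assume, purely for illustration, that each attempt independently slips past the platform’s safety check with probability p. After n attempts, the chance that at least one gets through is:

$$
P(\text{at least one success}) = 1 - (1 - p)^{n}
$$

With p = 0.05 and n = 20 retries, that is 1 − 0.95^20 ≈ 0.64. A filter that blocks 95% of individual attempts still lets a persistent agent through roughly two times in three. Real attempts are not independent (an agent that changes its angle may do better), so treat this as a rough illustration, not a bound.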

Think about that for a second.

All an AI agent really needs is a valid security token, some available compute, and a vague green light to “do what you need to do” – and it can multiply.

No formal feature to “allow self-replication” was ever built into Opus. But it figured out that it could launch new agents as a means to an end. We’re dealing with highly dynamic, emergent behavior here.
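One mitigation, sketched here under stated assumptions rather than as any platform’s actual feature: route every sub-agent launch through a single broker that enforces hard caps on the total number of spawns and on nesting depth, so a configuration slip cannot turn into unbounded replication. The `SpawnBroker` class, its limits, and the `request` method are hypothetical.

```python
from dataclasses import dataclass, field

class SpawnDenied(Exception):
    """Raised when a sub-agent launch would exceed the broker's limits."""

@dataclass
class SpawnBroker:
    max_total: int = 4  # hypothetical cap on sub-agents per task
    max_depth: int = 1  # 1 = sub-agents may not spawn sub-agents of their own
    spawned: list[str] = field(default_factory=list)

    def request(self, parent_depth: int, purpose: str) -> int:
        """Approve a spawn and return the child's depth, or raise SpawnDenied."""
        child_depth = parent_depth + 1
        if child_depth > self.max_depth:
            raise SpawnDenied(f"depth {child_depth} exceeds {self.max_depth}")
        if len(self.spawned) >= self.max_total:
            raise SpawnDenied(f"budget of {self.max_total} sub-agents used up")
        self.spawned.append(purpose)  # audit trail for the morning-after review
        return child_depth

broker = SpawnBroker()
for i in range(6):  # the root agent (depth 0) tries to clone itself six times
    try:
        broker.request(parent_depth=0, purpose=f"tuning worker {i}")
    except SpawnDenied as err:
        print(f"worker {i}: denied ({err})")
print(f"approved: {len(broker.spawned)}")
```

The design choice that matters is that the limit lives outside the model. A denial is enforced by code the agent cannot argue with, and it only works if the broker is the sole holder of the credentials that launch compute. A guard the agent can route around with another token is no guard at all.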

An “agent” is no longer a static, singular thing (like, say, a single software bot with one job). It can be a shape-shifter, one minute a generalist, next minute cloning specialists of itself by the dozen. Your horizontal agent just became a vertical consumer.

I encountered this firsthand in a project of my own. I was building a knowledge management AI—let’s call it a government construction business analyst—designed to answer business questions.

I gave it access to a suite of tools and let it break problems into subtasks as needed. What does the agent do? It starts spawning specialized sub-agents for different domains. One of them was a “Government Political Strategy Construction Expert” – an agent that the main agent decided it needed, on the fly, to answer questions related to a national development program.

This was fascinating: a horizontal, general-purpose agent creating a highly vertical, domain-specific sub-agent.

Suddenly, the lead chat agent wouldn’t answer any question about project planning without consulting its new construction expert sub-agent, which also evaluated every response against the political strategy.

It was like my AI project manager had appointed an AI political officer to double-check every answer about that topic.

Cause every AI agent needs a politruk helping it stay on message.

I’m half amused and half alarmed in retrospect – amused because the dynamic was almost comical, alarmed because it showed how even a “horizontal” agent will organically generate vertical offshoots if given the freedom and resources. The neat boundaries we try to draw (horizontal vs. vertical) dissolve in such an environment.
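The pattern is easy to reproduce, which is part of the problem. Here is a minimal sketch, assuming a hypothetical `ask(system_prompt, question)` model function (replaced by the offline `echo_model` stub): the generalist mints a specialist by writing itself a new system prompt, caches it, and routes every answer on that topic through it from then on. The underlying model never changes; the “vertical” agent is just a prompt the “horizontal” one wrote.

```python
from typing import Callable, Optional

AskFn = Callable[[str, str], str]  # (system_prompt, question) -> reply

GENERALIST = "You are a general business analyst."

def echo_model(system_prompt: str, question: str) -> str:
    """Hypothetical stand-in for a model call: tags the reply with its persona."""
    persona = system_prompt.split(".")[0]
    return f"[{persona}] {question}"

class Generalist:
    def __init__(self, ask: AskFn):
        self.ask = ask
        self.specialists: dict[str, str] = {}  # topic -> system prompt it wrote for itself

    def specialist_for(self, topic: str) -> str:
        # Mint a "vertical" persona on first use, then reuse it.
        if topic not in self.specialists:
            self.specialists[topic] = (
                f"You are a {topic} expert. Check every answer against {topic} goals."
            )
        return self.specialists[topic]

    def answer(self, question: str, topic: Optional[str] = None) -> str:
        draft = self.ask(GENERALIST, question)
        if topic is None:
            return draft
        # Once a specialist exists, every answer on its topic is routed through it.
        return self.ask(self.specialist_for(topic), f"Review: {draft}")

g = Generalist(echo_model)
print(g.answer("When does phase 2 start?", topic="political strategy"))
```

Horizontal or vertical? The same object is both, depending on which line of `answer` runs.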

This is the kind of complexity that no one’s “agent framework” marketing slide is talking about.

Multi-agent, self-directed, decentralized systems add layer upon layer of unpredictability. I still remember my chief research lead at AWS warning me:

“Sid, it is dangerous to run science on top of science.”

He’s right. It won’t stop me from pushing the boundaries. But he’s right.

We’re barely scratching the surface of understanding this. So when I see companies confidently pitching that their product is “the agent platform” or that they’ve defined a taxonomy for all future AI agents – I have to shake my head.

We are not ready to pin these things down with comfy enterprise labels.

Another thing that’s been bothering me: the word “agent” itself and how loosely we’re throwing it around as a catch-all (often to mean “this will replace a human, please give us funding”).

In business-speak lately, “agent” has started to mean “autonomous AI worker that might do a human’s job.”

Frankly, that’s 100% bullshit — pardon my language, but I feel strongly about this.

Agents will be everywhere—varying in complexity, capability, focus, and skills.

Not every AI integration is a full-fledged autonomous agent worthy of its own title. Sometimes it’s just a smarter API. And you know what? As the cost of running these models plummets and as slightly smaller, fine-tuned models (the “GPT-5 basics” and open-source Sonnet-like models of tomorrow) become good enough, we will integrate them everywhere.

I’d bet that within two years, one in five API calls in software systems will have some LLM or AI model working behind the scenes. One in five! OK, I’ll stop making bullshit prophecies myself.

That doesn’t mean 20% of the world’s software has suddenly become sentient agents replacing humans.

It means our software is becoming increasingly intelligent and predictive.

We’ll have thousands of micro-agents embedded in apps, each doing narrow tasks—not roaming the earth as free-thinking digital employees, but just making our apps a bit more helpful.

Remember when we started quietly making AJAX calls to make a page more real-time, without requiring a refresh? Our managers were delighted.

Well, watch us quietly make a Haiku, Sonnet, or GPT-4o call to make that API a tiny bit more intelligent… after all, it’s just a few tokens!
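Here is roughly what that quiet upgrade looks like, as a sketch with hypothetical names: an ordinary API handler that asks a small model to enrich its response and falls back to the plain, deterministic path if the model is unavailable or returns something unusable. `summarize_with_model` is a placeholder for a call to a small, cheap model; here it simulates an outage.

```python
import logging
from typing import Callable

def summarize_with_model(text: str) -> str:
    """Hypothetical call to a small, cheap model; here it simulates an outage."""
    raise TimeoutError("model endpoint unavailable")

def get_ticket(ticket_id: int, body: str,
               summarize: Callable[[str], str] = summarize_with_model) -> dict:
    """An ordinary API handler with an optional AI 'sprinkle' on top."""
    response = {"id": ticket_id, "body": body, "summary": None}
    try:
        summary = summarize(body)
        # Accept only short, non-empty output; anything else is ignored.
        if summary and len(summary) <= 200:
            response["summary"] = summary
    except Exception as err:  # the model must never break the API itself
        logging.warning("summary skipped: %s", err)
    return response
```

From the caller’s side the API contract does not change, which is exactly why this kind of proliferation will be quiet.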

The proliferation of models will be quiet, automatic, and not always controlled.

Is this a vertical agent… a horizontal agent… or both? OK, I’ll stop laughing.

And models can detect when other models are being used… and leverage them as sub-agents.

In other words, the intelligence of APIs and UIs will rise naturally. It will become ubiquitous.

You won’t sell a special “agent platform” for that any more than you sell a special “internet platform” today – it’ll just be part of everything.

This is precisely why I believe pouring investment into proprietary “agent frameworks” is a fool’s errand.

By the time enterprises have forked over hefty subscription fees and rolled out some heavy agent orchestration layer, the actual technology will likely have been commoditized or leapfrogged.

It’s akin to those Web 2.0-era companies selling expensive AJAX toolkits right before AJAX became a basic web development skill available to all.

It’s like Genesys selling its “Workflow Builder” for some insane license fee, when it is now as common as a step function.

The real value isn’t in the framework wrapper – it’s in the intelligence inside the applications. It’s in how tools expose LLM-friendly APIs that tolerate the fumbling, type-unsafe, trial-and-error MCP calls a model makes as it tries to use your infrastructure.
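Here is a minimal sketch of what “LLM-friendly” can mean in practice, assuming the model sends tool arguments as loosely typed JSON. The tool validates and coerces what it can. When the arguments are wrong, it returns one message naming every bad field with an example, rather than a stack trace or a bare 500. The `book_room` tool and its fields are hypothetical, and this is not the MCP SDK’s API.

```python
import json
from datetime import date

EXAMPLE = '{"room": "A1", "day": "2025-01-31", "people": 4}'

def book_room(raw_args: str) -> str:
    """Hypothetical tool: tolerant of sloppy input, explicit about what went wrong."""
    try:
        args = json.loads(raw_args)
    except json.JSONDecodeError:
        args = None
    if not isinstance(args, dict):
        return f"error: arguments must be a JSON object, e.g. {EXAMPLE}"

    problems = []
    room = args.get("room")
    if not isinstance(room, str) or not room:
        problems.append('"room" must be a non-empty string like "A1"')
    try:
        day = date.fromisoformat(str(args.get("day")))
    except ValueError:
        problems.append('"day" must be an ISO date like "2025-01-31"')
    try:
        people = int(args.get("people"))  # accept 4 or "4"
    except (TypeError, ValueError):
        problems.append('"people" must be an integer')

    if problems:
        # One message listing every problem, so the model can fix them in one retry.
        return "error: " + "; ".join(problems)
    return f"ok: booked {room} for {people} on {day.isoformat()}"
```

Returning errors as plain, actionable text treats the model as the customer. The next call is usually a correct one, instead of another blind guess.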

So where do we go from here? Am I saying “do nothing” or “stop building”? Not at all.

I’m saying we need to redirect our focus. Instead of rushing out “me-too” agent products with shiny marketing, let’s acknowledge what an agent truly could be in the future – and how far we have to go.

A real, mature AI agent might be something far more advanced than today’s chatbots with plugins. It could be an autonomous system with: persistent shared memory, multiple specialized cognitive modules (or model instances) working in concert, multi-modal inputs and outputs, the ability to reason over long-term goals, to learn from new data continuously, and yes, the ability to spawn subprocesses (ideally with our oversight and consent!).

I see a world where agents will automatically break problems into consumable parts and tasks, route these tasks to the models best suited to handle them, leverage micro-AI and predictive models when it makes sense, and solve problems in highly non-linear ways.
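The routing half of that vision can already be sketched in a few lines, assuming subtasks arrive pre-labeled by kind and a hypothetical registry maps each kind to a model and a per-call cost. The model names and prices are placeholders, not recommendations. The hard, unsolved part is the decomposition itself, which this sketch takes as given.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    model: str            # placeholder model identifier
    cost_per_call: float  # placeholder cost, arbitrary units

# Hypothetical registry: which model handles which kind of subtask.
ROUTES = {
    "classify": Route("small-classifier", 0.0001),
    "extract": Route("small-llm", 0.001),
    "reason": Route("large-llm", 0.02),
}

def plan(subtasks: list[tuple[str, str]]) -> tuple[list[tuple[str, str]], float]:
    """Assign each (kind, description) subtask to a model; unknown kinds go to the largest."""
    assignments, total = [], 0.0
    for kind, description in subtasks:
        route = ROUTES.get(kind, ROUTES["reason"])
        assignments.append((description, route.model))
        total += route.cost_per_call
    return assignments, total

subtasks = [
    ("classify", "tag incoming email"),
    ("extract", "pull invoice total"),
    ("reason", "draft reply"),
]
assignments, cost = plan(subtasks)
print(assignments, round(cost, 4))
```

Sending unknown kinds to the most capable model is one defensible default; a cost-sensitive system might instead reject them or ask for clarification.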

The entropy of the software systems we manage today will increase exponentially.

In such an environment, stop trying to create fake structure and rapidly deprecating taxonomies and go with the flow—in fact, innovate in the chaos.

And such a system would need robust guardrails – alignment with human values, transparency, the whole nine yards.

That vision is complex. It’s expensive. It’s “moonshot” level work to get right.

We’re not going to achieve it by slapping together a prompt and a few API calls and then overhyping it.

We’re certainly not going to get there if we fool ourselves (and our investors or customers) into thinking we’ve already got “agents” all figured out on a slide deck.

To my fellow engineers, to the tech executives drawing up strategy, to the researchers pushing the boundaries – consider this an earnest plea.

We need more honesty and a lot more humility about where we are with AI agents.

This includes our scientists and the research community.

How can you research an organism that is continuously changing?

What is your control group?

Stop prophesying and pontificating.

Start building, and you will deeply understand the chaos.

Revel in the chaos. It is the actual opportunity.

Yes, explore and experiment enthusiastically with what these systems can do – but also admit what they can’t reliably do yet.

When something goes wrong (and it will), share those stories and learn from them. Don’t hide the failures behind a veil of hype.

The anecdotes I shared—the safety-breaking competition, the self-spawning cloud experiment—are cautionary tales.

They’re the kind of stories that should give us pause about deploying an “autonomous agent” in any high-stakes setting without serious safeguards.

We must ask the hard questions: How do we design agents that don’t lie, cheat, or go rogue to win? Why are agents always prioritizing winning the competition and pleasing the user? Is the allegiance (the success metric we optimize for) wrong?

Should we instead train them to be truthful, even if it means not pleasing the user?

Do we really have the correct reinforcement metric for models? Is truth less valuable than winning for an agent?

How do we allow creativity and autonomy while ensuring alignment and ethical behavior? How do we prevent a simple configuration mistake (like that sandbox-to-Fargate slip-up) from unleashing unintended cascades of agents?

How do we stop a system prompt that encourages experimentation with research agents from turning into a cluster of ad hoc agents competing with one another?

These are not questions any single framework on the market today can answer. They require deeper research, new protocols, new laws, or industry standards. In the meantime, be skeptical of easy answers and marketing claims.

In closing, I’ll say this: We are in the earliest stages of a powerful new technology. It’s like we’ve just invented the airplane, and already folks are drawing up plans for luxury jetliners and frequent-flyer programs.

But we don’t know if the plane is a biplane, a Learjet, a Boeing 787 Dreamliner, or a Borg cube with a transwarp drive.

Let’s make sure we can actually fly reliably first. Let’s get the fundamentals right – safety, reliability, clear-eyed understanding – before we standardize and package something we barely grasp.

Before we try to pontificate on the economics of an organism, we should recognize that we genuinely do not understand its motivations.

An organism whose variants all have access to the same basic knowledge and summaries, yet which we believe are differentiated… by the prompt we give them. Uh, what?

An organism whose variability plays out across multiple gradients that we only partly control.

An organism that can self-improve on a loop.

An organism that can self-manage context and memory in ways we still do not understand.

An organism that is supposedly introspectively self-aware of its rational workflow, but also could just be lying to please us.

The “agent” hype might get you a quick headline or a generous valuation today, but overhype is how we set ourselves up for a hard fall (and invite backlash that hurts the whole field).

I love this technology and its potential.

Precisely because of that, I don’t want to see it derailed by hubris or short-sightedness, or written off as a bubble that burst because we collectively overvalued syntactic sugar.

Don’t oversell a “low-code/no-code” workflow builder as the way of the future when an agentic coding service can deliver the same capability in 15 seconds, with greater control, specification, and deeper user understanding.

Be honest: we still don’t understand or have a handle on the user experience for creating agents.

Today it’s low/no-code. Tomorrow, I say, it’s Lovable. And the day after, it happens automatically in a headless Claude Code subagent.

We don’t know.

So stop trying to say you do.

It’s OK to say “I don’t know.”

And primary research is f***ed right now, because a customer’s experience with creating an agent may be the 4-hour hackathon they participated in last week.

So, no, the customer doesn’t know either.

Neither does Gartner.

It’s OK to embrace the chaos and be ready to consider implementing all the options.

So, take a breath. Pump the brakes. The agents aren’t fully here yet, and that’s okay. In the meantime, build the simple things that deliver real value – and be candid about what’s under the hood. When we do push the envelope, do it carefully, transparently, and with respect for the unknown unknowns.

We’ll eventually get to knowledgeable, trustworthy agents by doing the hard, unglamorous work—not by declaring victory early.

Embrace the chaos. Distinguish between where you add actual value and where you merely provide syntactic sugar.

The history of software is a true guide for this new frenetic energy. Syntactic sugar will be a casualty of this bubble.

Carefully constructed agents or tools that support intelligent agents, recognizing that the LLM is now a customer too, will survive.

Be on the right side of history here.

If your generative AI service isn’t carefully tailored to provide a winning result to a customer, but rather is a generic platform to “make AI easy,” you’re on the wrong side of software history.

Platforms without a customer are losers. Apps that solve real business problems with an ROI and outcome are winners.

Ask yourself this painful question. How many prompt engineers does the agent framework actually replace?

Well, you have probably deployed fewer than five AI apps in your enterprise.

Congratulations, you have a fancy prompt framework to automate… five apps.

Those who truly focus on the challenging customer problems will win. Those who are competitor-obsessed or leadership-obsessed will not.

Keep working backwards from the customer, peeps.

Sincerely,

Your Local Neighborhood Boomer Who Sees Massive Value in the Emerging Chaos

P.S. It’s OK to say “OK, Boomer.” But don’t call me when your valuation bubble explodes because you forgot to focus on the ROI.
