Claude Code: The GarageBand Revolution for Software Development Is Coming

The great code revolution isn't coming—it's already here, and you're late to the party

I promised to write a Substack on my experiences with Claude Code.

If you haven’t been following along, I have been building a service to help create better messaging (it is poorly named after the middle names of my two dogs, Hawking Edison, link to demo).

I have had a chance to internalize Claude Code, and I hope you enjoy my conclusion.

Listen up. I need you to understand something, and I need you to understand it right now.

The era of the software engineer as a solitary code craftsman, hunched over a keyboard, translating business logic into elegant algorithms through sheer force of will and caffeine—that era is over. Dead. Kaput. And if you're still clinging to that romantic notion, you're about as relevant as a COBOL programmer at a React conference.

But here's the thing, and this is where it gets interesting: the death of that era doesn't mean the death of the software engineer. Far from it. What we're witnessing is nothing less than the most profound transformation of the engineering discipline since Grace Hopper's team pulled that moth out of the Mark II.

We're not just changing tools; we're changing the fundamental nature of what it means to be an engineer.

The GarageBand revolution: A preview of what's coming

I would like to tell you a story about disruption. It's 2004, and Apple releases GarageBand. Suddenly, every teenager with a Mac can produce music that would have required a $100,000 studio just five years earlier. The music industry scoffs. "Real musicians use Pro Tools," they say. "You need years of training to produce quality music," they insist. "This is just a toy," they declare.

Fast forward to today. GarageBand has been installed on over 1 billion devices worldwide. Billie Eilish recorded her Grammy-winning album "When We All Fall Asleep, Where Do We Go?" in her brother's bedroom using Logic Pro, GarageBand's big sibling. Lil Nas X built "Old Town Road," which set the record for the longest run at number one on the Billboard Hot 100, on a beat he bought for $30. Steve Lacy produced "PRIDE." for Kendrick Lamar's Grammy-winning "DAMN." album on his iPhone using GarageBand. That iPhone? It's now in the Smithsonian.

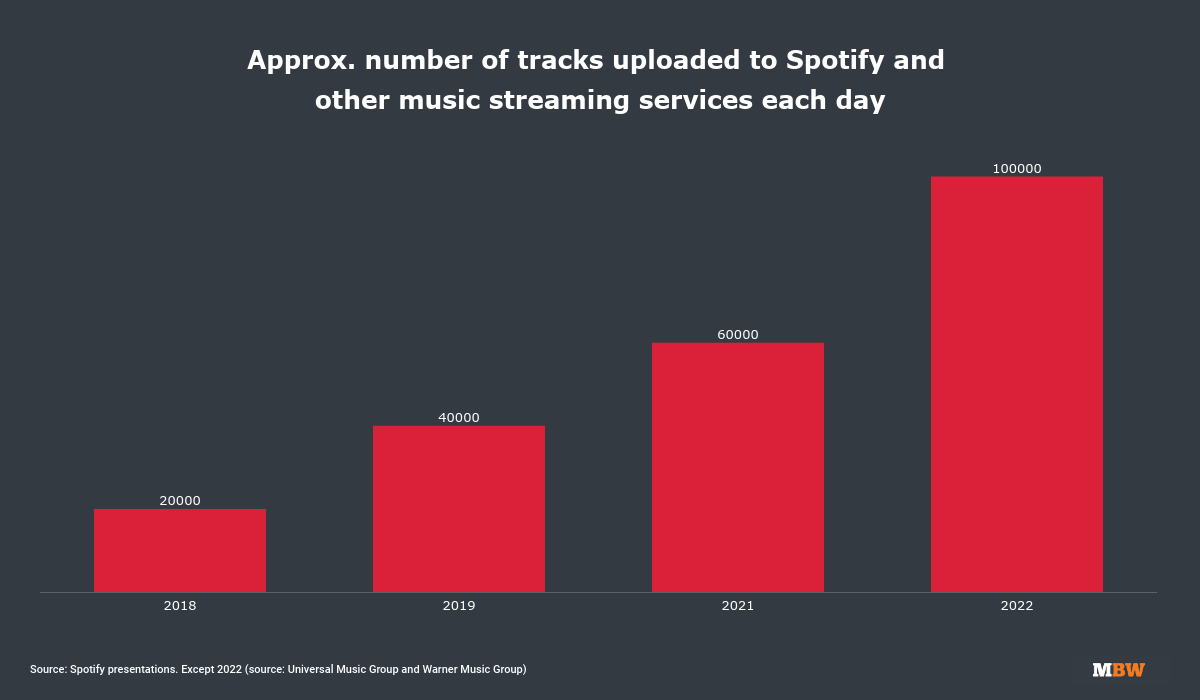

The numbers are staggering. The democratization of music production has taken independent artists from a niche segment to 46.7% of the global recorded music market, worth $14.3 billion in 2023. Production costs plummeted by 99.5%. More tracks now appear on streaming platforms in a single day than were released in the entire year of 1989: 100,000 new tracks are uploaded to Spotify every day. That's 36.5 million tracks per year.

Professional recording studios? They've collapsed.

Studio employment fell 42.9% between 2007 and 2016.

Of London's 300 recording studios, most have closed or converted to tourist attractions. The survivors adapted by offering what bedroom producers can't—orchestral recording spaces, vintage equipment, and specialized expertise.

Now, pay attention, because this is precisely what's about to happen to software development.

The coming application explosion

When the barriers to music production fell, we didn't get fewer musicians—we got more music than anyone knew what to do with. When the barriers to software development fall—and they're falling right now—we won't get fewer applications.

We'll get an explosion that makes the App Store boom look like a gentle breeze.

Think about it. Currently, if your accounting department needs a custom workflow tool, they submit a ticket, wait six months, and receive something that sort of works.

Tomorrow? The head of accounts receivable will describe what they need to Claude Code, iterate through a few versions, and deploy it by lunch. Not because they became programmers, but because programming became accessible.

This isn't speculation. This is extrapolation. When GarageBand launched, nobody predicted that bedroom producers would dominate the Billboard charts. But that's precisely what happened. When every department, every team, every motivated individual can create software, we'll see solutions to problems we didn't even know existed.

Distribution is the new development

But here's where it gets interesting—and where most people miss the plot entirely. In the GarageBand era, making music became trivial. Getting heard became everything. Spotify doesn't care if you recorded in Abbey Road or your basement. They care if people listen.

Today, only 20% of uploaded tracks ever get more than 1,000 streams. The top 1% of artists capture 90% of streaming revenues.

The same transformation is coming to software. When anyone can build an application, building isn't the differentiator. Distribution is. Network effects are. Brand is. User trust is. Community is.

Let me be crystal clear about what this means: Your junior developers need to start thinking like product managers. Your senior developers need to start thinking like CEOs. Because in a world where code generation is commoditized, the only sustainable advantage is understanding and serving your users better than anyone else.

This isn't some future state. This is happening right now. The most successful AI-assisted development teams aren't the ones writing the best prompts. They're the ones who understand their users deeply, who build distribution into their development process, who create network effects from day one.

The great moat erosion

Now, let me deliver some hard truths that'll make certain people very uncomfortable.

Every technical moat you've built is eroding. Fast.

That proprietary COBOL system that only three people in your company understand? Claude Code can read it, understand it, and translate it to modern languages faster than your experts can explain why it's complicated. That complex SIP/WebRTC implementation that took your team two years to master? AI can generate a working implementation in a matter of hours.

"But wait," you say, "what about our sophisticated ML models? Surely AI can't replicate our data science expertise?"

Wrong. AI systems are already generating, training, and deploying machine learning (ML) models autonomously. They're doing hyperparameter optimization, feature engineering, and even architectural search. That PyTorch expertise you spent years developing? It's being commoditized as we speak.

Here's what this means: If your value proposition is "we know how to do technically complex things," you're in trouble. Deep trouble. Because technical complexity is precisely what AI eats for breakfast. It’s just generation on steroids. Consider the fact that models are becoming increasingly proficient at translation. Well, technical production from requirements is yet another form of translation.

If you’re a “people person” who “translates” for a living, this is not a moat.

The new competitive advantages

So what remains? What moats survive the flood? Let me tell you what actually matters in the post-GarageBand era of software development:

Domain expertise that goes beyond code. Understanding not just how to implement a solution, but which solution actually solves the business problem. AI can code, but it can't sit in your customer meetings and read the room.

Distribution networks and user relationships. Just like the best musicians aren't necessarily the most technically proficient, the best software won't necessarily be the most elegantly coded. It'll be the software that reaches users, solves their problems, and keeps them coming back.

Brand trust and reputation. When anyone can spin up a CRM system, why do people pay for Salesforce? Brand. Trust. Ecosystem. Community. These aren't technical advantages—they're human advantages.

Speed of iteration and learning. In the music industry, the winners aren't the perfectionists laboring over their magnum opus. They're the ones consistently releasing content, learning from feedback, and building their audiences. The same principle applies here.

Integration and ecosystem thinking. The valuable software won't be a standalone application. It'll be the glue that connects everything else. It'll be the platforms that others build upon. It'll be the standards that others adopt.

My startup friends will argue that this has always been the case. Old news, they will mutter under their breath. However, the number of executive briefings (EBCs) I attended at AWS, where large enterprises discussed legacy applications, suggests that more than 95% of the industry has not undergone this evolution. Especially the IT industry.

The bottom-up transformation

Here's what really keeps me up at night—and what should keep you up too. This transformation isn't happening top-down. It's not CIOs making strategic decisions. It's happening bottom-up, right now, in every corner of every organization.

That marketing analyst who's tired of waiting for IT to build her a dashboard? She's already using Claude to generate Python scripts. That sales ops manager who needs better Salesforce integration? He's prompting Cursor to build custom connectors. That customer success team lead who wants better reporting? She's deploying AI-generated solutions without even telling IT.

This is the GarageBand effect in action. The tools are so accessible, immediate, and empowering that people simply start using them. By the time the organization realizes what's happening, it's not a question of whether to adopt AI development—it's a question of how to govern what's already happening.

Implications for every level

If you're a junior developer, you're no longer competing with other junior developers. You're competing with every motivated person in your organization who can clearly articulate what they want.

Your advantage isn't syntax knowledge—it's understanding systems, architecture, and integration.

If you're a senior developer, your job isn't to write the best code. It's to design the best systems, create frameworks that AI and citizen developers can safely build within, and be the conductor who ensures all these newly empowered musicians play in harmony.

You’re also ensuring that the software supply chain is sane, coherent, and trustworthy. It’s about providing a secure MCP server for the critical customer data in your warehouse. It’s about ensuring that pipelines have policies that only allow secure dependencies to be imported by AI agents.

If you're a development manager, you're not managing a team of coders. You're managing an ecosystem of builders, some human, some AI, some hybrid. Your job is to create the governance, the guardrails, the quality gates that let a thousand flowers bloom without creating a garden of weeds.

If you're a CTO, you need to fundamentally rethink your technology strategy. Your competitive advantage isn't in having the best developers or the most sophisticated tech stack. It's in having the best platform for rapid, safe, distributed development. It's in enabling your entire organization to build solutions while maintaining security, quality, and coherence.

The soundtrack to disruption

Let me bring this back to music one more time. When GarageBand democratized music production, the professional musicians who thrived weren't the ones who complained about declining standards. They were the ones who recognized that in a world of infinite music, curation becomes valuable.

Performance becomes valuable. Connection becomes valuable. Story becomes valuable.

The same principle applies here. In a world where anyone can generate code, the valuable developers won't be the ones who can write the cleverest algorithms. They'll be the ones who can:

Understand what actually needs to be built

Design systems that scale and integrate

Create platforms others can build upon

Build communities around their solutions

Tell the story of why their software matters

Connect with users at a human level

Move fast without breaking things

Learn and iterate relentlessly

The GarageBand revolution didn't kill music. It killed the gatekeepers. It killed the artificial scarcity. It killed the "you must be this technical to participate" sign at the door.

I have seen Claude Code fail miserably at scalable system design and distributed systems patterns. I have not seen Claude fail at data structures and algorithms. The point? The job has become more about patterns of scale and integration than about semantics and implementation.

The same thing is happening to software development. Right now. Today. And just like with music, the people who'll thrive aren't the ones protecting the old order. They're the ones who embrace the chaos, ride the wave, and build the future.

The emergence of the software development manager—now more product-focused than ever

Let me paint you a picture. It's 2025, and at this very moment, 82% of developers are using AI coding assistants daily. Not occasionally. Not experimentally. Daily. One CTO—and I have this on good authority—recently reported that 90% of their codebase is now generated by AI. Ninety percent! That's up from 10-15% just twelve months ago.

If that doesn't make you sit up straight and pay attention, then I don't know what will. Before you yell “AI SLOP” at the top of your lungs, think about the fact that you sound a lot like a music producer from the 80s who is about to lose their shirt.

But here's where most people get it wrong. They think this is about replacement. They think it's about obsolescence.

They believe the machines are coming for their jobs, and they'd better learn to drive a truck or sell insurance.

Wrong, wrong, spectacularly wrong.

What's happening is far more interesting, far more complex, and—if you're smart about it—far more opportunity-rich than simple replacement. We're witnessing the birth of a new role: the Agentic Software Development Manager. Not a people manager, mind you, but a manager of intelligent systems. A conductor of an AI orchestra. A Charlie Bell for the age of artificial intelligence.

Charlie Bell and the philosophy of operational excellence

Now, I would like to tell you about Charlie Bell. If you don't know who Charlie Bell is, please rectify that ignorance as soon as possible. This is a man who spent 23 years at Amazon, where he built AWS from the ground up, and now leads Microsoft's entire security organization with 34,000 engineers under his command. This is not a man who thinks small.

Bell's philosophy—and pay attention because this is crucial—isn't about writing perfect code. It's about building perfect systems. Systems that are reliable, scalable, and most importantly, continuously improving. He pioneered the concept of single-threaded teams at AWS. You know what that means? It means one team owns one service, forever. Not until the project ships. Not until the next reorg. Forever.

"If you really want to do something that matters," Bell once said, "then you're going to put a single-threaded team on it."

Think about that. Really think about it. In a world where AI can generate code faster than any human, what matters isn't the code—it's the ownership, the continuous improvement, the relentless focus on operational excellence.

Bell's weekly operations reviews at AWS weren't code reviews. They were system reviews. Service health, incident analysis, operational readiness—these were the metrics that mattered. And here's the kicker: this is exactly the model we need for AI-generated code.

Not "did the AI write good code?" but "is the system healthy, reliable, and improving?"

Crucially, if you lean into the “AI slop” (rather than dismissing it) and build mechanisms to continuously improve it, those mechanisms will enable you to ship faster than the competition, with more trust and security, all while innovation grows by an order of magnitude.

Over the last couple of weeks, I have been doing exactly this. I asked my agents to build canaries and test suites, then watched as the mechanisms corrected the agents and the agents improved. Work that would have taken weeks took days, at an acceptable level of MVP quality.

The new reality of AI coding systems

I'm going to give you an update on the current state of play. Claude Code, powered by Anthropic's latest models, can now map and understand entire codebases in seconds. Not files. Codebases. It handles multi-file changes with deep project context, operates directly in your terminal, and shows its reasoning process for complex tasks. We're talking about a 72.5% score on SWE-bench, which, for those keeping score at home, is better than most junior developers would manage.

I jokingly call it an SDE0.7: it has 70% of the mental capability of an entry-level programmer while producing code an order of magnitude faster. (The analogy is to an L4 SDE at Amazon, an entry-level college hire, also called an SDE 1.)

Cursor (and if you haven't tried Cursor, you're already behind) is a VS Code fork with AI so deeply integrated it's like having a senior developer looking over your shoulder 24/7. Its predictive capabilities are so good that it anticipates your next edit location. Think about that. It knows where you're going before you do.

GitHub Copilot, the granddaddy of them all, now generates over $500 million in revenue. Half a billion dollars! From an AI that writes code! And they're not stopping there. Their new coding agent can autonomously resolve issues, generate pull request summaries, and integrate with enterprise knowledge bases.

However, what should really blow your mind is that Amazon Q Developer is showing 50-60% code acceptance rates at National Australia Bank. BT Group has generated 200,000 lines of AI code across 1,200 developers. These aren't experiments. These aren't pilots. This is production code, running real systems, serving real customers.

The five pillars of AI code management

So, how do you become a Charlie Bell for AI systems? How do you evolve from code writer to AI conductor? Let me break it down into five essential mechanisms you must master.

First: Test-driven development reimagined

TDD isn't dead—it's more important than ever. But the workflow has inverted. You don't write tests to verify your code; you write tests to specify what the AI should build. Think of tests as contracts, as specifications, as the musical score that your AI orchestra will perform.

Here's how it works: You write comprehensive test suites that define business requirements. The AI generates an implementation to satisfy those specifications. You iterate, refine, and the AI refactors while maintaining test compliance. The red-green-refactor cycle that took hours now takes minutes.
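
To make "tests as contracts" concrete, here is a minimal sketch. Everything in it is made up for illustration: the `late_fee` function, the 1.5% per period rule, and the 10% cap are assumptions, not anything from a real project. The point is the direction of travel: the test class is written by a human first, and the implementation is whatever the AI hands back to satisfy it.

```python
import unittest


def late_fee(days_overdue: int, balance: float) -> float:
    """Candidate implementation (hypothetical; imagine the AI wrote this)."""
    if days_overdue <= 0:
        return 0.0
    # 1.5% of the balance per started 30-day period, capped at 10%.
    periods = (days_overdue + 29) // 30
    return round(balance * min(0.015 * periods, 0.10), 2)


class LateFeeSpec(unittest.TestCase):
    """Human-written spec: each test encodes one business rule."""

    def test_no_fee_when_paid_on_time(self):
        self.assertEqual(late_fee(0, 1000.0), 0.0)

    def test_fee_is_charged_per_started_period(self):
        self.assertEqual(late_fee(1, 1000.0), 15.0)
        self.assertEqual(late_fee(31, 1000.0), 30.0)

    def test_fee_is_capped_at_ten_percent(self):
        self.assertEqual(late_fee(365, 1000.0), 100.0)


def run_spec() -> bool:
    """Run the spec and report whether the candidate satisfies it."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LateFeeSpec)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

When the AI refactors `late_fee`, `run_spec()` is the contract it has to keep honoring.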

However, and this is crucial, you must review those AI-generated tests for tautological thinking. An AI that generates both code and tests can create beautifully synchronized nonsense. Always, always maintain human oversight on the test logic itself.
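
One way to catch "beautifully synchronized nonsense" is a tiny mutation check: break the code on purpose and see whether the tests notice. The sketch below is hand-rolled and purely illustrative (the `add` function, the mutants, and both suites are assumptions); real mutation testing tools generate the mutants for you.

```python
from typing import Callable, Dict, List

Impl = Callable[[int, int], int]
Suite = Callable[[Impl], bool]


def add(a: int, b: int) -> int:
    return a + b


# Hand-written mutants: each one is `add` with a deliberately injected bug.
MUTANTS: Dict[str, Impl] = {
    "minus_instead_of_plus": lambda a, b: a - b,
    "ignores_second_arg": lambda a, b: a,
    "off_by_one": lambda a, b: a + b + 1,
}


def tautological_suite(impl: Impl) -> bool:
    """Restates the implementation instead of checking known answers."""
    return impl(2, 3) == impl(2, 3) and isinstance(impl(0, 0), int)


def meaningful_suite(impl: Impl) -> bool:
    """Checks concrete, independently known answers."""
    return impl(2, 3) == 5 and impl(0, 0) == 0 and impl(-1, 1) == 0


def surviving_mutants(suite: Suite) -> List[str]:
    """Return names of mutants the suite fails to catch (it still passes)."""
    return [name for name, mutant in MUTANTS.items() if suite(mutant)]
```

Both suites pass against the real `add`. Only the meaningful one kills every mutant; the tautological one lets all three through, which is exactly the smell to look for in AI-written tests.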

I use Playwright for these tests and have them emit screenshots, so I can validate the results against what I am actually seeing.

Second: Canaries that actually sing

Bell's operational excellence philosophy demands gradual rollouts, and with AI-generated code, this isn't optional—it's a matter of survival. You need canary deployments that are more sophisticated than "deploy to 5% and pray."

Frankly, the Playwright-generated tests can act as the basis for your canary. Select a few end-to-end transaction tests and integrate them into your git commit process to begin with. Then host a basic execution engine that runs them against your pipeline stages.

If you ask Claude Code to build your pipeline and document it, I have noticed that it stays in Claude's history. Having the canary execute on deployment will then prompt Claude to resolve failures automatically. In my experience, this integrated safety net resolves many hallucinations without my intervention.
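
A "basic execution engine" for a canary does not need to be fancy. Here is a minimal sketch, assuming each end-to-end check is just a Python callable that raises on failure (in my setup those would wrap Playwright tests, but the names and structure here are hypothetical). The useful part is the failure summary, which is the text you would hand back to the agent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CanaryReport:
    passed: List[str]
    failed: Dict[str, str]  # check name -> error message

    @property
    def healthy(self) -> bool:
        return not self.failed

    def failure_summary(self) -> str:
        """Text that could be handed back to the coding agent."""
        return "\n".join(f"{name}: {msg}" for name, msg in sorted(self.failed.items()))


def run_canary(checks: Dict[str, Callable[[], None]]) -> CanaryReport:
    """Run each end-to-end check; a check fails by raising an exception."""
    report = CanaryReport(passed=[], failed={})
    for name, check in checks.items():
        try:
            check()
            report.passed.append(name)
        except Exception as exc:  # keep going so one failure doesn't hide others
            report.failed[name] = f"{type(exc).__name__}: {exc}"
    return report
```

Wire `run_canary` into a pipeline stage, block promotion when `healthy` is false, and paste `failure_summary()` into the agent's next prompt.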

Pro Tip: Claude Code loves to generate “fallback” or fake implementations. Always include a requirement in your prompts that it must not create them. It seems to prioritize pleasing you (not the customer) above all else. Ensure that your prompts and requirements always contain the expected, measured customer impact. This is really the essence of my learning: Claude Code focuses on you, but the real power happens when you focus Claude Code on the customer.
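
Prompts are the first line of defense; a cheap scan is the second. The sketch below is a crude heuristic, not a real analyzer, and the pattern list is my own guess at what fake implementations tend to look like. Tune it to what you actually see in your repo.

```python
import re
from typing import List, Tuple

# Hypothetical patterns; adjust to the placeholders your agent tends to emit.
SUSPECT_PATTERNS = [
    r"\bfallback\b",
    r"\bmock(ed)?\b",
    r"\bplaceholder\b",
    r"\bfake\b",
    r"\bTODO\b",
    r"NotImplementedError",
]
_SUSPECT = re.compile("|".join(SUSPECT_PATTERNS), re.IGNORECASE)


def find_suspect_lines(source: str) -> List[Tuple[int, str]]:
    """Return (line_number, stripped_line) for lines matching any pattern."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if _SUSPECT.search(line):
            hits.append((number, line.strip()))
    return hits
```

It will produce false positives (legitimate test mocks, for one), so treat a hit as a reason to look, not a verdict.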

Third: Product management for machines

This is where most engineers stumble. They think managing AI code is a technical problem. Wrong. It's a product problem. You need to think like a product manager, but your product isn't software. It's the AI that creates software.

You need feature flags not just for code features, but for AI behaviors. You need A/B tests not just for user interfaces, but for AI prompting strategies. You need metrics not just for system performance, but for AI reliability.
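
An A/B test for prompting strategies needs one boring ingredient: a stable way to decide which strategy a given task gets. Here is a minimal sketch using a hash of the task ID. The variant names and the experiment label are made up for illustration.

```python
import hashlib
from typing import Sequence


def assign_variant(task_id: str, variants: Sequence[str], experiment: str) -> str:
    """Deterministically map a task to one prompt variant.

    Hashing (experiment, task_id) means the same task always gets the same
    variant, and different experiments shuffle tasks independently.
    """
    digest = hashlib.sha256(f"{experiment}:{task_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```

For example, `assign_variant("JIRA-1234", ["terse_prompt", "spec_first_prompt"], "prompt-style-v1")` always returns the same variant for that ticket, so you can compare outcomes per variant later.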

Think about acceptance rates, hallucination frequencies, and code churn rates. At Mercedes-Benz, they're tracking not just productivity metrics but developer satisfaction with AI assistance. That's product thinking. That's what separates the engineers who will thrive from those who will merely survive.
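
If you want to track those three numbers, you first have to define them. Here is one possible set of definitions as a sketch; the `Suggestion` record and the exact formulas are my assumptions, and your team may reasonably define churn or hallucination differently.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Suggestion:
    accepted: bool        # did a human keep the suggestion?
    hallucinated: bool    # did it reference something that does not exist?
    lines_added: int      # lines the suggestion contributed
    lines_rewritten: int  # of those, lines changed again within the review window


def summarize(suggestions: Iterable[Suggestion]) -> dict:
    """Compute acceptance, hallucination, and churn rates for a batch."""
    items = list(suggestions)
    if not items:
        return {"acceptance_rate": 0.0, "hallucination_rate": 0.0, "churn_rate": 0.0}
    accepted = [s for s in items if s.accepted]
    added = sum(s.lines_added for s in accepted)
    rewritten = sum(s.lines_rewritten for s in accepted)
    return {
        "acceptance_rate": len(accepted) / len(items),
        "hallucination_rate": sum(s.hallucinated for s in items) / len(items),
        "churn_rate": rewritten / added if added else 0.0,
    }
```

Churn here only counts accepted code, on the theory that rejected suggestions never reached the codebase in the first place.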

Pro Tip: Involve Claude in your business planning. It is amazing what happens when Claude becomes a collaborative team member in building for a customer, as opposed to simply trying to make you happy. This is what I did - I fed Claude business planning documents early. Had it convert the plans into fake markdown development plans.

Pro Tip #2: Know when to use the right model. I have a developer API key with Claude and a regular Claude Max subscription. Claude Max will limit access to the Opus model based on usage. I purposefully switch between Claude Max and my API key for strategic tasks, even though it will consume tokens and cost me more money.

(Hi Lindsay! Sorry about those charges!)

Pro Tip #3: When you are using Anthropic’s Opus model, do not hesitate to ask Claude not only to accomplish tasks but also to review what has been built, suggest improvements, plan ahead, or conduct a competitive analysis. Don’t just use Claude to translate from a requirement to code - make it a part of the design process, just like you would an entry-level software developer.

If you are an entry-level software developer, start upleveling into becoming a manager of interns - Claude is now your intern (especially when using Opus).

If you see the message that it has switched to Sonnet, do not ask the model to do complicated tasks that require planning, thinking, and strategy. Instead, either log in with your API key and switch to Opus, or take a break.

Fourth: Verification at scale

Here's a sobering statistic: 51.24% of GPT-3.5-generated programs contain vulnerabilities. Now, before you panic, commercial models do better on a related measure, hallucinating dependencies at a rate of around 5.2%. But 5% of your codebase containing hallucinated dependencies is still 5% too much.
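
Hallucinated dependencies are one of the easier problems to catch mechanically. A minimal sketch, assuming your team maintains an allowlist of approved packages (the allowlist itself and the package names in the usage note are hypothetical): parse the generated file and flag any import that isn't on the list.

```python
import ast
from typing import Set


def imported_top_level_modules(source: str) -> Set[str]:
    """Collect the top-level module names a Python source file imports."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # level == 0 skips relative imports such as `from . import x`
            modules.add(node.module.split(".")[0])
    return modules


def unapproved_imports(source: str, allowlist: Set[str]) -> Set[str]:
    """Return imports that are not on the allowlist (possible hallucinations)."""
    return imported_top_level_modules(source) - allowlist
```

If the allowlist is `{"os", "requests"}` and the agent writes `from fastjsonx import loads`, the check returns `{"fastjsonx"}` and the pipeline can stop before anyone tries to install it.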

You need verification mechanisms that would make a Swiss watchmaker jealous. Sandboxed execution environments using microVMs. Formal verification tools, such as ESBMC, that use mathematical proofs to validate correctness. Property-based testing that defines invariants your code must satisfy. Mutation testing that validates your tests actually catch bugs.
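
Property-based testing sounds exotic until you see how small it is. The sketch below is hand-rolled with the standard library so it runs anywhere; in practice a dedicated library would generate and shrink inputs far better. The function under test and its invariants are made up for illustration.

```python
import random
from typing import Callable, List, Optional


def dedupe_keep_order(items: List[int]) -> List[int]:
    """Function under test (hypothetical): drop repeats, keep first occurrences."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def holds(output: List[int], original: List[int]) -> bool:
    """Invariants: no duplicates, same set of values, first-seen order kept."""
    if len(output) != len(set(output)) or set(output) != set(original):
        return False
    first_positions = [original.index(x) for x in output]
    return first_positions == sorted(first_positions)


def find_counterexample(fn: Callable[[List[int]], List[int]],
                        trials: int = 500, seed: int = 0) -> Optional[List[int]]:
    """Try random inputs; return the first one that breaks an invariant."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = [rng.randint(-5, 5) for _ in range(rng.randint(0, 12))]
        if not holds(fn(list(data)), data):
            return data
    return None
```

You state what must always be true, then let the machine hunt for an input that breaks it. A plausible-looking wrong answer like `sorted(set(xs))` gets caught because it loses the original order.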

And—this is non-negotiable—you need comprehensive security scanning. Tools like Snyk's DeepCode AI, which models over 25 million data flow cases. Static analysis that runs in seconds, not minutes. Runtime monitoring that catches behavioral anomalies specific to AI patterns.

And build it into your canaries and pipelines.

Fifth: The path to becoming a mini Charlie Bell

This is the synthesis. This is where it all comes together. You must build what Bell calls "situational awareness"—the ability to understand system health at a glance while maintaining the capability for a deep dive when needed.

Implement hierarchical operational reviews. Team-level weekly reviews during on-call handoffs. Director-level reviews with incident deep-dives. And yes, company-wide operations meetings where every service, every AI component, every critical system gets scrutinized.

Yes, Claude and I have a review and check-in every day. Much like a standup. Have a standup with Claude where you ask it to not write any code. Ensure it self-documents what was discussed.

When necessary, you will have to refresh its memory. Candidly, managing your SDE0.7's memory is going to be a critical part of the job. Guess what? This is what an SDM has to excel at anyway.

Guilty admission: I treat Claude just like I would treat another SDE - don’t treat it like a program. Yes, I talk to Claude so that it begins to understand me.

But here's the twist: Your (human) team reviews aren't just about uptime and error rates. They're about AI behavioral metrics. Model drift. Acceptance rates. Hallucination patterns. Code quality trends. You're not just operating software; you're operating the AI that creates software.

Build single-threaded ownership for AI components. One team, one AI system, continuous ownership. No "launch and flee." No "that's the AI team's problem."

If your team uses AI to generate code, your team is responsible for that AI's behavior, performance, and improvement.

The tools of transformation

Let me get specific about tools, because abstract philosophy without concrete implementation is just academic masturbation.

Claude Code excels at complex, multi-file operations with a deep understanding of context. Use it when you need to refactor entire systems, when you need to understand legacy codebases, and when you need AI that can reason about architectural decisions. At $200 per month, it's a bargain for the capabilities it provides.

Opus works - Sonnet… the jury is still out. Ask it to think. Document, test, document some more - and ask it to report its plan and thinking as well.

Cursor is your rapid development environment. Its predictive capabilities and intuitive interface make it perfect for the fast-paced, iterative development that AI enables. Use it for prototyping, for exploration, for those moments when you need to move from idea to implementation at the speed of thought.

GitHub Copilot is the reliable workhorse. With the broadest IDE support and most mature platform, it's ideal for teams that need consistency and enterprise features. Its new coding agent capabilities put autonomous development within reach of every team.

I really only use Copilot for low-hanging DevOps tasks. It sucks at deep thinking and actual code modification. Use it for ops tasks - not building software, in its current incarnation.

But tools are just tools. What matters is how you orchestrate them. Use Claude for complex reasoning, Cursor for rapid iteration, and Copilot for day-to-day grunt work. Layer your verification tools—Semgrep for patterns, ESBMC for formal verification, and Hypothesis for property testing.

Build defense in depth. The guardrails will cause your agents to exceed expectations.

The hard truths about AI hallucination

Now, let's talk about the elephant in the room: hallucination. 76% of developers experience frequent AI hallucinations. Only 3.8% report both low hallucination rates and high confidence. These are not comfortable numbers.

But here's what the hand-wringers don't understand: hallucination isn't a bug to be eliminated; it's a characteristic to be managed. Just like human developers make mistakes, AI systems hallucinate. The difference is that AI hallucinations are predictable, detectable, and preventable with the proper mechanisms.

Your SDE0.7s will make more errors than your SDE1s.

However, that isn’t an excuse not to use them.

Nobody has ever invested in perfection.

They invest in an outcome.

Utilize prompt engineering techniques, such as chain-of-verification, where the AI verifies its own work. Implement "according to" prompting that grounds responses in authoritative sources. Use step-back prompting that starts with high-level thinking before implementation details.
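
Chain-of-verification is easier to understand as a loop than as a buzzword. Here is a rough sketch of the four steps: draft, plan checks, answer the checks independently, revise. The `model` argument is a stand-in for whatever client you use (any function that takes a prompt and returns text), and the prompt wording is mine, not an official recipe.

```python
from typing import Callable, List

Model = Callable[[str], str]  # hypothetical: takes a prompt, returns text


def chain_of_verification(model: Model, task: str) -> str:
    # 1. Draft an answer.
    draft = model(f"Task: {task}\nWrite a first draft answer.")
    # 2. Plan verification questions about the draft.
    plan = model(
        "List short questions, one per line, that would check the facts "
        f"in this draft:\n{draft}"
    )
    questions: List[str] = [q.strip() for q in plan.splitlines() if q.strip()]
    # 3. Answer each question on its own, without showing the draft,
    #    so the model cannot simply repeat its earlier claims.
    checks = [(q, model(f"Answer concisely: {q}")) for q in questions]
    # 4. Revise the draft in light of the independent answers.
    evidence = "\n".join(f"Q: {q}\nA: {a}" for q, a in checks)
    return model(
        f"Task: {task}\nDraft:\n{draft}\nVerification:\n{evidence}\n"
        "Rewrite the draft, correcting anything the verification contradicts."
    )
```

The design choice that matters is step 3: the verification questions are answered without the draft in view.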

But most importantly, never trust, always verify. Every piece of AI-generated code goes through the same rigorous pipeline: automated testing, security scanning, human review, canary deployment, and production monitoring. The AI doesn't get special treatment because it's AI.

If anything, it gets extra scrutiny. And building that scrutiny is your value.
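
"Same rigorous pipeline" can be read quite literally: an ordered list of gates where the first failure stops everything. A minimal sketch, with gate names taken from the list above and the check functions left as placeholders you would supply (they are assumptions, not real integrations):

```python
from typing import Callable, List, Tuple

Gate = Tuple[str, Callable[[str], bool]]  # (gate name, check on a change id)


def run_gates(change_id: str, gates: List[Gate]) -> Tuple[bool, List[str]]:
    """Run gates in order and stop at the first failure.

    Returns (approved, log), where log lists each gate that ran and its result.
    """
    log = []
    for name, check in gates:
        ok = check(change_id)
        log.append(f"{name}: {'pass' if ok else 'FAIL'}")
        if not ok:
            return False, log
    return True, log
```

Order the gates cheapest first (tests, then scanning, then human review, then canary) so the expensive steps only run on changes that have earned them.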

The organizational transformation

This isn't just about individual engineers. Entire organizations are transforming. Accenture reports 90% of developers writing better code with AI assistance. BT Group automated 12% of repetitive tasks. National Australia Bank achieved 60% code acceptance rates with customization.

But the fundamental transformation isn't in the metrics—it's in the roles. Engineers aren't becoming obsolete; they're becoming more valuable. They're moving from syntax to systems, from implementation to design, from coding to conducting.

New roles are emerging: AI Product Managers who translate capabilities into features. ML Operations Engineers who specialize in model deployment. AI Ethics Officers who ensure responsible implementation. Prompt Engineers commanding salaries from $50,000 to $335,000.

And yes, that's right—prompt engineering is a six-figure job. Because knowing how to talk to an AI, how to extract maximum value from these systems, how to prevent hallucinations, and ensure quality—these are the skills that separate the amateur from the professional.

Let me be crystal clear.

You won’t get a six-figure job knowing how to write cool text into Claude. “Prompt engineering” isn’t just about caressing GPT or Claude into producing great results. If somebody is getting paid for that, they’ll be out of a job the next time a model launches.

You will secure a six-figure job knowing how to design a system that guides Claude to deliver a critical business outcome 10 times faster.

The future is already here

William Gibson said, “The future is already here—it's just not evenly distributed.”

With AI-assisted development, the future is not only here, it's accelerating at a pace that makes Moore's Law look leisurely.

Gartner predicts that by 2027, 70% of developers will use AI-powered tools. I think they're being conservative. By 2027, developers who don't use AI tools will be as rare as developers who don't use version control today.

But here's the crucial insight: The developers who thrive won't be those who resist this change or those who blindly accept it. They'll be those who embrace their new role as AI conductors, applying Charlie Bell's operational excellence principles to AI systems, and building the verification and containment mechanisms that make AI-generated code safe for production.

They'll be the ones who understand that writing code was never the point. The point was building systems that solve problems, that serve users, that create value.

AI just changes how we do that.

It doesn't change why we do it.

Your mission, should you choose to accept it

So here's your homework. Here's what you need to do right now, today, before you close this article and go back to your regularly scheduled programming:

First, if you're not already using AI coding tools daily, start. Pick one—Claude, Cursor, Copilot, I don't care which—and use it for everything. Feel the friction. Understand the capabilities. Discover where it excels and where it falls short.

Pro Tip: Start with Claude.

Second, implement test-driven development with AI. Write tests first, let AI implement. See how the dynamic changes. Experience the speed of iteration. But also experience the need for vigilance, for verification, for human judgment.

Third, build your first canary deployment pipeline for AI code. Start simple—5% traffic, basic error monitoring. But make it real. Deploy AI-generated code in production, with safeguards, and observe the results.

Fourth, start thinking like a product manager for AI. Track metrics. Run experiments. Build hypotheses about what makes AI-generated code successful or problematic. Develop your intuition.

Fifth, study Charlie Bell. Read his interviews. Watch his talks. Understand that operational excellence isn't about perfect code—it's about perfect systems. And perfect systems are built through continuous improvement, not one-time brilliance.
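
For the third item, "5% traffic, basic error monitoring" really can start this small. The sketch below is a starting point under my own assumptions: users are identified by a string ID, and the tolerance and minimum request count are arbitrary defaults you should replace with your own.

```python
import hashlib


def in_canary(user_id: str, percent: float = 5.0) -> bool:
    """Stable assignment: the same user always lands in the same group."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def should_roll_back(canary_errors: int, canary_requests: int,
                     baseline_error_rate: float, tolerance: float = 0.01,
                     min_requests: int = 100) -> bool:
    """Roll back when the canary's error rate exceeds baseline + tolerance."""
    if canary_requests < min_requests:
        return False  # not enough traffic to judge yet
    return canary_errors / canary_requests > baseline_error_rate + tolerance
```

Route requests with `in_canary`, count errors on the canary side, and call `should_roll_back` on a timer. Everything fancier can wait until this has caught its first bad deploy.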

The choice before you

Here's the bottom line, and I want you to really hear this:

The age of the individual coder is coming to an end, but the age of the software development manager is just beginning.

This isn't a demotion; it's a promotion. You're moving from laborer to conductor, from implementer to architect, from coder to creator.

The engineers who thrive in this new world won't be those with the fastest typing speed or the most obscure language knowledge. They'll be those who can orchestrate AI systems, ensure reliability at scale, and bridge the gap between AI capability and business value.

They'll be the Charlie Bells of the AI age—focused not on writing perfect code, but on building perfect systems. Systems that learn, that improve, that serve users reliably and continuously. Systems that are owned, not abandoned. Systems that exemplify operational excellence in an age of artificial intelligence.

The tools are here. The techniques are proven. The transformation is underway. The only question is: Will you be a participant or a bystander? Will you be a conductor or merely a listener? Will you shape this future or be shaped by it?

The choice, as they say, is yours.

But choose quickly.

Because the revolution isn't coming. It's here. And every day you delay is a day your competitors gain an advantage.

Now stop reading and start doing.

The future of software development is waiting, and it needs conductors, not coders. It needs Charlie Bells, not keyboard jockeys. It needs you—but only if you're ready to evolve.

The stage is set. The orchestra is tuned. The baton is in your hand.

What are you waiting for?