The LLM-API Challenge (and Opportunity)

When Silicon Valley Met Socratic Method

Did you know that when Aristotle taught Alexander the Great, he didn't hand him a manual titled "How to Conquer the Known World: A Step-by-Step Guide"?

No, he taught him to think, to adapt, to question everything. That's exactly what's happening right now in software development, except instead of conquering Persia, we're teaching machines to have conversations with APIs, and frankly, it's just as revolutionary and twice as chaotic.

The integration of Large Language Models with tools and APIs isn't just another tech trend—it's the equivalent of teaching Kenny McCormick to stay alive permanently. We're dealing with fundamentally non-deterministic systems, economically driven by tokens rather than compute cycles, and capable of creative problem-solving that would make MacGyver weep with joy.

And just like South Park's treatment of contemporary issues, this transformation is simultaneously profound and completely insane.

The Great API Awakening: From Rigid Schemas to Conversational Chaos

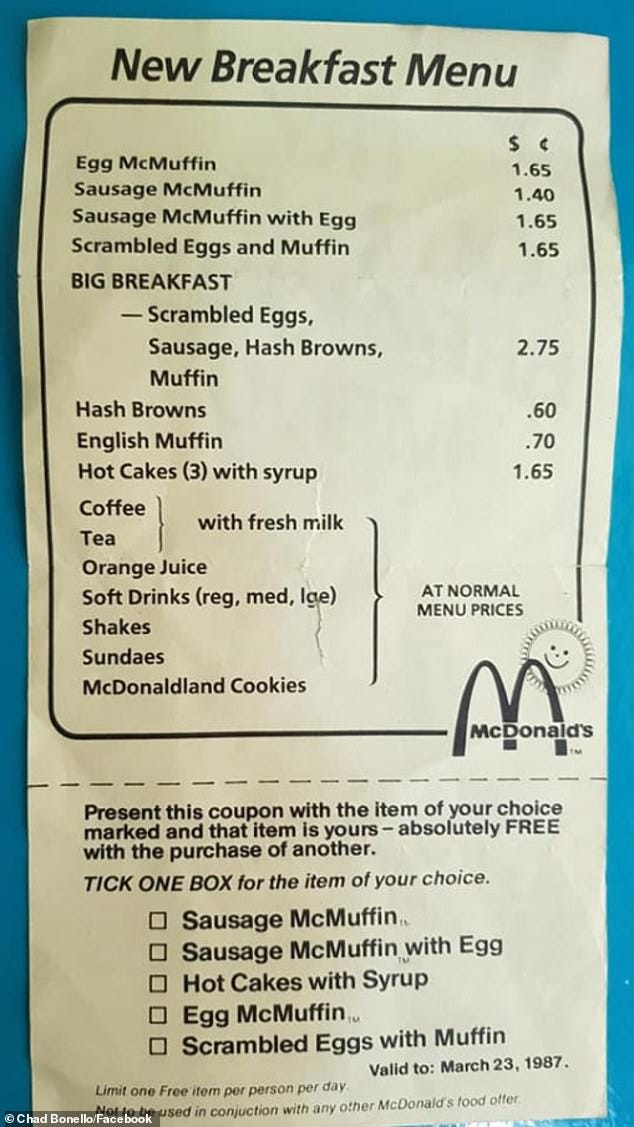

Traditional APIs were like ordering at a McDonald's in 1987—you knew exactly what was on the menu, the cashier expected specific phrases, and deviation from the script meant confusion.

Microsoft's function calling implementation changed that game entirely. Now we have "Open APIs" that are more like having a conversation with your favorite professor who happens to know everything about everything and can figure out what you need even when you're not entirely sure yourself.

These loosely typed interfaces with massive surface areas—think of them as the Wikipedia of APIs—enable LLMs to dynamically discover functionality through natural language descriptions. It's like giving Tweek Tweak a Swiss Army knife and letting him figure out that the tiny scissors can also be a screwdriver if you're creative enough, except instead of having a panic attack, the AI actually succeeds. The models interpret JSON schemas at runtime, determining when and how to call functions based on conversational context rather than rigid predetermined interactions.
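To make the "interpret JSON schemas at runtime" idea concrete, here is a minimal sketch of the host side of function calling. Everything in it is an assumption for illustration: the `get_weather` tool, its schema, and the `dispatch` helper are hypothetical and do not reproduce any vendor's SDK. The model would see the schema and emit a JSON tool call; the host checks that call against the schema before running anything.

```python
import json

# Hypothetical tool description; the name, fields, and wording are illustrative only.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. 'Denver'"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}


def get_weather(city: str, unit: str = "celsius") -> dict:
    # Stub implementation; a real tool would call a weather service here.
    return {"city": city, "unit": unit, "temperature": 21}


# Maps tool name -> (schema shown to the model, Python callable).
REGISTRY = {"get_weather": (WEATHER_TOOL, get_weather)}


def dispatch(tool_call_json: str) -> dict:
    """Check a model-produced tool call against its schema, then run it."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in REGISTRY:
        return {"error": f"unknown tool: {name}"}
    schema, func = REGISTRY[name]
    args = call.get("arguments", {})
    params = schema["parameters"]
    missing = [k for k in params["required"] if k not in args]
    unknown = [k for k in args if k not in params["properties"]]
    if missing or unknown:
        # Returning structured feedback lets the model correct itself and retry.
        return {"error": "bad arguments", "missing": missing, "unknown": unknown}
    return func(**args)
```

The interesting design choice is the failure path: instead of raising, the dispatcher hands the model a description of what went wrong, which is what makes the conversational loop possible.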

But here's where it gets interesting—and by interesting, I mean "requiring entirely new architectural patterns that make distributed systems look like Lego blocks."

And if we're not careful, these architectural patterns are going to collapse the creaky, 20-year-old data estates that Fortune 500 enterprises depend on to run.

Knowledge Graphs Meet Vector Databases: The Academic Power Couple

You want to talk about hybrid vigor? Microsoft's GraphRAG implementation is like combining a research librarian's organizational skills with a detective's pattern recognition abilities. The system extracts entities and relationships from documents, constructs knowledge graphs, and uses hierarchical clustering algorithms—basically teaching machines to think like conspiracy theorists, but with actual facts.

This approach achieves what we call "multi-hop reasoning"—imagine Kevin Bacon's Six Degrees game, but for data points. The system can connect disparate pieces of information that traditional RAG systems would miss entirely. Studies show this reduces hallucination significantly while improving accuracy on complex aggregation queries. It's like upgrading from a filing cabinet to having a personal research assistant with an eidetic memory and the organizational skills of Marie Kondo.
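For a feel of what "multi-hop" means mechanically, here is a toy sketch. It is not GraphRAG's implementation: the triples are invented, there is no entity extraction or clustering, and the traversal is a plain breadth-first search. It only shows how a graph lets you chain facts that never appear together in one document.

```python
from collections import deque

# Toy knowledge graph as (subject, relation, object) triples; all facts are made up.
TRIPLES = [
    ("Acme Corp", "acquired", "Widget Inc"),
    ("Widget Inc", "supplies", "Gadget LLC"),
    ("Gadget LLC", "headquartered_in", "Denver"),
]


def build_graph(triples):
    """Index triples by subject so neighbors can be looked up quickly."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
    return graph


def find_path(graph, start, goal, max_hops=3):
    """Breadth-first search; returns the list of hops linking start to goal, or None."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop budget
        for relation, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None
```

Asking how "Acme Corp" relates to "Denver" returns a three-hop chain, while a hop budget of two finds nothing, which is roughly the gap between graph-backed retrieval and a single similarity lookup.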

I have already personally observed this with Claude Code. Just giving it a vector search database of its historical conversations has made it a far more intelligent tool.

It has an organized memory of its entire conversation with the designer. No software engineer can claim this level of recall.

But—and there's always a but—structured tools with tight grammar patterns remain essential. Database queries still require exact syntax because SQL doesn't speak metaphor, API calls need strict parameter formatting because computers lack the human ability to infer "you know what I meant," and mathematical operations demand precision because two plus two equals four, not "approximately four, give or take some philosophical considerations."

And, critically, there is not a clear, easy, self-service, non-developer way to convert between the worlds yet. Most data is still locked away in formal, relational structures, with APIs that focus on reducing transaction counts and improving index utilization to reduce IOPS.

On the other hand, you have a model that wants free rein on every column, even though there are a million unindexed rows.
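One way to sit between those two worlds is a thin guard that lets the model pick a filter column, but only from a list you know is indexed. The sketch below uses Python's built-in `sqlite3`; the `orders` table, the `INDEXED_COLUMNS` allow-list, and the `safe_lookup` helper are all hypothetical names chosen for illustration.

```python
import sqlite3

# Hypothetical allow-list: only columns known to be indexed may be filtered on.
INDEXED_COLUMNS = {"orders": {"id", "customer_id"}}


def safe_lookup(conn, table, column, value, limit=100):
    """Run an equality lookup only if the model chose an indexed column."""
    if column not in INDEXED_COLUMNS.get(table, set()):
        raise ValueError(
            f"refusing to filter {table!r} on unindexed column {column!r}"
        )
    # Identifiers were checked against the allow-list above; the value and
    # the row limit are bound as parameters, never pasted into the SQL text.
    sql = f"SELECT * FROM {table} WHERE {column} = ? LIMIT ?"
    return conn.execute(sql, (value, limit)).fetchall()
```

The model keeps some freedom (which column, which value), while the exact syntax and the "please don't scan a million rows" rule stay in code that SQL can actually trust.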

The Latency Labyrinth: When Speed Becomes Multidimensional

Here's something that'll keep you up at night: research from Databricks reveals that LLM inference is primarily memory-bandwidth-bound rather than compute-bound. Most deployments achieve only 50-60% of peak memory bandwidth utilization. It's like having a Ferrari with the fuel line of a lawn mower.

Time to First Token typically exceeds 500ms even for minimal input—that's longer than it takes for most people to lose interest in a conversation. Time Per Output Token significantly impacts perceived speed, creating a user experience that's somewhere between watching paint dry and waiting for dial-up internet to load a single webpage in 2003.
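A common first-order way to reason about these two numbers together, assumed here as a simplification, is that total response time is roughly Time to First Token plus Time Per Output Token multiplied by the number of output tokens. The 50 ms per-token figure in the usage note is a made-up input, not a measurement.

```python
def response_latency_ms(ttft_ms: float, tpot_ms: float, output_tokens: int) -> float:
    """First-order estimate: time to first token plus per-token time for the rest."""
    return ttft_ms + tpot_ms * output_tokens


def tokens_per_second(tpot_ms: float) -> float:
    """Streaming speed the user perceives once output has started."""
    return 1000.0 / tpot_ms
```

With a 500 ms first token and a hypothetical 50 ms per output token, a 200-token answer takes about 10.5 seconds end to end, which is why output length usually matters more than the first-token delay.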

Traditional distributed systems paradigms fail here because they assume deterministic behavior and predictable outputs. LLMs introduce fundamental nondeterminism that breaks conventional retry and caching strategies. It's like trying to apply Newton's laws of motion to quantum mechanics—the basic assumptions don't translate. Or like expecting Randy Marsh to react consistently to any given situation—theoretically possible, but you're probably going to get surprised.

An industry that has overemphasized latency reduction for the last two decades is about to be surprised by users who favor intelligent responses over fast ones. Chatbots are teaching users that waiting a little longer can actually produce a more intelligent response.

This behavioral change means that the industry's entire API design and optimization paradigm is misaligned with upcoming user preferences.

The token-based pricing model creates additional complexity that would make airline pricing algorithms look straightforward. Output tokens cost approximately 3x more than input tokens, longer contexts dramatically increase costs, and production inference can dwarf experimentation costs by orders of magnitude. DoorDash's 10 billion daily predictions would cost $40M per day at typical LLM pricing. Annualized, that is roughly $14.6 billion, more than the GDP of some small nations.
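The arithmetic is worth doing once by hand. Dividing $40M by 10 billion predictions implies about $0.004 per prediction. The sketch below also shows how the roughly 3x output multiplier plays out per request; the input price passed in the usage note is a placeholder assumption, not a quoted rate.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_multiplier: float = 3.0) -> float:
    """Cost of one request when output tokens are priced at a multiple of input tokens."""
    output_price_per_1k = input_price_per_1k * output_multiplier
    return (input_tokens * input_price_per_1k
            + output_tokens * output_price_per_1k) / 1000


# Figures quoted in the text: $40M per day spread over 10 billion predictions.
DAILY_COST_USD = 40_000_000
DAILY_PREDICTIONS = 10_000_000_000
implied_cost_per_prediction = DAILY_COST_USD / DAILY_PREDICTIONS  # about $0.004
```

For example, `request_cost(1000, 500, 0.001)` prices 1,000 input tokens and 500 output tokens at a hypothetical $0.001 per thousand input tokens; the 500 output tokens account for more of the bill than the 1,000 input tokens do.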

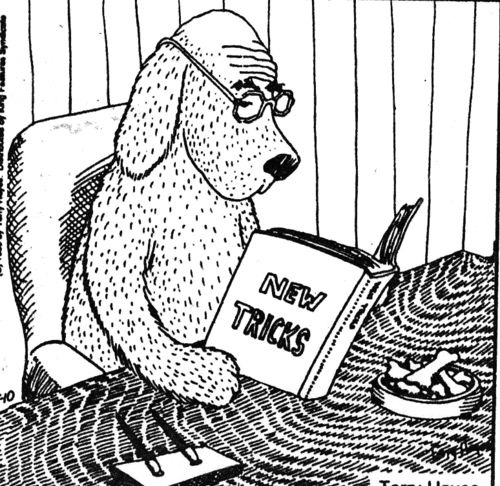

Enterprise Integration: Teaching Old Dogs Quantum Tricks

Adapting AI to legacy enterprise systems is like trying to install TikTok on a Nokia 3310: theoretically possible with enough middleware, but probably not what anyone had in mind when they designed either system. SAP's Business AI platform now offers 230+ AI-powered scenarios, expanding to 400 by the end of 2025, while somehow maintaining compatibility with transaction codes that predate the iPhone.

In healthcare, we're seeing AI-powered ambient scribes reduce documentation time by 75% despite integration complexity that would make Rube Goldberg proud. Epic and Cerner systems require FHIR API integration with OAuth 2.0 authentication, creating security layers that are simultaneously over-engineered and somehow still vulnerable to the digital equivalent of leaving your front door unlocked.

To meet security requirements, data has to be brought back to the client before it is sent on to the LLM. That closed loop creates an interesting retry paradigm, by the way: LLMs love to retry and fetch different scopes of data.

Currently, the only way for regulated industries like healthcare to achieve this is to cycle the data through the user’s environment in a consistent manner.

I’m eager to see the first COE (correction-of-error report) for a retry storm triggered by the LLM going down or misbehaving as users make a stream of microqueries to the source of truth. 😀
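Two well-known defenses against that kind of storm are exponential backoff with jitter and a retry budget. The sketch below is a generic illustration under assumed parameters (base delay, cap, and budget ratio are arbitrary); it is not tied to any particular client library or to how a given healthcare integration works.

```python
import random


def backoff_delays(max_retries=5, base=0.5, cap=30.0, rng=random.random):
    """Yield 'full jitter' delays: a random fraction of min(cap, base * 2**attempt)."""
    for attempt in range(max_retries):
        yield rng() * min(cap, base * (2 ** attempt))


class RetryBudget:
    """Permit retries only while they stay under a fixed fraction of all requests."""

    def __init__(self, ratio=0.1):
        self.ratio = ratio
        self.requests = 0
        self.retries = 0

    def record_request(self):
        self.requests += 1

    def can_retry(self):
        # Deny the retry if granting it would push retries over the budget.
        if self.retries + 1 > self.ratio * self.requests:
            return False
        self.retries += 1
        return True
```

Backoff spreads an individual agent's retries out in time; the budget caps how much extra load all retries combined can add, which is the part that protects the source of truth when the model starts fetching "just one more scope."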

The technical challenges are like a Russian nesting doll of incompatibility. Data format mismatches between fixed-length legacy records and dynamic JSON schemas require ETL pipelines that are part software engineering, part digital archaeology. Authentication systems designed for human users struggle with AI agents requiring high-volume, parallel access patterns—it's like creating a revolving door for a stampede of caffeinated software engineers.

API limitations manifest as scalability issues where legacy systems cannot handle modern AI workloads, creating performance bottlenecks that require creative solutions involving caching layers, API wrappers, and what can only be described as "digital duct tape." Add compliance requirements like GDPR, HIPAA, and industry-specific regulations, and you've got a regulatory framework that makes the tax code look simple.

Token Economics: The New Laws of Digital Physics

Current best practices for AI-friendly APIs reflect hard-won lessons from production deployments where every byte processed costs money, fundamentally changing design decisions in ways that would make efficiency experts weep with joy. PayPal's implementation of VERBOSITY parameters to control response detail exemplifies this approach—it's like having a conversation where you pay by the word, suddenly making everyone very interested in brevity.

Studies show Markdown is 15% more token-efficient than JSON, while TSV uses 50% fewer tokens. Documentation has evolved from static reference material to runtime configuration—AI processes documentation with every decision, making consistency critical. Mismatches between docs and API behavior cause production failures that are part technical error, part existential crisis.
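You can get an intuition for why tabular formats come out ahead by serializing the same records both ways. The sketch below compares character counts, which are only a rough proxy for tokens (real counts depend on the tokenizer), and the sample rows are invented.

```python
import csv
import io
import json

# Invented sample records, identical content for both formats.
ROWS = [
    {"id": 1, "name": "Ada", "city": "London"},
    {"id": 2, "name": "Linus", "city": "Helsinki"},
    {"id": 3, "name": "Grace", "city": "New York"},
]


def to_tsv(rows):
    """Serialize a list of dicts as tab-separated text with one header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf, fieldnames=list(rows[0]), delimiter="\t", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


as_json = json.dumps(ROWS)
as_tsv = to_tsv(ROWS)
# JSON repeats every key on every record; TSV states the keys once in the header.
savings = 1 - len(as_tsv) / len(as_json)
```

The gap grows with the number of rows, since the per-record overhead of repeated keys, quotes, and braces is exactly what the header row amortizes away.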

Error handling has evolved to be self-healing, with responses including recovery suggestions, alternative endpoints, and actionable hints. Anthropic's Model Context Protocol (MCP) demonstrates successful patterns with 43% of LangSmith organizations now using MCP traces. The protocol achieves 30-minute integration times through clear server-client separation and standardized communication layers—it's like IKEA instructions, but for AI integration, and they actually make sense.
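As a rough picture of what a "self-healing" error might carry, here is a sketch of a not-found response that includes a recovery hint, an alternative endpoint, and near-miss suggestions. The payload shape, field names, and the `/search` path are assumptions for illustration; they are not part of MCP or any published standard.

```python
import difflib


def not_found_error(resource: str, requested_id: str, known_ids: list[str]) -> dict:
    """Build an error payload that tells an AI caller how to recover."""
    suggestions = difflib.get_close_matches(requested_id, known_ids, n=3)
    return {
        "error": {
            "code": "not_found",
            "message": f"No {resource} with id '{requested_id}'.",
        },
        "recovery": {
            # Plain-language hint the model can act on without human help.
            "hint": f"Search for the {resource} by attribute, then retry with a valid id.",
            "alternative_endpoint": f"/{resource}s/search",
            "did_you_mean": suggestions,
        },
    }
```

A human developer would read the docs after a 404; a model will happily read the error body instead, so the error body is where the guidance has to live.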

But even then, if you want the MCP server to be fully utilized, you have to create copious documentation, starting with the tool names.

Tools have to be small enough to deliver intelligence while large enough to help the model achieve business objectives. Variables need to be flexible to support the broad set of use cases a customer will drive through a chatbot.

You want API reflection like SOAP for the documentation, while still supporting void* | any | object parameters.

MCP tool design does not mean just replicating your RESTful pattern in MCP.

Success Patterns: The Digital DNA of Effective Integration

Analysis of successful LLM tool integrations reveals architectural patterns more consistent than human behavior. OpenAI's function calling architecture uses structured JSON schemas that models interpret to generate function arguments iteratively—think of it as a very polite, brilliant intern who always asks clarifying questions before proceeding.

LangChain's growth metrics—220% increase in GitHub stars and 300% increase in downloads—demonstrate the value of modular architectures enabling rapid composition. 40% of LangChain users integrate with vector databases, while 35-45% report increased resolution rates using multi-agent systems. It's like building with digital Lego blocks, if Lego blocks could think and occasionally argue with each other about the best approach to problem-solving—imagine the boys from South Park trying to build something together, but they're all actually competent.

Key success factors include explicit, detailed function schemas that reduce misinterpretation (think diplomatic cables rather than text messages), comprehensive error handling with recovery paths, and modular architectures supporting rapid experimentation. Google's Agent2Agent (A2A) protocol vision extends this further, proposing intelligent endpoints that adapt like consumers—imagine if your coffee machine could negotiate with your alarm clock about optimal wake-up times based on your sleep patterns and schedule.

Retrofitting Challenges: Digital Archaeology Meets Modern Needs

Technical challenges when retrofitting old systems for AI support follow patterns more predictable than reality TV plot lines. Legacy banking systems built on COBOL require middleware that's part translation service, part digital séance to connect with modern AI services. Healthcare systems struggle with interoperability despite FHIR standards—it's like everyone agreeing to speak English but using completely different dictionaries.

But to truly leverage the value of AI, enterprises are going to have to add a layer of data access and actions on top of legacy, mission-critical systems.

And no, Databricks, it isn’t just a layer around a lake. Correlated actions are part of the user experience, and object correlation is hard enough before you add a lake abstraction; you are asking the LLM to marry IDs across systems to take potentially one-way door actions.

Manufacturing systems face real-time processing demands that legacy architectures handle about as well as a horse handles highway traffic. Successful implementations require phased migration strategies, API-first architectures using RESTful services, and data preparation that's part cleaning, part digital exorcism.

Keller Williams connected legacy systems through middleware and APIs to power their AI CRM and personal assistant. Bank of America's API-first strategy resulted in 28% increased digital engagement and 12% improvement in customer retention. These successes required addressing fundamental incompatibilities between fixed-length records and dynamic schemas, outdated security protocols lacking modern encryption, and performance bottlenecks under AI workloads.

The Great API Paradigm Shift: From Rigid Contracts to Conversational Agreements

The evolution from traditional REST/SOAP APIs to AI-friendly patterns represents more than technical updates—it's like the difference between formal diplomatic protocols and actual human conversation. Traditional APIs assumed fixed schemas, human-centric design with visual interfaces, deterministic contracts, and sequential processing. AI-native patterns embrace ambiguity tolerance, context-aware responses, parallel processing, and self-healing capabilities. It's the difference between speaking like Mr. Mackey ("APIs are bad, mkay?") and having an actual, nuanced conversation about system architecture.

GraphQL has emerged as particularly well-suited for AI systems, enabling selective data fetching that reduces token costs, supporting nested queries for complex relationships, and providing schema introspection for dynamic operation discovery. It's like giving AI systems the ability to order à la carte instead of being forced to choose from predetermined combo meals.
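The token savings come from field selection: the caller names the fields it wants and nothing else crosses the wire. The sketch below imitates that idea in plain Python over nested dictionaries. It is not GraphQL and uses no GraphQL library; the `project` helper and the sample record are hypothetical.

```python
def project(data, selection):
    """Keep only the fields named in `selection`; nested dicts select sub-fields."""
    if isinstance(data, list):
        return [project(item, selection) for item in data]
    result = {}
    for field, sub in selection.items():
        if field in data:
            value = data[field]
            # A dict means "descend and select inside"; anything else means "take as-is".
            result[field] = project(value, sub) if isinstance(sub, dict) else value
    return result


# Invented record standing in for a verbose REST response.
CUSTOMER = {
    "id": 1,
    "name": "Ada",
    "email": "ada@example.com",
    "orders": [
        {"id": 10, "total": 25.0, "items": ["pen", "ink"]},
        {"id": 11, "total": 40.0, "items": ["paper"]},
    ],
}

slim = project(CUSTOMER, {"name": True, "orders": {"total": True}})
```

Here `slim` keeps the customer's name and each order total and drops the rest, which is the à la carte order in data form.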

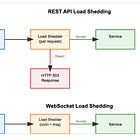

Event-driven architectures show similar promise: AsyncAPI achieved 17 million specification downloads in 2023 (up from 5 million in 2022), demonstrating rapid adoption for real-time AI processing needs. Streaming APIs and WebSockets maintain engagement during long operations, enable progressive response building, and support bidirectional communication for clarification mid-process, like having a conversation that can pause, rewind, and fast-forward as needed.

Rate Limiting: Teaching Traffic Laws to Digital Chaos

Traditional rate limiting for AI systems is like using horse-and-buggy traffic laws for Formula 1 racing—the basic concepts apply, but the implementation needs serious updating. AI systems exhibit bursty traffic patterns, high-volume legitimate usage that mimics DDoS attacks, and unpredictable behavior patterns that would confuse traditional security systems.

Modern LLM APIs implement multi-dimensional limits across requests per minute, tokens per minute or day, and concurrent request counts. Adaptive Rate Limiting approaches use dynamic quotas that adjust in real-time, ML-based anomaly detection to distinguish legitimate AI traffic from bots, and predictive analytics for demand forecasting.

It's like having a bouncer who's also a statistician and a mind reader.
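Stripped of the mind reading, the multi-dimensional part can be sketched as one gate that checks several counters at once. The class below uses a simple fixed one-minute window and made-up limits; production limiters typically use sliding windows or token buckets, so treat this as an illustration of the shape rather than a recommended design.

```python
import time


class MultiLimit:
    """Admit a request only if request, token, and concurrency limits all allow it."""

    def __init__(self, rpm, tpm, max_concurrent, clock=time.monotonic):
        self.rpm = rpm
        self.tpm = tpm
        self.max_concurrent = max_concurrent
        self.clock = clock
        self.window_start = clock()
        self.requests = 0
        self.tokens = 0
        self.in_flight = 0

    def _roll_window(self):
        # Reset the per-minute counters once 60 seconds have elapsed.
        now = self.clock()
        if now - self.window_start >= 60:
            self.window_start = now
            self.requests = 0
            self.tokens = 0

    def try_acquire(self, tokens):
        """Return None if admitted, otherwise the name of the limit that was hit."""
        self._roll_window()
        if self.requests + 1 > self.rpm:
            return "requests_per_minute"
        if self.tokens + tokens > self.tpm:
            return "tokens_per_minute"
        if self.in_flight + 1 > self.max_concurrent:
            return "concurrency"
        self.requests += 1
        self.tokens += tokens
        self.in_flight += 1
        return None

    def release(self):
        """Call when a request finishes so its concurrency slot frees up."""
        self.in_flight = max(0, self.in_flight - 1)
```

Returning the name of the limit that tripped, rather than a bare refusal, gives an AI client enough information to decide whether to wait, shrink its prompt, or serialize its calls.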

Stripe's multi-tiered approach demonstrates sophisticated backpressure through load shedding that discards lower-priority requests during overload, traffic categorization that prioritizes critical methods over test traffic, and gradual recovery that slowly increases capacity as systems stabilize. Research shows continuous batching achieves 10-20x better throughput than dynamic batching by grouping requests at the token generation level, efficiently scheduling memory use across varying sequence lengths, and balancing latency-throughput tradeoffs for different use cases.
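The load-shedding idea reduces to a small decision rule: as load climbs, drop the least important traffic first. The categories and thresholds below are invented for illustration and are not Stripe's actual configuration.

```python
# Hypothetical load level (0.0 to 1.0) at which each traffic category is dropped.
# None means the category is never shed.
SHED_THRESHOLDS = {"critical": None, "normal": 0.95, "test": 0.80}


def should_shed(category: str, load: float) -> bool:
    """Decide whether to reject a request given current load and its category."""
    # Unrecognized traffic is treated like the lowest-priority category.
    threshold = SHED_THRESHOLDS.get(category, 0.80)
    return threshold is not None and load >= threshold
```

At 85% load only test traffic is rejected; at 97% normal traffic goes too, and critical methods keep flowing. Gradual recovery would then lower the effective load figure in steps rather than all at once.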

One concerning habit we’re already seeing is API designers introducing API captchas to deprioritize “bot traffic.” Well, very shortly, dude, that “bot” is actually an LLM-powered user experience trying to use your API.

How are we going to detect a bot with a human behind it? How are we going to detect an LLM that can beat traditional captchas? I don’t see any good answers here.