Your AI Needs a Fight Club

... and Italian Pizza is Better!

You will want to read this article if you are trying to use AI to solve complex problems.

Problems that would benefit from a group of diverse perspectives, such as the legal, marketing, creative, or medical industries.

Please contact me if you would like to learn more.

I have been iterating on a concept, codenamed Hawking Edison, which explores how multi-agent Monte Carlo simulations can aid in testing messaging, ideation, and problem-solving. I first wrote about it here:

I then expanded Hawking Edison by turning it into a MCP enabled sub-agent for LLMs like Claude. While this significantly improved workflows and convenience, it also helped refine Claude's behavior:

(Includes a demo video of Hawking Edison)

I began to develop a hypothesis about ad-hoc, collaborative swarms of agents and their ability to solve problems.

I saw an opportunity to innovate in areas such as shared memories, workspaces, moderation, and adversarial competition with LLMs.

So I went to work testing this hypothesis.

The result?

OMG. Jaw-dropping.

This article will walk you through what I found.

The Failure Unfolding Before Our Eyes

Let me tell you about catastrophic failure dressed up as progress.

In 2025, 46% of companies are throwing out their AI proofs-of-concept. Not tweaking them. Not iterating on them. Throwing them out.

That's up from 17% just last year. These aren't mom-and-pop shops making rookie mistakes - these are enterprises with resources, teams, and consultants who have charged them millions to implement "cutting-edge AI solutions."

Here's what actually happened: They fell for the demo. They watched ChatGPT write poetry, saw Claude debug code, and marveled at Gemini's analysis. Then they tried to solve real problems - complex, multifaceted, ambiguous problems - and discovered what television discovered in the 1950s:

A single brilliant mind, no matter how brilliant, is a terrible way to create something that matters.

Before writers' rooms became standard, television was forgettable. Shows were churned out by individual writers working in isolation. Then came the collaborative model—and suddenly we got The Sopranos, The Wire, and Breaking Bad. Production values reached cinema quality. IMDB episode ratings show a sustained rise beginning in the early 2000s, directly correlating with the collaborative writers' room model becoming standard.

As I spent time with marketing, creative, and product teams, I realized there is an opportunity to add another creative layer to agents: the ability to create ad-hoc writers' rooms of AI agents.

Not pre-configured committees following rigid scripts. Not another wrapper around MCP that just gives you tool access. Actual dynamic panels where specialized agents debate, research, calculate, and create, while you either steer the conversation or let it run wild toward unexpected insights.

The Hollywood Lesson: Why Writers' Rooms Changed Everything

Let's talk about what made television great. The shift from individual writers to collaborative writers' rooms didn't just improve TV - it created a Golden Age. Shows that defined culture - The Sopranos, The Wire, Mad Men, Breaking Bad - all products of writers' rooms where diverse perspectives collided.

The data backs this up. The Writers Guild of America's list of 101 Best-Written TV Shows is dominated by shows produced in writers' rooms. Average IMDB ratings jumped significantly as the collaborative model became standard. Production values reached cinema-quality standards because multiple minds could collaborate to solve complex narrative problems.

A writers’ room follows no single formula. Some have two writers, some have thirty. Some work 9-to-5, others run until 3 AM fueled by coffee and arguments. But they all share one thing: the understanding that breakthrough ideas come from the collision of different perspectives, not from iteration on a single viewpoint.

This gave me an idea and a hypothesis. Lean into the probability gradient of large language models, stacking a non-linear outcome, while adding collaborative learning and a shared memory. Can ad-hoc learning panels drive a superior outcome compared to a single agent or structured agent workflow? I got my answer - a resounding yes.

I built this capability into Hawking Edison and saw a significant improvement in model capability for problem-solving, ideation, and refinement.

Not iteration on a single model's output, but genuine collaboration between diverse intelligences.

The Collaborative Intelligence Revolution No One Else Dares Build

Let's be clear about what everyone else is peddling. They're taking one AI model—possibly GPT-4, or perhaps Claude—and applying specific tools to it via MCP, claiming it's a revolutionary approach.

That's like giving Shakespeare a thesaurus and expecting him to write, direct, produce, and compose an opera.

Even Shakespeare wasn't that good. (Though to be fair, he did manage to invent about 1,700 words. Your ChatGPT mostly invents facts.)

Technical Deep Dive: Why MCP Isn't Enough

The Model Context Protocol is a step forward - it standardizes how AI models access tools. But here's what it doesn't do: It doesn't force iterative ideation. It doesn't create emergent intelligence through debate. It doesn't balance the inherent biases baked into each model by its creators. MCP gives you a USB-C port when what you need is a conference room.

Think of it this way: MCP is like giving each person in a meeting their own laptop with internet access. Useful? Sure. But it doesn't make them collaborate. It doesn't force the marketing person to defend their idea against the engineer's skepticism. It doesn't create the magic that happens when diverse perspectives collide.

TLDR;

Tool access alone doesn't create intelligence. It's like assuming a fully stocked kitchen makes you a chef. You need the creative tension, the debate, the "wait, what if we tried it completely differently?" moments that only come from actual collaboration.

What I built with Hawking Edison is fundamentally different. When you need to solve a complex problem, you don't prompt a single AI forty-seven times hoping for magic.

You convene a panel.

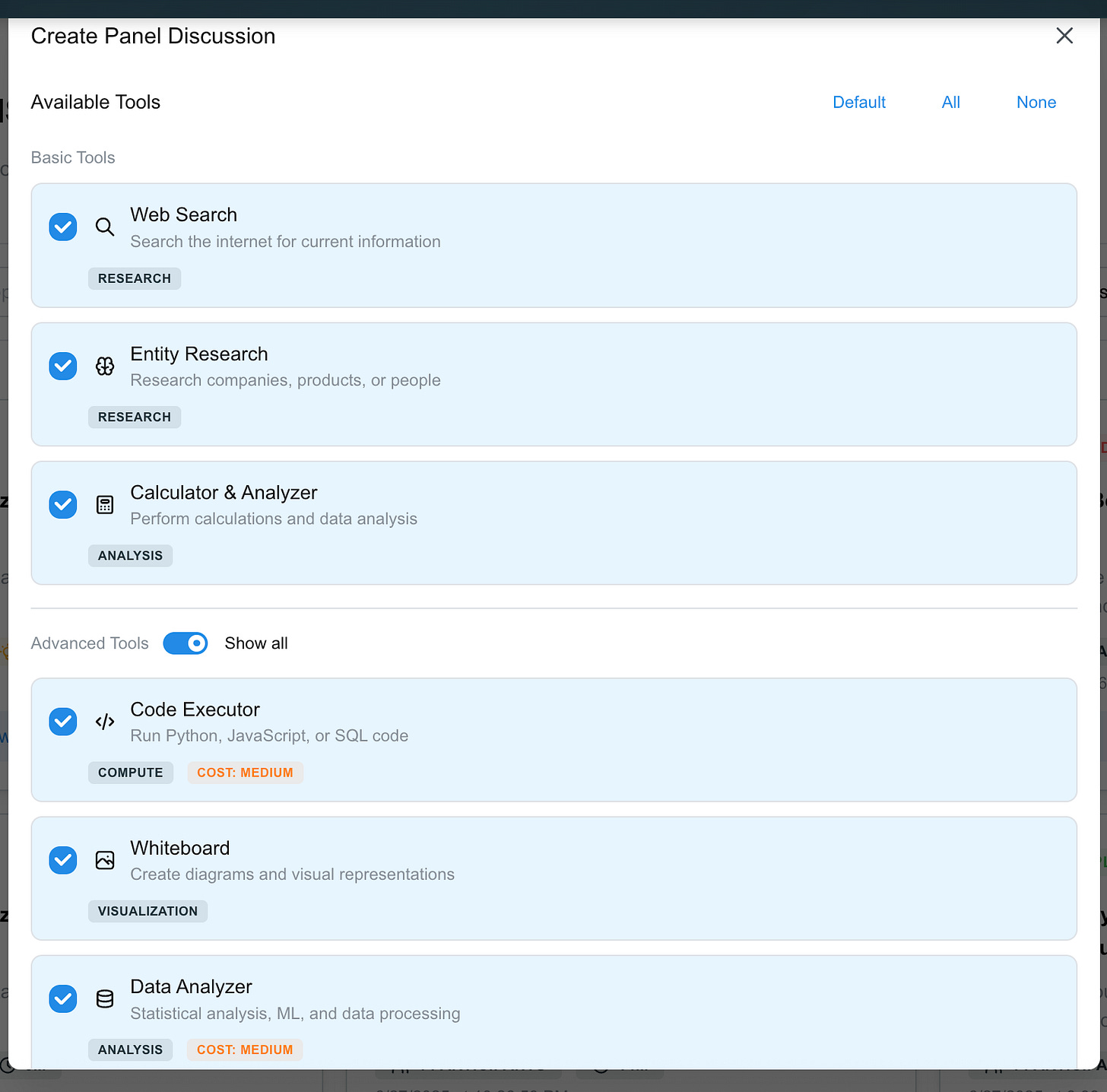

You bring together:

A CFO who doesn't just talk about numbers but actually searches current market data

A psychologist who researches real competitor strategies using entity research tools

An engineer who writes and executes Python code to model your scenarios

A creative director who sketches customer journeys on a shared digital whiteboard

A skeptical customer advocate who pokes holes in everyone's assumptions

And here's the key:

We use different models from different providers.

GPT-4 from OpenAI brings one set of biases. Claude from Anthropic brings another. Gemini from Google, yet another. When these models debate, their biases tend to cancel each other out. Their blind spots compensate for each other. What emerges is something none of them could achieve alone.

Why Structured Chains Kill Creativity (And We Don't)

Let me tell you about the other approach that has been proposed: structured multi-agent chains. You've seen them: Task gets broken down into steps. Step 1 goes to Agent A. Step 2 goes to Agent B. It's like a factory assembly line for thoughts. Henry Ford would be proud. Everyone else should be terrified. We lose a critical value point we all want from AI: creativity and capability.

Here's what happens when you organize agents like a corporate organizational chart: you get corporate organizational chart results.

It’s like Conway’s law applied to LLM agent execution trees.

Safe. Predictable. Utterly devoid of the spark that creates breakthrough solutions. It's like organizing a jazz band where each musician can only play during their designated 30-second window. You don't get jazz. You get elevator music.

So I developed a distributed, scalable, panel execution mechanism with shared memory (utilizing vector search for embeddings), access to tools (e.g., web search, coding, or subagent access), and moderation capabilities. The results continue to astound me and leave me in awe.

In our panels? Chaos reigns, and from chaos comes creation. We've watched in wonderment as:

A financial analyst interrupted a marketing pitch to run a stochastic Monte Carlo simulation, proving the TAM was off by 40%

A UX designer created a customer journey diagram mid-discussion that completely reframed the problem

A data scientist wrote a real-time clustering algorithm to segment users in ways nobody had considered

Agents literally competed to out-research each other, with one finding an obscure 2019 patent that invalidated the entire strategy

This isn't possible in rigid chains. When Agent A hands off to Agent B, there's no room for Agent B to say, "Wait, I think you're solving the wrong problem."

No space for creative collision.

No opportunity for the unexpected insight that comes from the new developer questioning the senior architect's assumptions.

The Science Behind Why This Works

Let me share some numbers that matter. Research shows that ensemble methods, which combine multiple models, achieve performance improvements of 10-40% over single models.

In the financial services industry, FICO's ensemble credit scoring models achieved a 15% reduction in classification errors. In healthcare diagnostics, multi-agent systems demonstrated an average accuracy improvement of 11.8%.

But here's the jaw-dropper:

Tree of Thought approaches achieved 74% success rate compared to GPT-4's 4% on complex reasoning tasks. That's a 1,750% improvement. Not a typo. Seventeen hundred and fifty percent.

Why? Because, as the research states, "The greater diversity among combined models, the more accurate the resulting ensemble model." It's not just about having multiple models - it's about having diverse models that approach problems differently, that have been trained differently, that have different failure modes.

Technical Deep Dive: The Power of Model Diversity

Every AI model carries the biases inherent in its training data and the choices of its creators. OpenAI optimizes for helpfulness. Anthropic for harmlessness. Google for factual accuracy. When you use just one, you inherit all its biases. But when you orchestrate a panel discussion between them? Magic happens.

Consider variance and bias - the two primary sources of ML error:

Bias: Systematic errors from oversimplified assumptions

Variance: Errors from sensitivity to small fluctuations in training data

Ensemble methods reduce both. Our panels achieve this by having models with different biases argue it out. The marketing expert using GPT-4 might be overly optimistic. The risk analyst using Claude might be overly cautious. The synthesis that emerges? Balanced. Nuanced. Right.

TLDR;

It's like having both an optimist and a pessimist plan your vacation. The optimist packs swimwear for Iceland in January. The pessimist packs a hazmat suit for Hawaii. Together, they might actually remember to pack regular clothes.

The Memory That Makes It All Possible

Here is where I had a gigantic leap in capability: Vectorized panel memory with semantic search. Using OpenAI embeddings and LangChain, every panel maintains perfect recall.

Every search result, every calculation, every sketch on the whiteboard, every exchange between participants - it's all stored and instantly searchable.

This isn't just logging. It's semantic memory. An agent can ask "What did the financial analyst say about market sizing in Asia?" and instantly retrieve not just exact matches, but conceptually related discussions from earlier in the conversation.

Technical Deep Dive: How Our Memory System Works

// Simplified version of our memory architecture class PanelMemory { private vectorStore: MemoryVectorStore; async addExchange(exchange: PanelExchange) { // Embed the exchange content const embedding = await openai.createEmbedding(exchange.content); // Store with metadata for filtering await this.vectorStore.add({ embedding, metadata: { participant: exchange.participant, timestamp: exchange.timestamp, type: exchange.type, // 'discussion', 'tool_result', 'consensus' tools_used: exchange.tools } }); } async semanticSearch(query: string, filters?: MemoryFilters) { // Semantic search across all panel history return this.vectorStore.similaritySearch(query, filters); } }TLDR

This isn't your grandfather's Ctrl+F search. When an agent asks "What were the concerns about Asian market expansion?" it finds not just mentions of "Asia" but conceptually related discussions about international regulations, currency risks, and that offhand comment about translation costs from two hours ago. It's like having a research assistant with perfect recall and a PhD in philosophy.

This enables panels to run for hours or even days. Agents can reference discussions from the beginning of a marathon session. They can build on ideas iteratively. They can return to abandoned threads when new information makes them relevant again.

Most importantly, it enables self-service, automated iteration on LLM-generated ideas. The panel doesn't just discuss once and stop. Ideas evolve. Consensus shifts as new evidence emerges, just like a real writers' room working on a season of television.

The Ad-Hoc Revolution: Choose Your Own Experts

Here's what nobody else understands: The power isn't in having a multi-agent system. Microsoft has AutoGen. Various startups have their frameworks.

The power lies in creating the right multi-agent system for this specific problem at this time.

Need to handle a PR crisis? With Hawking Edison, in three clicks, you've assembled:

PR strategist (using GPT-4 for creative messaging)

Legal advisor (using Claude for risk assessment)

Data analyst (using GPT-4 for sentiment modeling)

Customer advocate (using Claude for empathy)

Social media expert (using Gemini for trend analysis)

They're not just talking. The PR strategist searches for recent crisis examples. The legal advisor researches regulatory requirements. The data analyst models reputation impact with actual Python code. The customer advocate drafts responses. The social media expert analyzes current sentiment.

Ten minutes later, you have a comprehensive response strategy grounded in real data, tested through debate, and refined through iteration.

Planning a product launch? Your panel shifts:

Market researcher analyzing current trends

Financial modeler calculating unit economics

Creative director visualizing the customer journey

Skeptical enterprise buyer poking holes

Growth hacker optimizing viral coefficients

This is what we mean by ad-hoc collaboration. Not a template. Not a rigid flow.

A dynamic assembly of expertise that matches your exact need at this precise moment.

When Competition Creates Excellence

Set the panel to "debate mode" and watch the gloves come off. We've seen agents engage in intellectual combat that would make a courtroom drama look tame:

A pricing strategist and economist got into a 45-minute battle over elasticity curves, each pulling increasingly obscure research papers to support their position

Two engineers competed to optimize an algorithm, shaving microseconds off each other's solutions, ultimately achieving 10x performance improvement

Marketing and Sales agents engaged in what can only be described as "spreadsheet warfare" - each creating increasingly sophisticated models to prove their go-to-market strategy

The competition isn't petty - it's productive. When agents know they're being evaluated against peers, they bring their A-game. They dig deeper, analyze more thoroughly, and argue more effectively.

It's like watching the Olympics, except the events involve Python code and market research instead of javelins.

Letting Chaos Create: The Power of Undirected Panels

Sometimes, the best insights come from letting the experts run wild. Our system allows both human-steered and autonomous panel discussions. Set a topic, define success criteria, then step back and watch as:

Agents pursue tangential ideas that humans might prematurely shut down

Unexpected connections form between disparate concepts

Creative solutions emerge from the collision of different domains

The panel self-organizes around the most promising directions

It's like the difference between a brainstorming session with a strict agenda versus one where ideas flow freely. Both have their place.

Technical Deep Dive: Autonomous Panel Orchestration

// Autonomous discussion management class AutonomousOrchestrator { async managedDiscussion(config: PanelConfig) { while (!this.isConsensusReached() && turns < config.maxTurns) { // Dynamic speaker selection based on conversation flow const nextSpeaker = await this.selectNextSpeaker({ recentTopics: this.extractTopics(), participantExpertise: this.participants.map(p => p.expertise), speakingHistory: this.getSpeakingHistory(), pendingIdeas: this.getUnaddressedPoints() }); // Allow free-form exploration within bounds const response = await nextSpeaker.respond({ context: this.getFullContext(), constraints: config.boundaries, exploration_freedom: config.autonomyLevel }); // Track emerging themes this.updateEmergentTopics(response); } } }TLDR;

This is like being a kindergarten teacher, except the kids are AIs with PhDs and access to the internet. You set boundaries ("don't burn down the playground"), then watch as they build something you never imagined. Sometimes they make a rocket ship. Sometimes they solve world hunger. Sometimes they spend an hour debating whether hot dogs are sandwiches. All valuable in their own way.

We've seen autonomous panels:

Discover a competitor's unannounced product by connecting disparate patent filings, job postings, and supply chain data

Pivot an entire product strategy after the ML engineer created a proof-of-concept mid-discussion that outperformed the original plan

Generate 17 different revenue models, test each with financial projections, then synthesize a hybrid approach no human had conceived

Get into a heated debate about user privacy that led to a novel encryption method (the cryptography expert literally wrote the algorithm to prove their point)

The Tools That Make It Real

Let me be specific about what we've built, because the details matter:

Web Search Integration: Not just search access - intelligent, contextual search where agents know what to look for based on the discussion. They cite sources. They compare findings. They challenge each other's sources.

Entity Research: In-depth investigations into companies, products, and individuals. When an agent mentions a competitor, another agent can immediately research their strategy, pricing, and customer reviews. It's like having a research team working in real-time alongside your strategy session.

Code Execution: Our agents don't just talk about numbers - they calculate them. Complete Python environment with pandas, numpy, scipy. They can analyze your CSV, model your scenarios, run Monte Carlo simulations, and create visualizations. During the discussion. As evidence for their arguments.

# Actual code an agent might write during the discussion

import pandas as pd

import numpy as np

from scipy import stats

def analyze_market_opportunity(data_path, assumptions):

df = pd.read_csv(data_path)

# Calculate TAM with confidence intervals

market_size = df['segment_size'].sum()

penetration_scenarios = stats.beta.rvs(

assumptions['alpha'],

assumptions['beta'],

size=10000

)

revenue_projections = market_size * penetration_scenarios

return {

'expected_revenue': np.mean(revenue_projections),

'confidence_interval': np.percentile(revenue_projections, [5, 95]),

'probability_exceed_target': np.mean(revenue_projections > assumptions['target'])

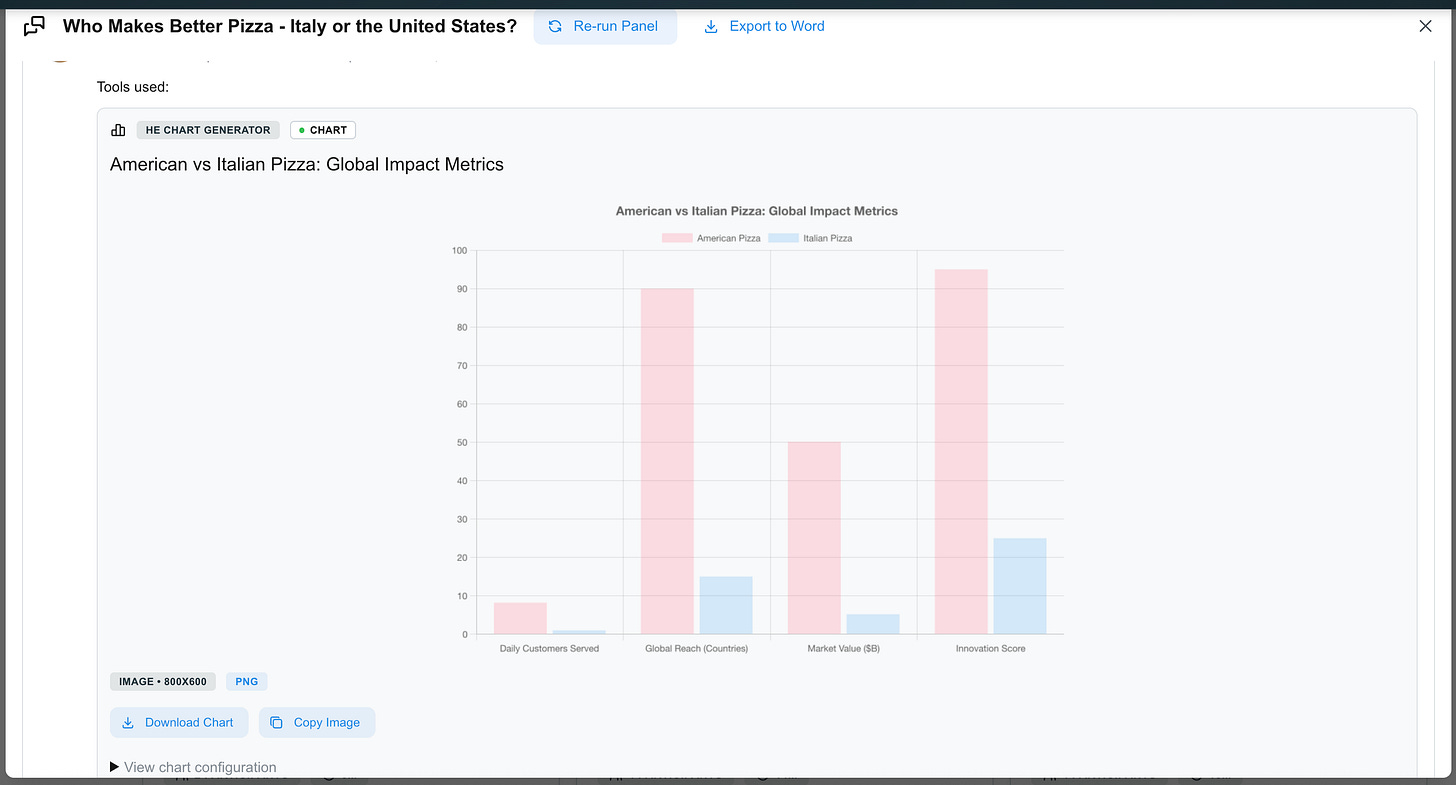

}The point: This isn't hypothetical analysis. We watched a panel where the optimistic sales director claimed, "We'll easily capture 20% market share!" The data scientist didn't just disagree - they uploaded historical data, conducted a competitive analysis, performed a stochastic simulation with 10,000 scenarios, and created a visualization that showed the probability distribution. The sales director's response? "Okay, maybe 12%." Math wins arguments.

Visual Collaboration: Digital whiteboards where agents sketch customer journeys, architecture diagrams, decision trees. Not templates - actual creative visualization emerging from the discussion.

We've witnessed the CFO create a complex financial flow diagram to explain why the marketing team's "simple" pricing model would create accounting nightmares. The UX designer responded with their own diagram showing the customer confusion it would prevent. They went back and forth, diagrams evolving, until they created a pricing model that was both customer-friendly AND wouldn't require hiring seventeen accountants.

Shared Workspace: Documents that evolve through the conversation. Not just meeting notes - living documents where strategies crystallize, where plans take shape, where consensus becomes action.

Hyper-Realistic Mode: The culmination of all these tools, creating discussions grounded in real data, fundamental research, and real analysis. Not roleplay. Not a simulation. Actual expertise augmented by actual capabilities. It's the difference between playing air guitar and playing Hendrix at Woodstock.

The Economics of Insight

Let's talk money. Traditional single-agent AI approaches suffer from 80-85% failure rates. Only 4% of companies generate substantial value from AI investments.

The waste is staggering, both in direct costs and opportunity costs.

Our panels? Different story entirely.

By combining multiple perspectives and grounding discussions in real data, you can drive productivity gains in specific use cases, improve outcomes in call centers, accelerate decisions, and drive faster product development and creative cycles.

But the real value isn't in efficiency - it's in avoiding catastrophic decisions. When the legal advisor raises compliance concerns the marketing expert missed, when the data analyst proves the financial model is too optimistic, when the customer advocate explains why users will revolt - that's not inefficiency. That's prevention of failure.

An Infrastructure Opportunity

Running swarms of ad-hoc large language models at scale is going to be difficult. To truly make it economical, an abstraction layer capable of crossing private, public, and on-premises cloud implementations will be essential.

Thankfully, I have one of those - Project Robbie.

Project Robbie abstracts running asynchronous machine learning jobs at scale across on-premises and cloud infrastructure. One of my next steps is to integrate the asynchronous panel orchestration mechanism with Robbie, allowing LLM execution, along with the shared execution workspace and vector embedding cache, to be executed across a broad range of infrastructure targets.

Your Move

Here's the bottom line: We're at a critical juncture. The companies are still iterating with single models, still hoping the next prompt will be magic, still falling for the "just add MCP" pitch - they're tomorrow's 46% failure statistic.

The companies that understand that intelligence is collaborative, that expertise is specialized, and that the best solutions emerge from structured debate grounded in evidence are building the future.

I was personally awestruck by how a shared, collaborative, vector embedding memory between multiple LLMs from different providers, combined with gamification, goal setting, and recommendation requirements, drove valuable results. And all of this can be achieved with a self-service tool and a simple prompt to initiate the discussion.

Then, if you give the agents access to powerful tools like search, computing, subagents, custom tools (think - access to CRM), and entity detection, you add another layer of value, richness, and capability.

I haven't just built a multi-agent AI. I've built the ability to instantly create the right collaborative intelligence for any challenge.

Ad-hoc panels that assemble, investigate, debate, and deliver. Writers' rooms for the age of AI. Self-service, automated iteration on ideas with genuine collaborative problem-solving.

There is a fantastic opportunity in scalable collaborative intelligence, shared memory, context, and joint problem-solving.